Dataset

DummyDataset(Dataset)

name2dataset[self.cfg['train_dataset_type']](self.cfg['train_dataset_cfg'], True)

Equivalent to: DummyDataset(self.cfg['train_dataset_cfg'], True)

- is_train:

__getitem__ : return {}

__len__ : return 99999999

- else:

__getitem__ : return {'index': index}

__len__ : return self.test_num

not is_train:

```python
if not is_train:
    # returns an instance, e.g. GlossyRealDatabase(self.cfg['database_name'])
    database = parse_database_name(self.cfg['database_name'])
    # use the database's get_img_ids method to obtain train_ids and test_ids
    train_ids, test_ids = get_database_split(database, 'validation')
    self.train_num = len(train_ids)
    self.test_num = len(test_ids)
    print('val', is_train)
```
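Both branches can be mirrored by a stand-alone class. This is only a sketch, not the NeRO code. `test_num` is a hypothetical constructor argument that replaces the value computed via `parse_database_name`/`get_database_split`. The class also does not subclass `Dataset`; it relies on the same `__getitem__`/`__len__` protocol that PyTorch map-style datasets use.

```python
class DummyDataset:
    """Sketch of a dataset that hands out placeholders instead of data.

    Hypothetical simplification: `test_num` is passed in directly instead of
    being derived from the database split.
    """

    TRAIN_LEN = 99999999  # effectively endless; training is bounded by step count

    def __init__(self, cfg, is_train, test_num=0):
        self.cfg = cfg
        self.is_train = is_train
        self.test_num = test_num

    def __getitem__(self, index):
        # Training items carry no data: the renderer samples rays from its own batch.
        if self.is_train:
            return {}
        # Validation items only tell the renderer which test image to render.
        return {'index': index}

    def __len__(self):
        return self.TRAIN_LEN if self.is_train else self.test_num
```

The empty training items fit the renderer's `forward`, which presumably ignores `data` during training and calls `train_step(step)` on its own ray batch.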

database.py:

- parse_database_name

```python
def parse_database_name(database_name: str) -> BaseDatabase:
    ...
```

- get_database_split

- shuffles self.img_ids and splits them into test and train

```python
def get_database_split(database: BaseDatabase, split_type='validation'):
    ...
```
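Below is a minimal sketch of the validation split: shuffle, then hold out the first id (the renderer notes later give the split as `img_ids[1:]` / `img_ids[:1]`). The `seed` parameter and the function name are hypothetical; the seed the original uses is not shown here.

```python
import random

def get_database_split_sketch(img_ids, seed=0):
    """Shuffle a copy of the image ids and hold out the first one for validation."""
    ids = list(img_ids)               # copy, so the caller's list is not mutated
    random.Random(seed).shuffle(ids)  # deterministic shuffle for a fixed seed
    train_ids, test_ids = ids[1:], ids[:1]
    return train_ids, test_ids
```

Shuffling a copy is a design choice of this sketch. The original may shuffle `self.img_ids` in place.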

GlossyRealDatabase

init

- meta_info

```python
meta_info = {
    ...
}
```

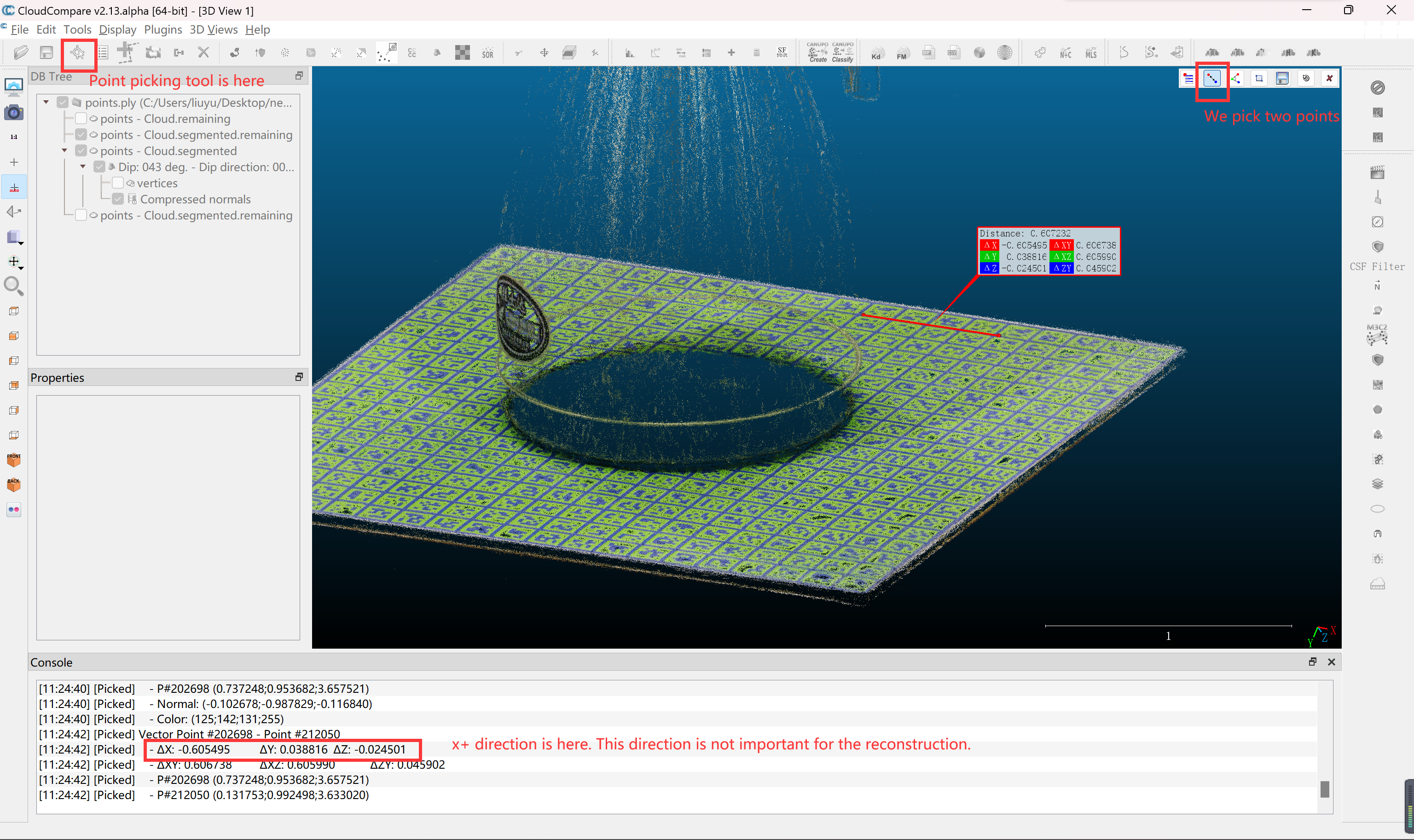

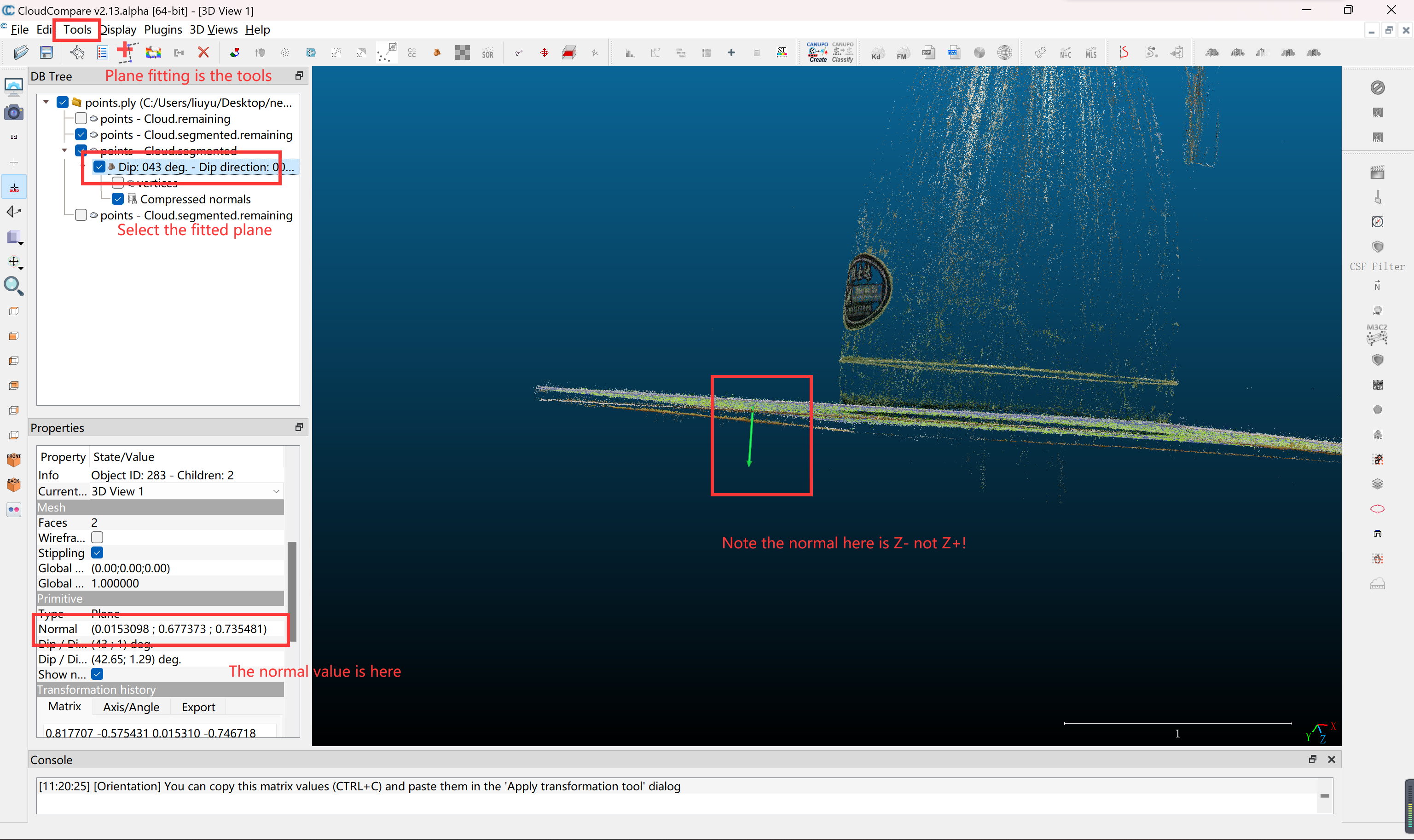

Data obtained from CloudCompare: forward is a manually chosen forward direction, and up is the normal of a small planar patch cropped by hand.

NeRO/custom_object.md at main · liuyuan-pal/NeRO (github.com)

_parse_colmap: reads the data from cache.pkl; if cache.pkl does not exist, it reads /colmap/sparse/0 instead and writes the data to cache.pkl.

- self.poses: (3,4)

- self.Ks: (3,3)

- self.image_names: dict mapping img_ids to img_name

- self.img_ids: list of length num_images
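The read-or-build behavior of `_parse_colmap` is a plain pickle cache. This sketch uses a hypothetical `compute_fn` hook in place of the actual COLMAP parsing.

```python
import os
import pickle

def load_with_cache(cache_path, compute_fn):
    """Return cached data if cache_path exists; otherwise compute it and write the cache.

    `compute_fn` is a hypothetical stand-in for reading colmap/sparse/0.
    """
    if os.path.exists(cache_path):
        with open(cache_path, 'rb') as f:
            return pickle.load(f)
    data = compute_fn()
    with open(cache_path, 'wb') as f:
        pickle.dump(data, f)
    return data
```

One consequence of this pattern: a stale cache.pkl is reused silently, so it has to be deleted after re-running COLMAP.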

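_normalize: reads object_point_cloud.ply to get the coordinates of the cropped object point cloud, normalizes them into the unit bound, and converts the world basis to the manually defined frame given by up and forward. That is:

- _normalize transforms the point cloud coordinates from the original world frame w into a new frame w'

- it converts each pose from w2c to w'2c, where the origin of w' is the center of object_point_cloud.ply and the xyz axes are the custom axes shown in the figure above, while the scale is left unchanged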
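The change of frame can be sketched as follows. Several details are assumptions, not taken from these notes: the origin is the bounding-box center, and the axes are a right-handed (right, forward, up) triple built from `up` and `forward`. The `rescale` flag covers both readings above: normalizing into the unit bound (`True`) or keeping the scale (`False`).

```python
import numpy as np

def normalize_frame(points, up, forward, poses, rescale=True):
    """Sketch: express a point cloud and w2c poses in a custom frame w'."""
    center = 0.5 * (points.max(0) + points.min(0))
    scale = 1.0 / np.max(np.linalg.norm(points - center, axis=1)) if rescale else 1.0
    up = up / np.linalg.norm(up)
    right = np.cross(forward, up)
    right /= np.linalg.norm(right)
    forward = np.cross(up, right)            # re-orthogonalized forward
    rot = np.stack([right, forward, up], 0)  # rows: axes of w' expressed in w

    # x' = scale * rot @ (x - center)
    new_points = scale * (points - center) @ rot.T
    new_poses = []
    for pose in poses:                       # pose: (3, 4) w2c, x_c = R x + t
        R, t = pose[:, :3], pose[:, 3]
        R_new = R @ rot.T                    # still a rotation
        t_new = scale * (t + R @ center)     # camera coordinates get multiplied by scale
        new_poses.append(np.concatenate([R_new, t_new[:, None]], 1))
    return new_points, np.stack(new_poses), scale
```

Substituting x = rot^T x' / scale + center into R x + t shows that the new pose yields scale times the old camera coordinates. The pixel projections are therefore unchanged.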

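database_name: for real/bear/raw_1024, the relevant part is the last component, raw_1024.

- If it starts with raw:

- the images in images are resized and saved into images_raw_1024

- the entries of self.Ks are updated accordingly, since W and H have been rescaled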

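Updating `self.Ks` after resizing amounts to scaling the intrinsics rows. These notes do not say how the target size follows from raw_1024, so this sketch takes both sizes explicitly. It uses the common approximation that ignores half-pixel offsets.

```python
import numpy as np

def rescale_intrinsics(K, src_hw, dst_hw):
    """Adapt a 3x3 pinhole K to an image resized from src_hw=(H, W) to dst_hw."""
    (h0, w0), (h1, w1) = src_hw, dst_hw
    # fx and cx scale with the width ratio, fy and cy with the height ratio
    S = np.diag([w1 / w0, h1 / h0, 1.0])
    return S @ K
```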

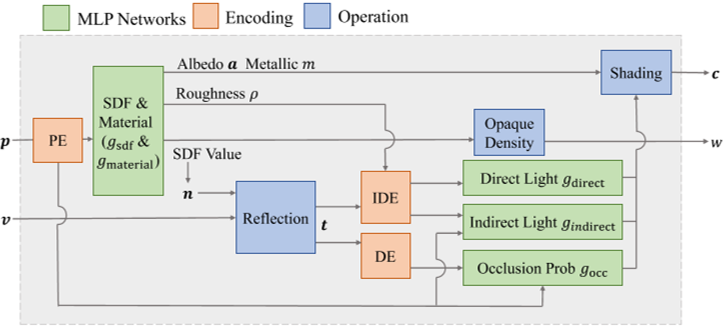

Network&Render

NeROShapeRenderer

MLP

SDFNetwork

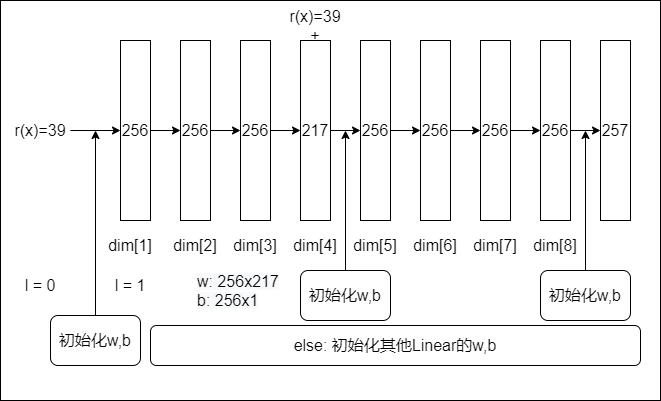

- 39—>256—>256—>256—>217—>256—>256—>256—>256—>257
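The 217 in this chain comes from the skip connection. Assuming NeRO keeps NeuS's SDFNetwork convention, the layer feeding the skip layer outputs `d_hidden - d_in`. Concatenating the 39-dim encoded input then brings the width back to 256. This sketch reproduces the chain.

```python
def sdf_layer_widths(d_in=39, d_hidden=256, n_layers=8, skip_in=(4,), d_out=257):
    """Widths of a NeuS-style SDF MLP: input, then the output width of each linear layer."""
    dims = [d_in] + [d_hidden] * n_layers + [d_out]
    widths = [d_in]
    for l in range(len(dims) - 1):
        # shrink the layer before a skip so that concat(out, input) == d_hidden
        widths.append(dims[l + 1] - d_in if (l + 1) in skip_in else dims[l + 1])
    return widths
```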

NeRFNetwork

- 84—>256—>256—>256—>256—>256+84—>256—>256—>256+27—>128—>3

- 84—>256—>256—>256—>256—>256+84—>256—>256—>256—>1

AppShadingNetwork

- metallic_predictor

- 259—>256—>256—>256—>1

- 259 = (256 + 3) = (feature + points)

- roughness_predictor

- 259—>256—>256—>256—>1

- albedo_predictor

- 259—>256—>256—>256—>3

- outer_light: direct light

- 72—>256—>256—>256—>3

- 72 = ref_roughness = self.sph_enc(reflective, roughness)

- or self.sph_enc(normals, roughness) —> diffuse_lights

- inner_light: indirect light

- 123—>256—>256—>256—>3

- 123 = (51 + 72) = (pts + ref_roughness)

- pts : 2x3x8 + 3 = 51 (L=8)

- inner_weight: occlusion probability occ_prob

- 90—>256—>256—>256—>1

- 90 = (51 + 39) = (pts + ref_)

- ref_ = self.dir_enc(reflective) = 2x3x6 + 3 = 39(L=6)

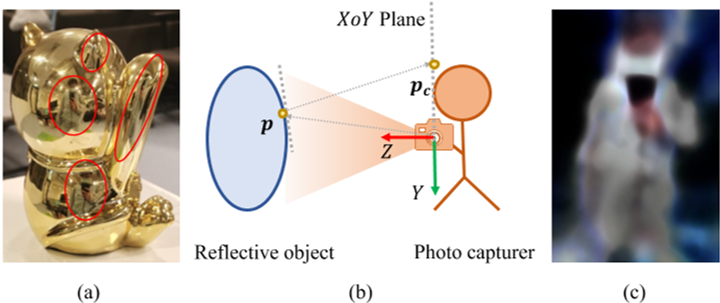

- human_light_predictor: $[\alpha_{\mathrm{camera}},\mathrm{c}_{\mathrm{camera}}]=g_{\mathrm{camera}}(\mathrm{p}_{\mathrm{c}})$

- 24—>256—>256—>256—>4

- 24 = pos_enc = IPE(mean, var, 0, 6)

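The 72 input channels are consistent with Ref-NeRF's integrated directional encoding (IDE) with 5 degrees. Treat this as an assumption inferred from the number 72, not from the code. Degrees l = 1, 2, 4, 8, 16 each use orders m = 0..l, and every harmonic contributes a real and an imaginary part. Roughness damps degree l by exp(-l(l+1)/2 · roughness), so `sph_enc(x, 0)` applies no damping.

```python
import math

def ide_num_features(deg_view):
    """Output width of Ref-NeRF's IDE with degrees 1, 2, 4, ..., 2**(deg_view - 1)."""
    n_ml = sum(2 ** i + 1 for i in range(deg_view))  # (l, m) pairs with m = 0..l
    return 2 * n_ml                                   # real + imaginary parts

def ide_attenuation(l, roughness):
    """Damping factor applied to the degree-l harmonics."""
    return math.exp(-l * (l + 1) / 2.0 * roughness)
```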

| MLP | Encoding | in_dims | out_dims | layer | neurons |
|---|---|---|---|---|---|
| SDFNetwork | VanillaFrequency(L=6) | 2x3x6+3=39 | 257 | 8 | 256 |
| SingleVarianceNetwork | None | … | … | … | … |
| NeRFNetwork | VanillaFrequency(Lp=10,Lv=4) | 2x4x10+4=84 (NeRF++: 4D input) & 2x3x4+3=27 | 4 | 8 | 256 |
| AppShadingNetwork | VanillaFrequency | … | … | … | … |
| metallic_predictor | None | 256 + 3 = 259 | 1 | 4 | 256 |
| roughness_predictor | None | 256 + 3 = 259 | 1 | 4 | 256 |
| albedo_predictor | None | 256 + 3 = 259 | 3 | 4 | 256 |
| outer_light | IDE(Ref-NeRF) | 72 | 3 | 4 | 256 |
| inner_light | VanillaFrequency+IDE | 51 + 72 = 123 | 3 | 4 | 256 |
| inner_weight | VanillaFrequency | 51 + 39 = 90 | 1 | 4 | 256 |
| human_light_predictor | IPE | 2 x 2 x 6 = 24 | 4 | 4 | 256 |
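The in_dims of the VanillaFrequency entries follow from d + 2·d·L: the raw input plus a sine and a cosine per frequency. Here is a sketch of the NeRF-style encoding with frequencies 2^k, which reproduces 39, 51, 27 and 84.

```python
import numpy as np

def freq_encode(x, n_freqs, include_input=True):
    """NeRF-style encoding: [x, sin(2^k x), cos(2^k x)] for k = 0..n_freqs-1."""
    feats = [x] if include_input else []
    for k in range(n_freqs):
        feats.append(np.sin((2.0 ** k) * x))
        feats.append(np.cos((2.0 ** k) * x))
    return np.concatenate(feats, -1)
```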

- FG_LUT: shape [1,256,256,2], loaded from assets/bsdf_256_256.bin
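A [1,256,256,2] table like this is typically the split-sum pre-integrated BRDF. It stores a (scale, bias) pair so that specular ≈ prefiltered_light · (F0 · scale + bias). This sketch rests on several assumptions: raw float32 file contents, the first lookup coordinate being NdotV and the second roughness, and nearest-neighbour lookup where a renderer would normally interpolate bilinearly.

```python
import numpy as np

def load_fg_lut(path):
    # Assumption: raw native-endian float32 values, 256 * 256 * 2 of them
    return np.fromfile(path, dtype=np.float32).reshape(1, 256, 256, 2)

def split_sum_specular(lut, n_dot_v, roughness, f0, prefiltered):
    """n_dot_v, roughness: (N,); f0, prefiltered: (N, 3). Nearest-neighbour lookup."""
    res = lut.shape[1]
    u = np.clip(n_dot_v, 0.0, 1.0)    # texture x (column), assumed to be NdotV
    v = np.clip(roughness, 0.0, 1.0)  # texture y (row), assumed to be roughness
    col = np.minimum((u * res).astype(int), res - 1)
    row = np.minimum((v * res).astype(int), res - 1)
    scale_bias = lut[0, row, col]     # (N, 2)
    return prefiltered * (f0 * scale_bias[:, 0:1] + scale_bias[:, 1:2])
```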

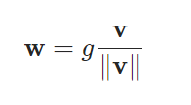

weight_norm: each weight is reparameterized into weight_v (direction) and weight_g (magnitude)
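`torch.nn.utils.weight_norm` registers `weight_g` and `weight_v` and recomputes the weight as g · v / ||v||. With the default `dim=0`, there is one norm per output row of a Linear weight. Newer PyTorch versions deprecate this helper in favor of `torch.nn.utils.parametrizations.weight_norm`. This NumPy sketch shows the recombination.

```python
import numpy as np

def weight_norm_weight(weight_v, weight_g):
    """w = g * v / ||v||, with one norm per output row (torch default dim=0).

    weight_v: (out_features, in_features); weight_g: (out_features, 1).
    """
    norm = np.linalg.norm(weight_v, axis=1, keepdims=True)
    return weight_g * weight_v / norm
```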

Render

_init_dataset

- parse_database_name:

self.database = GlossyRealDatabase(self.cfg['database_name'])

- get_database_split: self.train_ids, self.test_ids = img_ids[1:], img_ids[:1]

- build_imgs_info(self.database, self.train_ids): loads train_imgs_info for the train_ids

- train_imgs_info: {'imgs': images, 'Ks': Ks, 'poses': poses}

- imgs_info_to_torch(self.train_imgs_info, device='cpu'): converts train_imgs_info to torch tensors; 'imgs' is additionally permuted with permute(0,3,1,2); everything is then moved to device

- test_imgs_info is loaded the same way, converted to torch and moved to device

_construct_ray_batch(self.train_imgs_info): returns ray_batch (stored as train_batch), poses of shape (imn,3,4) (stored as self.train_poses), rn = imn * h * w (stored as tbn), h and w, where

ray_batch = {'dirs': dirs.float().reshape(rn, 3).to(device), 'rgbs': imgs.float().reshape(rn, 3).to(device), 'idxs': idxs.long().reshape(rn, 1).to(device)}

- the [:3,:3] block of each pose is an orthogonal matrix

_shuffle_train_batch: shuffles train_batch, i.e. the per-pixel dir and rgb entries
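Here is a NumPy sketch of building and shuffling such a ray batch. It makes three assumptions. Images are kept channel-last (the notes permute them to channel-first before this step). Pixel coordinates are taken at integer positions, without a +0.5 center offset. `dirs` are the unnormalized camera-space directions K⁻¹[x, y, 1]. All function names are hypothetical.

```python
import numpy as np

def construct_ray_batch(imgs, Ks):
    """imgs: (imn, h, w, 3); Ks: (imn, 3, 3). Returns the flattened batch and rn."""
    imn, h, w, _ = imgs.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing='ij')
    pix = np.stack([xs, ys, np.ones_like(xs)], -1).astype(np.float64)  # (h, w, 3)
    dirs = np.einsum('nij,hwj->nhwi', np.linalg.inv(Ks), pix)          # camera-space dirs
    idxs = np.broadcast_to(np.arange(imn)[:, None, None], (imn, h, w))  # image index per pixel
    rn = imn * h * w
    batch = {'dirs': dirs.reshape(rn, 3), 'rgbs': imgs.reshape(rn, 3), 'idxs': idxs.reshape(rn, 1)}
    return batch, rn

def shuffle_ray_batch(batch, rng):
    """Apply one permutation to every entry so dir, rgb and idx stay aligned."""
    perm = rng.permutation(len(batch['rgbs']))
    return {k: v[perm] for k, v in batch.items()}
```

Using one shared permutation is the key point. Shuffling each key independently would pair pixels with the wrong colors.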

- forward

- is_train:

outputs = self.train_step(step)

- else:

- index = data['index']

- outputs = self.test_step(index, step=step)

```python
def train_step(self, step):
    ...
```

train_step

_process_ray_batch

- input: ray_batch, poses

- output: rays_o, rays_d, near, far, human_poses[idxs]

- transforms the camera origin o from the original world frame into the manually defined world frame w', and likewise transforms the dirs in ray_batch into w'

- near_far_from_sphere:

- computes near and far from rays_o and rays_d

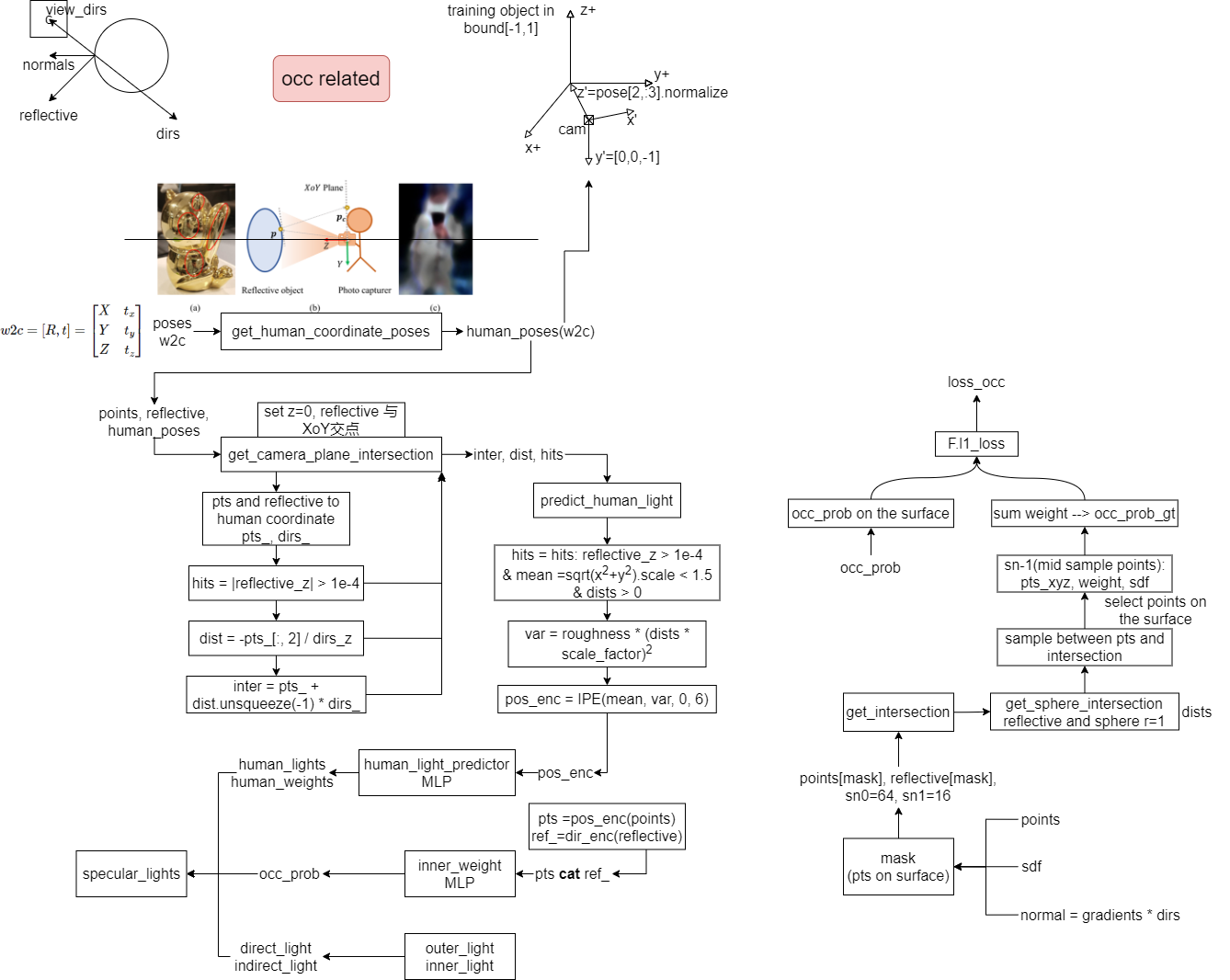

- get_human_coordinate_poses

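- derives human_poses (w2c) from pose: used to decide whether a ray cast from the camera and reflected off the object hits the human

- human_poses: the origin is at the camera origin (unchanged); the z axis is the unit vector of the projection of the original camera z axis onto the plane xoy', which is parallel to the xoy plane of w'; the y axis is the unit vector along -z of w'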
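The three steps of `_process_ray_batch` can be sketched with NumPy. This is not the NeRO code, and several choices are assumptions. Poses are taken to be w'2c already, with x_c = R x + t. Near/far use the NeuS-style approximation: an interval of ± radius around the point of closest approach to the origin. The human frame follows the description above and completes it with x = y × z.

```python
import numpy as np

def rays_from_w2c(pose, dirs_cam):
    """pose: (3, 4) w2c; dirs_cam: (N, 3) camera-space directions."""
    R, t = pose[:, :3], pose[:, 3]
    rays_o = -R.T @ t                  # camera center (R is orthogonal)
    rays_d = dirs_cam @ R              # row-wise R^T d
    rays_d = rays_d / np.linalg.norm(rays_d, axis=-1, keepdims=True)
    return np.broadcast_to(rays_o, rays_d.shape), rays_d

def near_far_from_sphere(rays_o, rays_d, radius=1.0):
    a = np.sum(rays_d ** 2, -1, keepdims=True)
    b = np.sum(rays_o * rays_d, -1, keepdims=True)
    mid = -b / a                       # depth of closest approach to the origin
    return mid - radius, mid + radius

def human_coordinate_pose(pose):
    """w2c pose of a frame at the camera center (see the description above)."""
    R, t = pose[:, :3], pose[:, 3]
    center = -R.T @ t
    z = R[2].copy()                    # camera z axis in world coordinates
    z[2] = 0.0                         # project onto a plane parallel to xoy of w'
    z /= np.linalg.norm(z)             # degenerate if the camera looks straight up/down
    y = np.array([0.0, 0.0, -1.0])     # -z of w'
    x = np.cross(y, z)                 # completes a right-handed frame
    R_h = np.stack([x, y, z], 0)
    return np.concatenate([R_h, (-R_h @ center)[:, None]], 1)
```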

- get_anneal_val(step)

- if self.cfg['anneal_end'] < 0: return 1

- else: return np.min([1.0, step / self.cfg['anneal_end']])

- render(rays_o, rays_d, near, far, human_poses, perturb_overwrite=-1, cos_anneal_ratio=0.0, is_train=True, step=None)

- input: rays_o, rays_d, near, far, human_poses, -1, self.get_anneal_val(step), is_train=True, step=step

- output: ret = outputs

- sample_ray(rays_o, rays_d, near, far, perturb)

- same as NeuS: up-sampling, then cat_z_vals stacks the new z_vals onto the existing ones

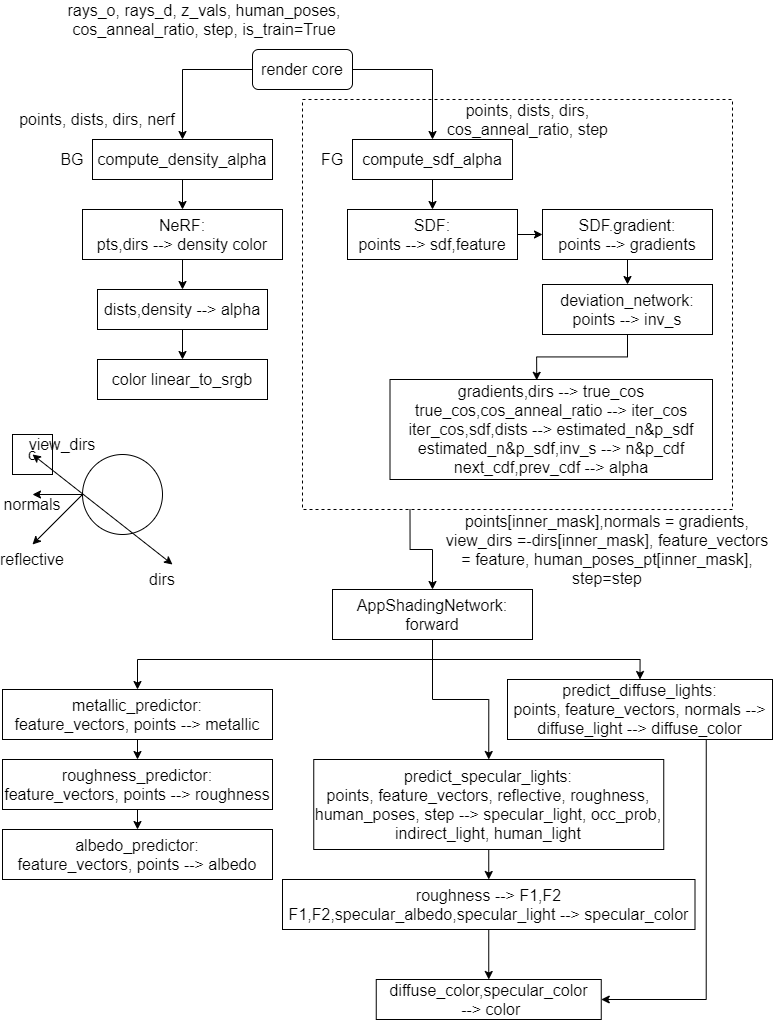
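The core of the up-sampling step is inverse-CDF sampling from per-interval weights, followed by a sorted merge. This sketch uses NeuS's deterministic sample positions. In NeuS, the weights themselves come from the SDF with a fixed inv_s; here they are simply passed in.

```python
import numpy as np

def sample_pdf(bins, weights, n_samples):
    """bins: (R, M + 1) interval edges; weights: (R, M). Deterministic samples."""
    weights = weights + 1e-5                          # avoid an all-zero pdf
    pdf = weights / np.sum(weights, -1, keepdims=True)
    cdf = np.concatenate([np.zeros_like(pdf[:, :1]), np.cumsum(pdf, -1)], -1)
    u = np.linspace(0.5 / n_samples, 1.0 - 0.5 / n_samples, n_samples)
    u = np.broadcast_to(u, (bins.shape[0], n_samples))
    inds = np.stack([np.searchsorted(c, uu, side='right') for c, uu in zip(cdf, u)])
    below = np.clip(inds - 1, 0, cdf.shape[-1] - 1)
    above = np.clip(inds, 0, cdf.shape[-1] - 1)
    cdf0, cdf1 = np.take_along_axis(cdf, below, -1), np.take_along_axis(cdf, above, -1)
    bin0, bin1 = np.take_along_axis(bins, below, -1), np.take_along_axis(bins, above, -1)
    denom = np.where(cdf1 - cdf0 < 1e-5, 1.0, cdf1 - cdf0)
    t = (u - cdf0) / denom                            # position inside the chosen interval
    return bin0 + t * (bin1 - bin0)

def cat_z_vals(z_vals, new_z_vals):
    """Merge coarse and up-sampled depths, keeping them sorted along each ray."""
    return np.sort(np.concatenate([z_vals, new_z_vals], -1), -1)
```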

- render_core

- input: rays_o, rays_d, z_vals, human_poses, cos_anneal_ratio=cos_anneal_ratio, step=step, is_train=is_train

- output: outputs

- compute dists, mid_z_vals, points, inner_mask, outer_mask, dirs, human_poses_pt

- if torch.sum(outer_mask) > 0: the background uses the NeRF network to obtain color and opacity:

alpha[outer_mask], sampled_color[outer_mask] = self.compute_density_alpha(points[outer_mask], dists[outer_mask], -dirs[outer_mask], self.outer_nerf)

- if torch.sum(inner_mask) > 0: the foreground uses the SDF and color networks to obtain the SDF, the color and the occlusion information:

alpha[inner_mask], gradients, feature_vector, inv_s, sdf = self.compute_sdf_alpha(points[inner_mask], dists[inner_mask], dirs[inner_mask], cos_anneal_ratio, step)

sampled_color[inner_mask], occ_info = self.color_network(points[inner_mask], gradients, -dirs[inner_mask], feature_vector, human_poses_pt[inner_mask], step=step)

- sampled_color: colors of the sample points

- occ_info: dict {'reflective': reflective, 'occ_prob': occ_prob}

gradient_error = (torch.linalg.norm(gradients, ord=2, dim=-1) - 1.0) ** 2 (gradient loss)

- else: gradient_error = torch.zeros(1)

- alpha -> weight

- sampled_color, weight —> color

- outputs = {'ray_rgb': color, 'gradient_error': gradient_error}

- if torch.sum(inner_mask) > 0: outputs['std'] = torch.mean(1 / inv_s); else: outputs['std'] = torch.zeros(1)

- if step < 1000:

- mask = torch.norm(points, dim=-1) < 1.2

outputs['sdf_pts'] = points[mask]

outputs['sdf_vals'] = self.sdf_network.sdf(points[mask])[..., 0]

- if self.cfg['apply_occ_loss']:

- if torch.sum(inner_mask) > 0: outputs['loss_occ'] = self.compute_occ_loss(occ_info, points[inner_mask], sdf, gradients, dirs[inner_mask], step), where compute_occ_loss computes the occlusion loss

- else: outputs['loss_occ'] = torch.zeros(1)

- if not is_train:

outputs.update(self.compute_validation_info(z_vals, rays_o, rays_d, weights, human_poses, step))

- return outputs
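The path from alpha to weights to color, together with the annealed cosine, is the NeuS rendering rule. This NumPy sketch assumes `compute_sdf_alpha` follows NeuS. `true_cos` stands for the dot product of the ray direction and the SDF gradient, and `anneal_val` mirrors `get_anneal_val`.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sdf_to_alpha(sdf, true_cos, dists, inv_s, cos_anneal_ratio):
    """NeuS-style discrete opacity from the SDF at section midpoints."""
    iter_cos = -(np.maximum(-true_cos * 0.5 + 0.5, 0.0) * (1.0 - cos_anneal_ratio)
                 + np.maximum(-true_cos, 0.0) * cos_anneal_ratio)
    next_sdf = sdf + iter_cos * dists * 0.5   # estimated SDF at the section ends
    prev_sdf = sdf - iter_cos * dists * 0.5
    prev_cdf, next_cdf = sigmoid(prev_sdf * inv_s), sigmoid(next_sdf * inv_s)
    return np.clip((prev_cdf - next_cdf + 1e-5) / (prev_cdf + 1e-5), 0.0, 1.0)

def composite(alpha, colors):
    """alpha: (R, S); colors: (R, S, 3) -> weights (R, S), ray colors (R, 3)."""
    ones = np.ones_like(alpha[:, :1])
    trans = np.cumprod(np.concatenate([ones, 1.0 - alpha + 1e-7], -1), -1)[:, :-1]
    weights = alpha * trans                   # alpha_i * prod_{j<i} (1 - alpha_j)
    return weights, np.sum(weights[..., None] * colors, 1)

def anneal_val(step, anneal_end):
    return 1.0 if anneal_end < 0 else min(1.0, step / anneal_end)
```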

- rgb_loss:

outputs['loss_rgb'] = self.compute_rgb_loss(outputs['ray_rgb'], train_ray_batch['rgbs'])

```python
cfg['rgb_loss'] = 'charbonier'
```
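The config string `'charbonier'` refers to the Charbonnier penalty, a smooth variant of L1. This sketch shows one common per-ray form, sqrt(||pred - gt||² + eps). The eps value and the per-ray (rather than per-channel) reduction are assumptions.

```python
import numpy as np

def charbonnier_rgb_loss(rgb_pr, rgb_gt, eps=1e-3):
    """Per-ray Charbonnier loss: sqrt(sum_c (pred_c - gt_c)^2 + eps)."""
    return np.sqrt(np.sum((rgb_pr - rgb_gt) ** 2, -1) + eps)
```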

render core: MLP output

Occ related: Occ prob and loss_occ

loss_occ

```python
inter_dist, inter_prob, inter_sdf = get_intersection(self.sdf_inter_fun, self.deviation_network, points[mask], reflective[mask], sn0=64, sn1=16)  # pn,sn-1
```

```python
def get_intersection(sdf_fun, inv_fun, pts, dirs, sn0=128, sn1=9):
    ...
```

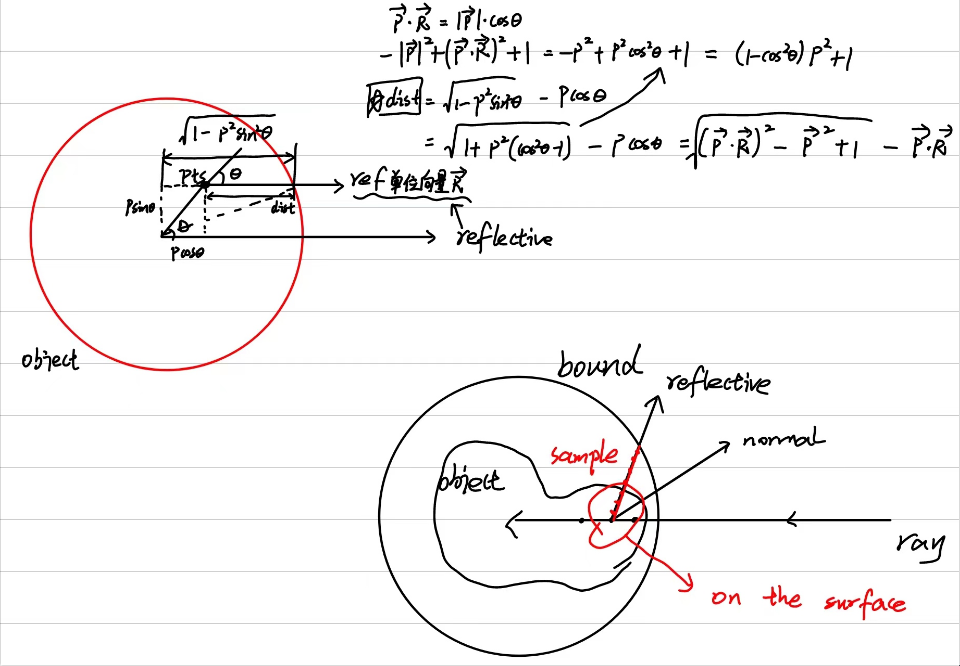

Distance dist from pts to the intersection of the reflected ray with the unit sphere:

```python
def get_sphere_intersection(pts, dirs):
    ...
```

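Starting from pts, sn points are sampled uniformly along the reflective direction over the length dist.

- compute the coordinates, SDF values and invs of the sn-1 midpoints between the sn samples

- select the points that lie on the object surface:

surface_mask = (cos_val < 0)

- compute the SDF values and weights of these points

- the sum of the weights of the surface samples is occ_prob_gt

- loss_occ is the L1 loss between occ_prob_gt and occ_prob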

```python
def get_weights(sdf_fun, inv_fun, z_vals, origins, dirs):
    ...
```

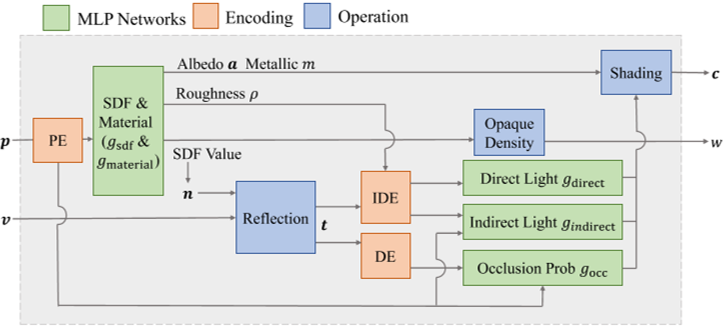
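This simplified NumPy sketch follows the occlusion target: exit distance to the unit sphere, uniform samples along the reflected ray, NeuS-style opacity restricted to `cos_val < 0` sections, and the sum of weights as occ_prob_gt. It deviates from the original in two ways. It uses the SDF at the sample endpoints with a constant `inv_s` instead of midpoint SDFs with a learned invs. It also uses a single sampling pass instead of the sn0/sn1 refinement. `sdf_fn` is a hypothetical callable.

```python
import numpy as np

def sphere_exit_distance(pts, dirs):
    """Distance from pts (inside the unit sphere) along unit dirs to the sphere."""
    b = np.sum(pts * dirs, -1)
    c = np.sum(pts * pts, -1) - 1.0
    return -b + np.sqrt(np.maximum(b * b - c, 0.0))  # positive root of t^2 + 2bt + c = 0

def occ_prob_gt(sdf_fn, inv_s, pts, dirs, sn=64):
    """Opacity accumulated along the reflected ray (target for occ_prob)."""
    dist = sphere_exit_distance(pts, dirs)                       # (N,)
    z = np.linspace(0.0, 1.0, sn)[None, :] * dist[:, None]      # (N, sn) uniform depths
    sdf = sdf_fn(pts[:, None, :] + z[..., None] * dirs[:, None, :])  # (N, sn)
    prev_sdf, next_sdf = sdf[:, :-1], sdf[:, 1:]
    cos_val = (next_sdf - prev_sdf) / np.maximum(z[:, 1:] - z[:, :-1], 1e-5)
    prev_cdf = 1.0 / (1.0 + np.exp(-prev_sdf * inv_s))
    next_cdf = 1.0 / (1.0 + np.exp(-next_sdf * inv_s))
    alpha = np.clip((prev_cdf - next_cdf) / (prev_cdf + 1e-5), 0.0, 1.0)
    alpha = np.where(cos_val < 0, alpha, 0.0)                    # surface_mask
    ones = np.ones_like(alpha[:, :1])
    trans = np.cumprod(np.concatenate([ones, 1.0 - alpha], -1), -1)[:, :-1]
    return np.sum(alpha * trans, -1)                             # sum of weights

def occ_loss(occ_prob, occ_prob_target):
    return np.mean(np.abs(occ_prob - occ_prob_target))         # L1
```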

NeROMaterialRenderer

MLP

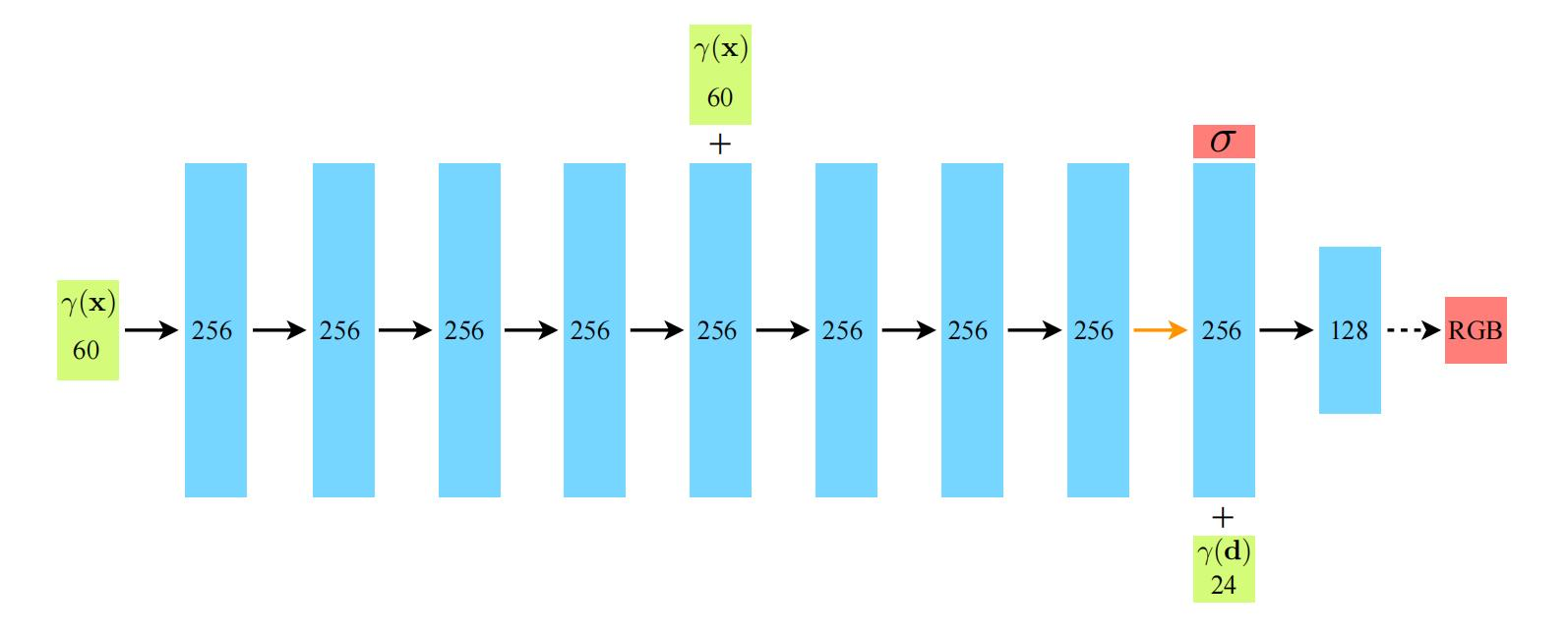

shader_network = MCShadingNetwork

- feats_network = MaterialFeatsNetwork

- 51—>256—>256—>256—>256+51—>256—>256—>256—>256

- 51: pts = get_embedder(8,3)(x) = 8 x 2 x 3 + 3 = 51

- metallic_predictor

- 259—>256—>256—>256—>1

- 259 = 256(feature) + 3(pts)

- roughness_predictor

- 259—>256—>256—>256—>1

- albedo_predictor

- 259—>256—>256—>256—>3

- outer_light

- 144—>256—>256—>256—>3

- 144 = 72 x 2 = torch.cat([outer_enc, sphere_pts], -1)

- outer_enc = self.sph_enc(directions, 0) = 72

- sphere_pts = self.sph_enc(sphere_pts, 0) = 72

- human_light

- 24—>256—>256—>256—>4

- 24: pos_enc = IPE(mean, var, 0, 6)  # 2*2*6

- inner_light

- 123(51 + 72)—>256—>256—>256—>3

- 123 = torch.cat([pos_enc, dir_enc], -1)

- pos_enc = self.pos_enc(points) = 51

- self.pos_enc = get_embedder(8, 3)

- dir_enc = self.sph_enc(reflections, 0) = 72

| MLP | Encoding | in_dims | out_dims | layer | neurons |
|---|---|---|---|---|---|
| light_pts | single parameter | … | … | … | … |
| MCShadingNetwork | … | … | … | … | … |
| feats_network | VanillaFrequency | 8 x 2 x 3 + 3 = 51 | 256 | 8 | 256 |
| metallic_predictor | None | 256 + 3 = 259 | 1 | 4 | 256 |
| roughness_predictor | None | 256 + 3 = 259 | 1 | 4 | 256 |
| albedo_predictor | None | 256 + 3 = 259 | 3 | 4 | 256 |
| outer_light | IDE | 72 + 72 = 144 | 3 | 4 | 256 |
| human_light | IPE | 2 x 2 x 6 = 24 | 4 | 4 | 256 |
| inner_light | VanillaFrequency+IDE | 51 + 72 = 123 | 3 | 4 | 256 |
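`IPE(mean, var, 0, 6)` matches mip-NeRF's integrated positional encoding. A Gaussian's mean and variance are scaled by 2^k and 4^k, and sin/cos are damped by exp(-var/2). The width 24 = 2 x 2 x 6 implies a 2-D input with no identity term. This sketch assumes that reading; where `mean` and `var` come from is not specified here.

```python
import numpy as np

def integrated_pos_enc(mean, var, min_deg, max_deg):
    """mean, var: (..., d) -> (..., 2 * d * (max_deg - min_deg))."""
    scales = 2.0 ** np.arange(min_deg, max_deg)                       # (L,)
    y = (mean[..., None, :] * scales[:, None]).reshape(*mean.shape[:-1], -1)
    y_var = (var[..., None, :] * scales[:, None] ** 2).reshape(*var.shape[:-1], -1)
    damp = np.exp(-0.5 * y_var)                                       # E[sin] of a Gaussian
    return np.concatenate([np.sin(y) * damp, np.sin(y + 0.5 * np.pi) * damp], -1)
```

With zero variance this reduces to the ordinary sin/cos encoding. Large variance drives the features toward zero, which is how IPE suppresses frequencies that a blurry input cannot resolve.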

Render

_init_geometry

- self.mesh = open3d.io.read_triangle_mesh(self.cfg['mesh']): reads the mesh obtained in Stage 1

- self.ray_tracer = raytracing.RayTracer(np.asarray(self.mesh.vertices), np.asarray(self.mesh.triangles)): builds the ray tracer used to obtain intersections, face_normals and depth from rays_o and rays_d
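To illustrate what the tracer returns (intersection, face normal, depth), here is a brute-force Möller–Trumbore stand-in for a single ray. It does not use the `raytracing` package's API, and it is far slower than an accelerated tracer. The normal is the geometric face normal given by the triangle winding.

```python
import numpy as np

def trace_ray(o, d, vertices, triangles, eps=1e-9):
    """Closest hit of ray o + t d against all triangles, or None."""
    v0, v1, v2 = (vertices[triangles[:, i]] for i in range(3))
    e1, e2 = v1 - v0, v2 - v0
    p = np.cross(d, e2)
    det = np.einsum('ij,ij->i', e1, p)
    valid = np.abs(det) > eps                      # skip rays parallel to a face
    inv_det = np.where(valid, 1.0 / np.where(valid, det, 1.0), 0.0)
    s = o - v0
    u = np.einsum('ij,ij->i', s, p) * inv_det      # barycentric u
    q = np.cross(s, e1)
    v = (q @ d) * inv_det                          # barycentric v
    t = np.einsum('ij,ij->i', e2, q) * inv_det     # depth along the ray
    hit = valid & (u >= 0) & (v >= 0) & (u + v <= 1) & (t > eps)
    if not hit.any():
        return None
    k = np.argmin(np.where(hit, t, np.inf))        # nearest intersection
    n = np.cross(e1[k], e2[k])
    return o + t[k] * d, n / np.linalg.norm(n), t[k]
```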

_init_dataset

- parse_database_name returns self.database = GlossyRealDatabase(self.cfg['database_name'])

- get_database_split: self.train_ids, self.test_ids = img_ids[1:], img_ids[:1]

- if is_train:

- build_imgs_info: train and test, each returning {'imgs': images, 'Ks': Ks, 'poses': poses}

- imgs_info_to_torch: train and test

- _construct_ray_batch(train_imgs_info): converts train_imgs_info to ray_batch

- tbn = imn

- _shuffle_train_batch

```python
def _init_dataset(self, is_train):
    ...
```

_init_shader

- self.cfg['shader_cfg']['is_real'] = self.cfg['database_name'].startswith('real')

- self.shader_network = MCShadingNetwork(self.cfg['shader_cfg'], lambda o,d: self.trace(o,d)): the MLP network

- forward

- if is_train: self.train_step(step)

Relight

```shell
python relight.py --blender <path-to-your-blender> \
```

```python
import argparse
```

After running python relight.py, a subprocess runs the following command in cmd:

```
F:\Blender\blender.exe --background  # render without the UI, running in the background
```
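The launch can be sketched with `subprocess`. The sketch relies on Blender's command-line flags `--background` (no UI) and `--python` (run a script), plus Blender's convention that arguments after `--` are left for the script. The exact arguments relight.py passes are truncated above, so the helper names and `--mesh` are hypothetical.

```python
import subprocess

def build_blender_cmd(blender_path, script_path, script_args):
    """Headless Blender run of a Python script; args after '--' go to the script."""
    return [blender_path, '--background', '--python', script_path, '--', *script_args]

def script_args_from_argv(argv):
    """Inside the Blender-side script: keep only the arguments after '--'."""
    return argv[argv.index('--') + 1:] if '--' in argv else []

def run_blender(blender_path, script_path, script_args):
    # check=True raises CalledProcessError if Blender exits with a non-zero status
    return subprocess.run(build_blender_cmd(blender_path, script_path, script_args), check=True)
```

Inside `blender_backend/relight_backend.py`, `script_args_from_argv(sys.argv)` would then recover the script's own options for `argparse`.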

Python scripts run inside Blender:

blender_backend/relight_backend.py:

import bpy

blender_backend/blender_utils.py: