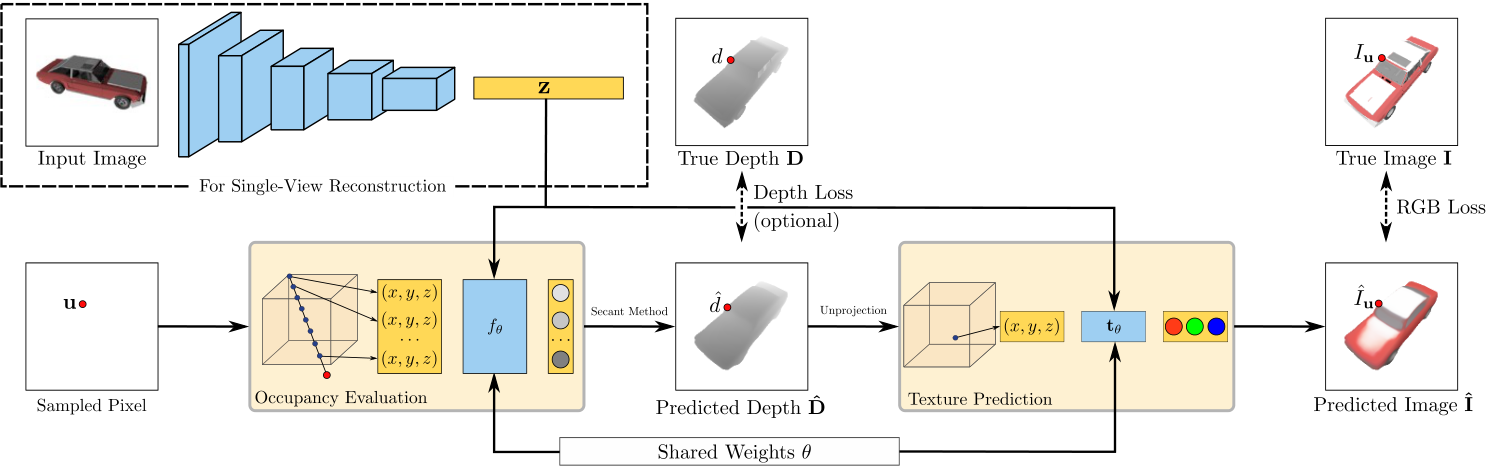

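Compared with earlier supervised reconstruction (novel view synthesis) approaches, NeRF-based reconstruction is self-supervised. Learning-based MVS needs ground-truth depth maps as supervision, and earlier methods, including generative ones, need 3D model information (PointCloud, Voxel, Mesh) as supervision. NeRF-based methods reconstruct an object from images using only the images themselves as supervision.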

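NeRF uses an MLP to predict a density/SDF value for every point in 3D space. This makes it a continuous representation (in theory; in practice finite floating-point precision still makes it discrete). At least in 3D, it does not show the severe jaggedness/aliasing that discrete representations (voxels) produce.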
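To make "continuous" concrete, the sketch below queries a coordinate MLP at arbitrary floating-point positions after a NeRF-style positional encoding $\gamma(p) = (\sin(2^k \pi p), \cos(2^k \pi p))_{k=0}^{L-1}$. It is a minimal NumPy illustration, not the original NeRF code: the weights are random, the network is a hypothetical two-layer ReLU MLP, and the raw output stands in for either a density or an SDF value.

```python
import numpy as np

def positional_encoding(p, num_freqs=10):
    # gamma(p): sin/cos of 2^k * pi * p for k = 0..L-1, applied to every coordinate
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi                    # (L,)
    angles = p[..., None, :] * freqs[:, None]                         # (N, L, 3)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)   # (N, L, 6)
    return enc.reshape(*p.shape[:-1], -1)                             # (N, 6L)

def init_mlp(in_dim, hidden=64, out_dim=1, seed=0):
    # Hypothetical two-layer MLP with He-style random initialisation
    rng = np.random.default_rng(seed)
    return [rng.normal(scale=np.sqrt(2 / in_dim), size=(in_dim, hidden)), np.zeros(hidden),
            rng.normal(scale=np.sqrt(2 / hidden), size=(hidden, out_dim)), np.zeros(out_dim)]

def query_field(params, p, num_freqs=10):
    # Any real-valued 3D point can be queried; there is no grid to snap to
    w1, b1, w2, b2 = params
    h = np.maximum(positional_encoding(p, num_freqs) @ w1 + b1, 0.0)  # ReLU
    return h @ w2 + b2   # raw field value, e.g. density (NeRF) or SDF (NeuS)
```

With `num_freqs=10` the encoding has 60 dimensions per point, so `init_mlp(60)` matches it.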

NeRF-based self-supervised 3D Reconstruction

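- Images and camera poses (COLMAP)

- NeRF (NeuS) or 3DGS (SuGaR)

- Loss functions: compare pixel color, depth and normals, plus an SDF-gradient regularizer (Eikonal term; see Eikonal Equation and SDF - Lin’s site)

- Point-cloud post-processing: depending on the use case (3D printing, finite-element simulation, game assets), there are many target formats: mesh / FE model / AMs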
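The loss terms listed above can be sketched as an L1 color loss plus an Eikonal term $\mathbb{E}_x(\lVert \nabla f(x) \rVert - 1)^2$, which pushes the network $f$ towards a true signed distance function. This is a minimal NumPy illustration under stated assumptions: the gradient is taken by central finite differences (real implementations use autodiff), the SDF is passed in as a plain function, and `lambda_eik` is a hypothetical weight.

```python
import numpy as np

def numerical_gradient(sdf_fn, x, eps=1e-4):
    # Central finite differences along each axis; x has shape (N, 3)
    grads = np.zeros_like(x)
    for i in range(x.shape[-1]):
        offset = np.zeros(x.shape[-1])
        offset[i] = eps
        grads[:, i] = (sdf_fn(x + offset) - sdf_fn(x - offset)) / (2 * eps)
    return grads

def eikonal_loss(sdf_fn, x):
    # A valid SDF has unit-norm gradient everywhere (away from the medial axis)
    g = numerical_gradient(sdf_fn, x)
    return np.mean((np.linalg.norm(g, axis=-1) - 1.0) ** 2)

def color_loss(pred_rgb, gt_rgb):
    # L1 photometric loss between rendered and observed pixel colors
    return np.mean(np.abs(pred_rgb - gt_rgb))

def total_loss(pred_rgb, gt_rgb, sdf_fn, x, lambda_eik=0.1):
    return color_loss(pred_rgb, gt_rgb) + lambda_eik * eikonal_loss(sdf_fn, x)
```

For an exact sphere SDF $\lVert x \rVert - 1$ the Eikonal loss is close to 0, while the scaled function $2(\lVert x \rVert - 1)$ has the same zero level set but a loss of about 1, which is exactly the kind of non-metric field the term penalizes.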

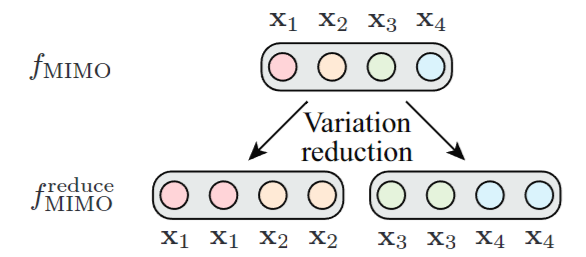

3D Representation Methods: A Survey | PDF

A Review on Deep Learning Approaches for 3D Data Representations in Retrieval and Classifications

Tools

Dataset processing

- NeuS/preprocess_custom_data at main · Totoro97/NeuS

- Neuralangelo

Rendering a 360° video from camera poses:

https://github.com/hbb1/2d-gaussian-splatting/blob/main/render.py: see generate_path there. Pass in the training cameras and it fits a 360° path to render along.
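The idea of fitting a 360° path to the training cameras can be sketched independently of that repository (this is not its generate_path code). The assumptions: camera-to-world matrices in OpenCV/COLMAP convention (x right, y down, z forward), an object-centric capture, the look-at target taken as the least-squares point closest to all optical axes, and the orbit's radius and height taken as averages over the training cameras. All function names are hypothetical.

```python
import numpy as np

def normalize(v):
    return v / np.linalg.norm(v)

def focus_point(c2ws):
    # Least-squares point closest to all optical axes (camera z-axes)
    A, b = np.zeros((3, 3)), np.zeros(3)
    for c2w in c2ws:
        o, d = c2w[:3, 3], normalize(c2w[:3, 2])
        P = np.eye(3) - np.outer(d, d)   # projector onto the plane orthogonal to the ray
        A += P
        b += P @ o
    return np.linalg.solve(A, b)

def look_at(eye, target, up):
    # OpenCV-style camera-to-world: columns are right, down, forward
    forward = normalize(target - eye)
    right = normalize(np.cross(forward, up))
    down = np.cross(forward, right)
    c2w = np.eye(4)
    c2w[:3, :3] = np.stack([right, down, forward], axis=1)
    c2w[:3, 3] = eye
    return c2w

def circular_path(c2ws, n_frames=120):
    centers = c2ws[:, :3, 3]
    up = normalize(-c2ws[:, :3, 1].mean(axis=0))   # camera y points down in OpenCV
    target = focus_point(c2ws)
    offsets = centers - target
    heights = offsets @ up
    radial = offsets - np.outer(heights, up)
    radius = np.linalg.norm(radial, axis=1).mean()
    u = normalize(radial[0])                        # in-plane basis vector
    v = np.cross(up, u)
    h = heights.mean()
    poses = [look_at(target + h * up + radius * (np.cos(a) * u + np.sin(a) * v), target, up)
             for a in np.linspace(0, 2 * np.pi, n_frames, endpoint=False)]
    return np.stack(poses)
```

Averaging the cameras' "up" vectors is more robust than taking a PCA normal of the camera centers, because it fixes the sign and keeps rendered frames upright.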

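SuperSplat is a free, open-source tool for inspecting and editing 3D Gaussian Splats. It is built on web technologies and runs in the browser, so nothing needs to be downloaded or installed.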

https://playcanvas.com/supersplat/editor

playcanvas/supersplat: 3D Gaussian Splat Editor

Extracting surfaces from SDF queries

SarahWeiii/diso: Differentiable Iso-Surface Extraction Package (DISO)

Comparison of DMTet, FlexiCubes, DiffMC and DiffDMC
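All of these extractors start from the same primitive: sample the SDF on a grid, find grid edges whose endpoints have opposite signs, and place a vertex at the linearly interpolated zero crossing. The sketch below shows only that vertex-placement step in NumPy and returns a surface point cloud; it is not DISO's API and does not triangulate (marching-cubes-style methods additionally connect these vertices into faces). Function names and the grid bounds are assumptions.

```python
import numpy as np

def sdf_grid(sdf_fn, resolution=64, bound=1.5):
    # Sample the SDF on a regular grid covering [-bound, bound]^3
    xs = np.linspace(-bound, bound, resolution)
    pts = np.stack(np.meshgrid(xs, xs, xs, indexing="ij"), axis=-1)
    return xs, sdf_fn(pts.reshape(-1, 3)).reshape(resolution, resolution, resolution)

def zero_crossings(xs, values):
    # For each grid edge with a sign change, solve a + t (b - a) = 0 for t in (0, 1)
    grid = np.stack(np.meshgrid(xs, xs, xs, indexing="ij"), axis=-1)
    points = []
    for axis in range(3):
        lo, hi = np.arange(values.shape[axis] - 1), np.arange(1, values.shape[axis])
        a, b = np.take(values, lo, axis=axis), np.take(values, hi, axis=axis)
        pa, pb = np.take(grid, lo, axis=axis), np.take(grid, hi, axis=axis)
        mask = (a * b) < 0
        t = (a[mask] / (a[mask] - b[mask]))[:, None]
        points.append(pa[mask] + t * (pb[mask] - pa[mask]))
    return np.concatenate(points, axis=0)
```

Because every extracted point lies on a grid edge that straddles the surface, its distance to the true surface is bounded by the grid spacing; differentiable variants such as DiffMC make this interpolation differentiable with respect to the SDF values.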

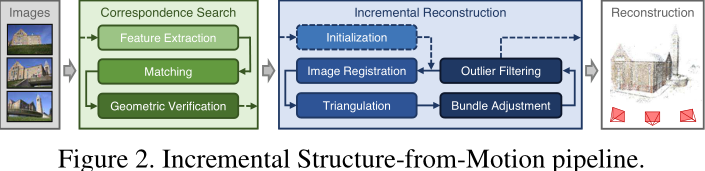

COLMAP

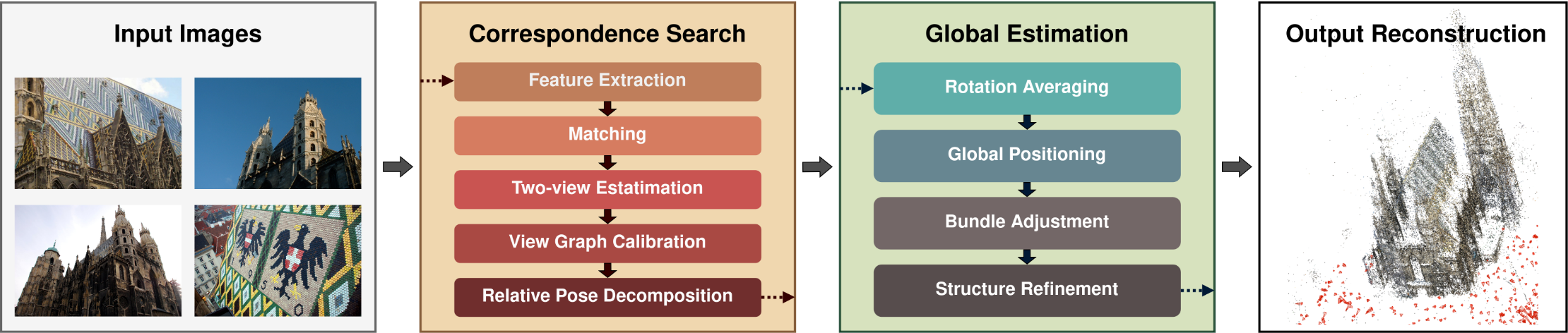

CVPR 2017 Tutorial - Large-scale 3D Modeling from Crowdsourced Data

3D modeling pipeline: demuc.de/tutorials/cvpr2017/introduction1.pdf

- Data Association: finding correspondences between images

  - Descriptors: good image features should have the following properties: Repeatability, Saliency, Compactness and efficiency, Locality. There are also challenges: features must be detected at different scales (sizes), different orientations, and even under different lighting conditions.

    - Global image descriptors

      - Color histograms used as descriptors

      - GIST feature, e.g. several frequency bands and orientations for each image location; tiling of the image (for example 4x4) at different resolutions; color histogram

    - Local image descriptors

      - SIFT detector (scale- and image-plane-rotation-invariant feature descriptor)

      - DSP-SIFT

      - BRIEF

      - ORB (Oriented FAST and Rotated BRIEF): FAST corner detector + rotated BRIEF descriptor

- 3D points & camera pose

- Dense 3D

- Model generation
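For the data-association step with local descriptors (SIFT and the like), a common baseline is nearest-neighbour matching filtered by Lowe's ratio test and a mutual-nearest-neighbour check. The NumPy sketch below assumes float descriptors compared by Euclidean distance (binary descriptors such as BRIEF/ORB would use Hamming distance instead); the 0.8 ratio is a typical but assumed threshold, and this is not COLMAP's matcher.

```python
import numpy as np

def match_descriptors(desc1, desc2, ratio=0.8):
    # Pairwise Euclidean distances, shape (N1, N2); desc2 needs at least 2 rows
    d = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=-1)
    order = np.argsort(d, axis=1)
    best, second = order[:, 0], order[:, 1]
    rows = np.arange(len(desc1))
    # Lowe's ratio test: the best match must be clearly better than the runner-up
    keep = d[rows, best] < ratio * d[rows, second]
    # Mutual check: desc1[i]'s best match must also prefer desc1[i]
    back = np.argmin(d, axis=0)
    keep &= back[best] == rows
    return np.stack([rows[keep], best[keep]], axis=1)   # (M, 2) index pairs
```

The ratio test is what makes matching usable on repetitive structures: when two candidates are almost equally close, the match is ambiguous and gets dropped rather than guessed.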

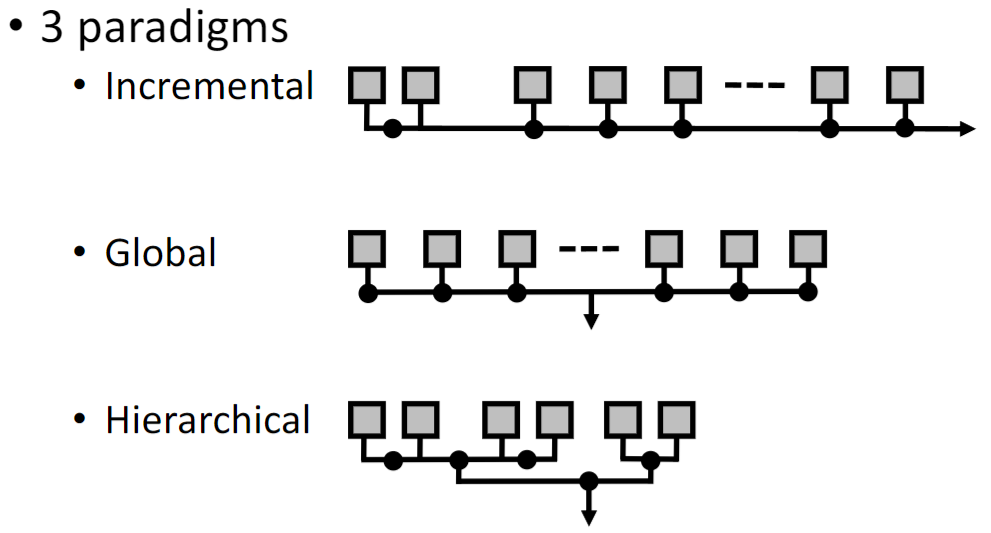

demuc.de/tutorials/cvpr2017/sparse-modeling.pdf: a full walkthrough of the incremental SfM pipeline

Main challenges:

- Watermarks, timestamps, frames (WTFs)

- Calibration: unknown focal length, image distortion

- Scale ambiguity: the absolute size of the scene cannot be determined

  - Use GPS EXIF tags for geo-registration

  - Use semantics to infer scale: assign a size based on semantics and priors

- Dynamic objects

- Repetitive structure (e.g. symmetric structures)

- Illumination change

- View selection (initialization views, next best view)
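Geo-registration with GPS EXIF tags resolves the scale ambiguity by fitting a similarity transform (scale $s$, rotation $R$, translation $t$) that maps SfM camera centers onto their GPS positions, $y_i \approx s R x_i + t$. A standard closed-form solution is Umeyama's method; the sketch below is a generic NumPy version of it, not COLMAP's implementation, and assumes the GPS coordinates have already been converted to a local metric frame (e.g. ENU).

```python
import numpy as np

def umeyama(src, dst):
    # Closed-form similarity transform with dst ≈ s * R @ src + t
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)            # cross-covariance of the centred point sets
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0                    # avoid returning a reflection
    R = U @ D @ Vt
    var_s = (xs ** 2).sum() / len(src)
    s = np.trace(np.diag(S) @ D) / var_s
    t = mu_d - s * R @ mu_s
    return s, R, t

def apply_similarity(s, R, t, points):
    return s * points @ R.T + t
```

Here `src` would be the SfM camera centers and `dst` the corresponding metric GPS positions; applying the result to the whole reconstruction gives it a real-world scale. With noisy GPS, wrapping this in RANSAC is advisable.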

COLMAP

colmap tutorial

Documentation: COLMAP 3.11.0.dev0 documentation

Windows pre-release: Releases · colmap/colmap
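The sparse-reconstruction steps from the COLMAP command-line tutorial (`feature_extractor`, `exhaustive_matcher`, `mapper`) can be scripted from Python. The sketch below only assembles the commands and runs them on request; the workspace layout (`images/`, `database.db`, `sparse/`) and the helper names are assumptions, and a `colmap` binary is assumed to be on `PATH`.

```python
import subprocess
from pathlib import Path

def colmap_sparse_commands(workspace, colmap_bin="colmap"):
    # Assumed layout: <workspace>/images (input), database.db, sparse/ (output)
    ws = Path(workspace)
    db, images, sparse = ws / "database.db", ws / "images", ws / "sparse"
    return [
        [colmap_bin, "feature_extractor", "--database_path", str(db), "--image_path", str(images)],
        [colmap_bin, "exhaustive_matcher", "--database_path", str(db)],
        [colmap_bin, "mapper", "--database_path", str(db), "--image_path", str(images),
         "--output_path", str(sparse)],
    ]

def run_colmap(workspace, dry_run=True):
    cmds = colmap_sparse_commands(workspace)
    if not dry_run:
        (Path(workspace) / "sparse").mkdir(parents=True, exist_ok=True)  # mapper writes here
        for cmd in cmds:
            subprocess.run(cmd, check=True)   # stop at the first failing step
    return cmds
```

`run_colmap("my_scene", dry_run=False)` would execute the pipeline; the default dry run just returns the command lists for inspection.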

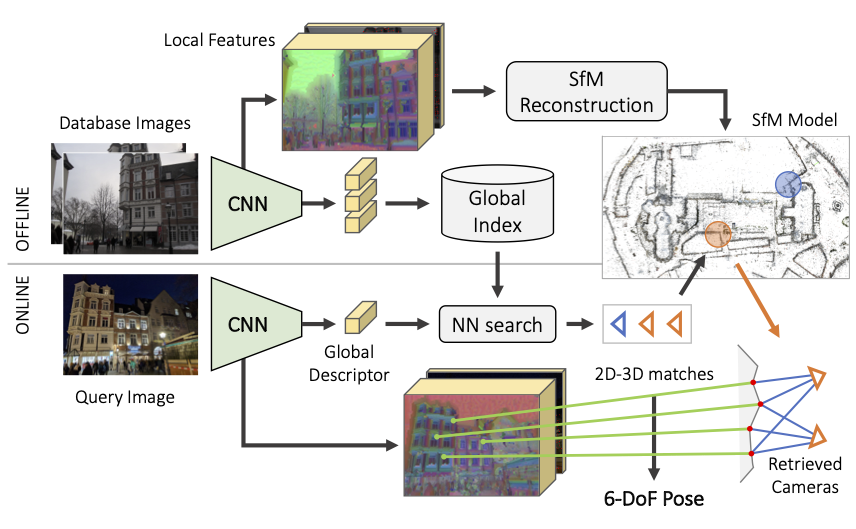

HLOC

cvg/Hierarchical-Localization: Visual localization made easy with hloc

From Coarse to Fine: Robust Hierarchical Localization at Large Scale

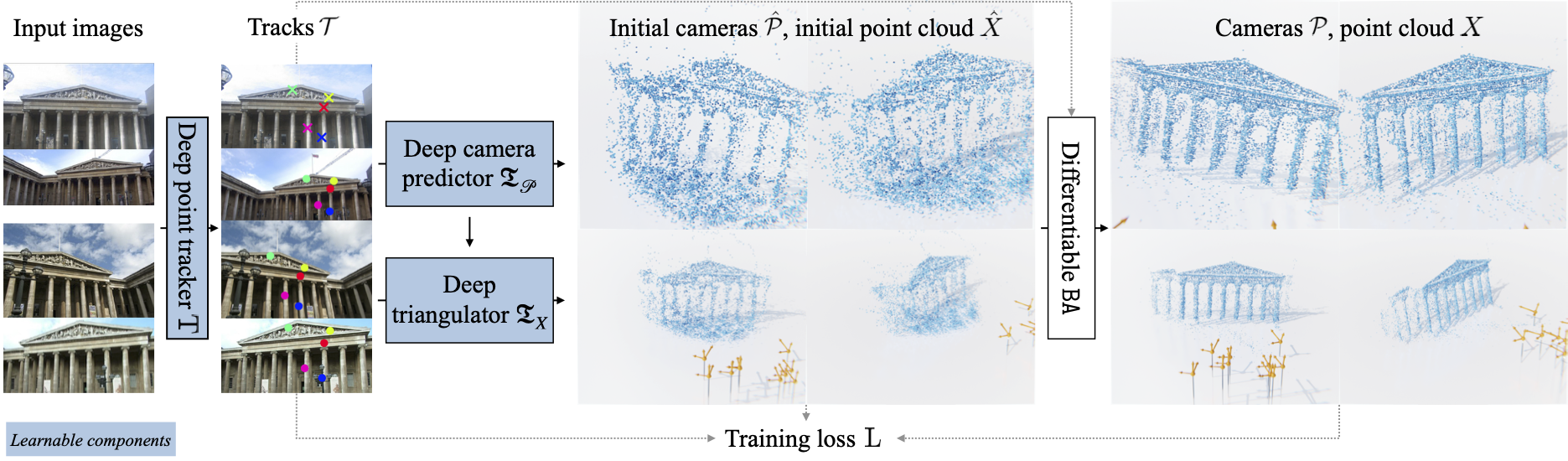

VGGSfM

VGGSfM: Visual Geometry Grounded Deep Structure From Motion

We are highly inspired by colmap, pycolmap, posediffusion, cotracker, and kornia.

GLOMAP

colmap/glomap: GLOMAP - Global Structured-from-Motion Revisited

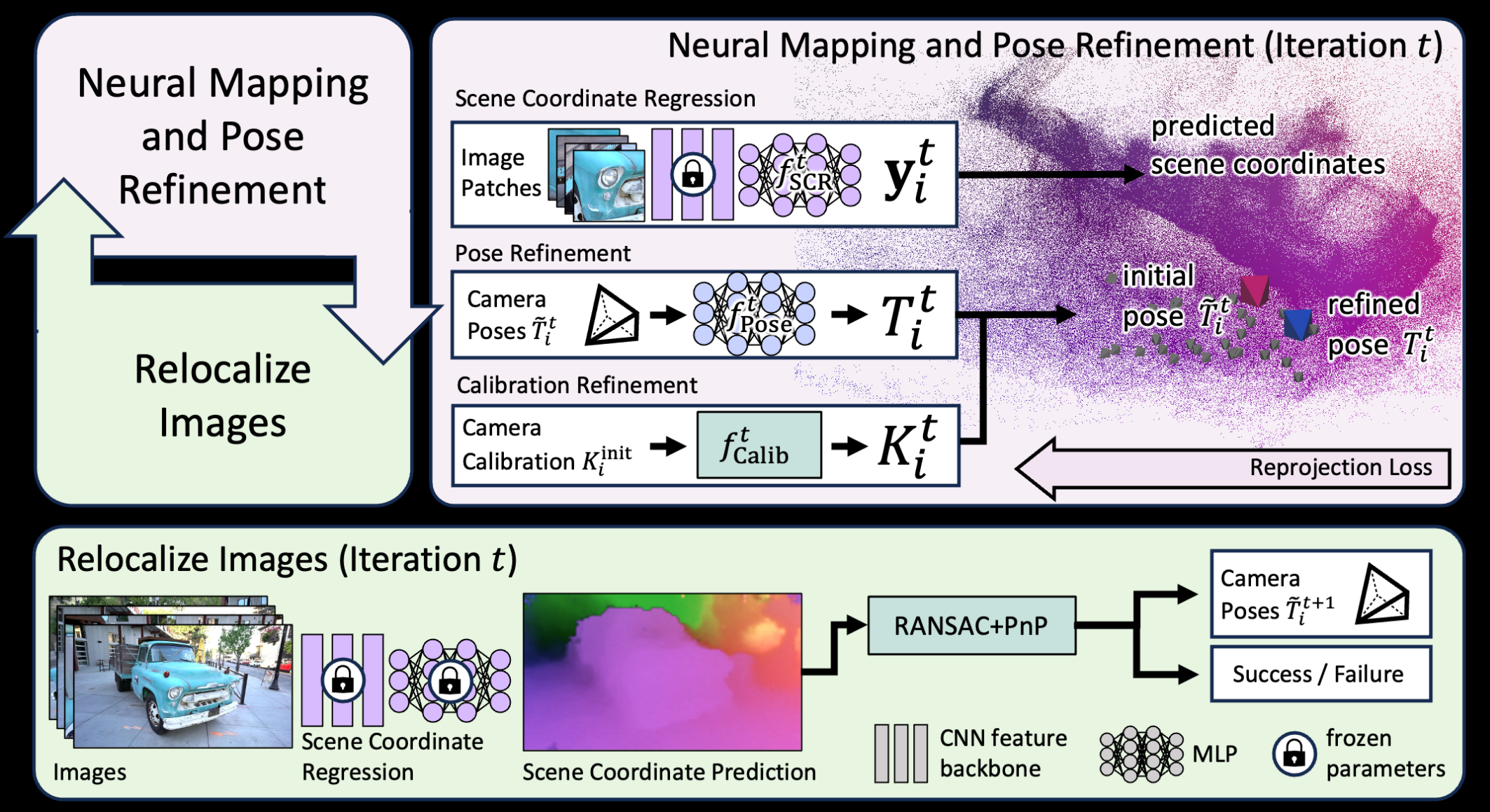

ACE Zero

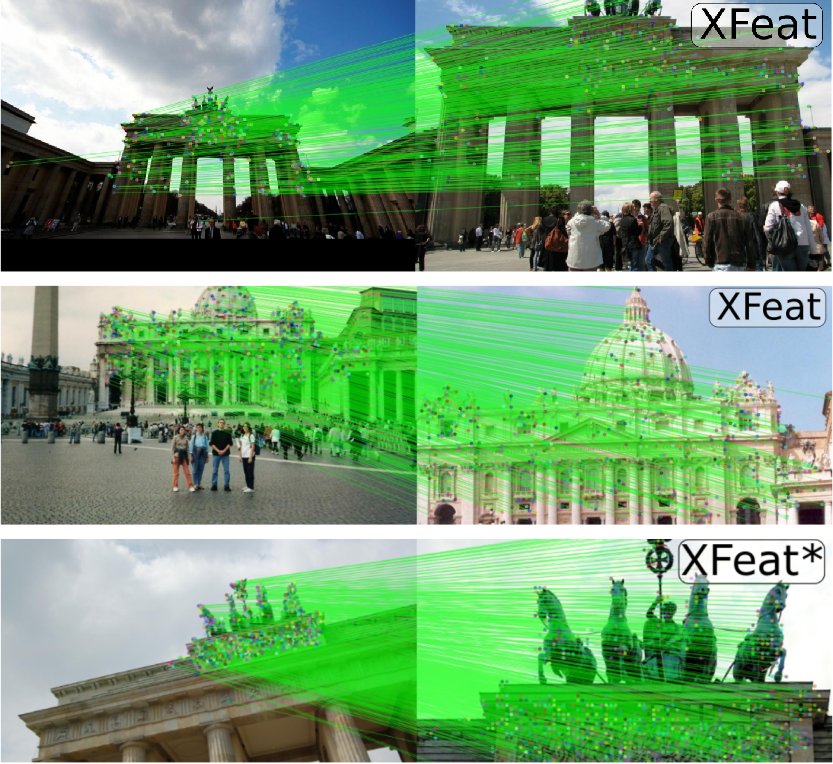

XFeat

Fast, lightweight feature extraction + matching

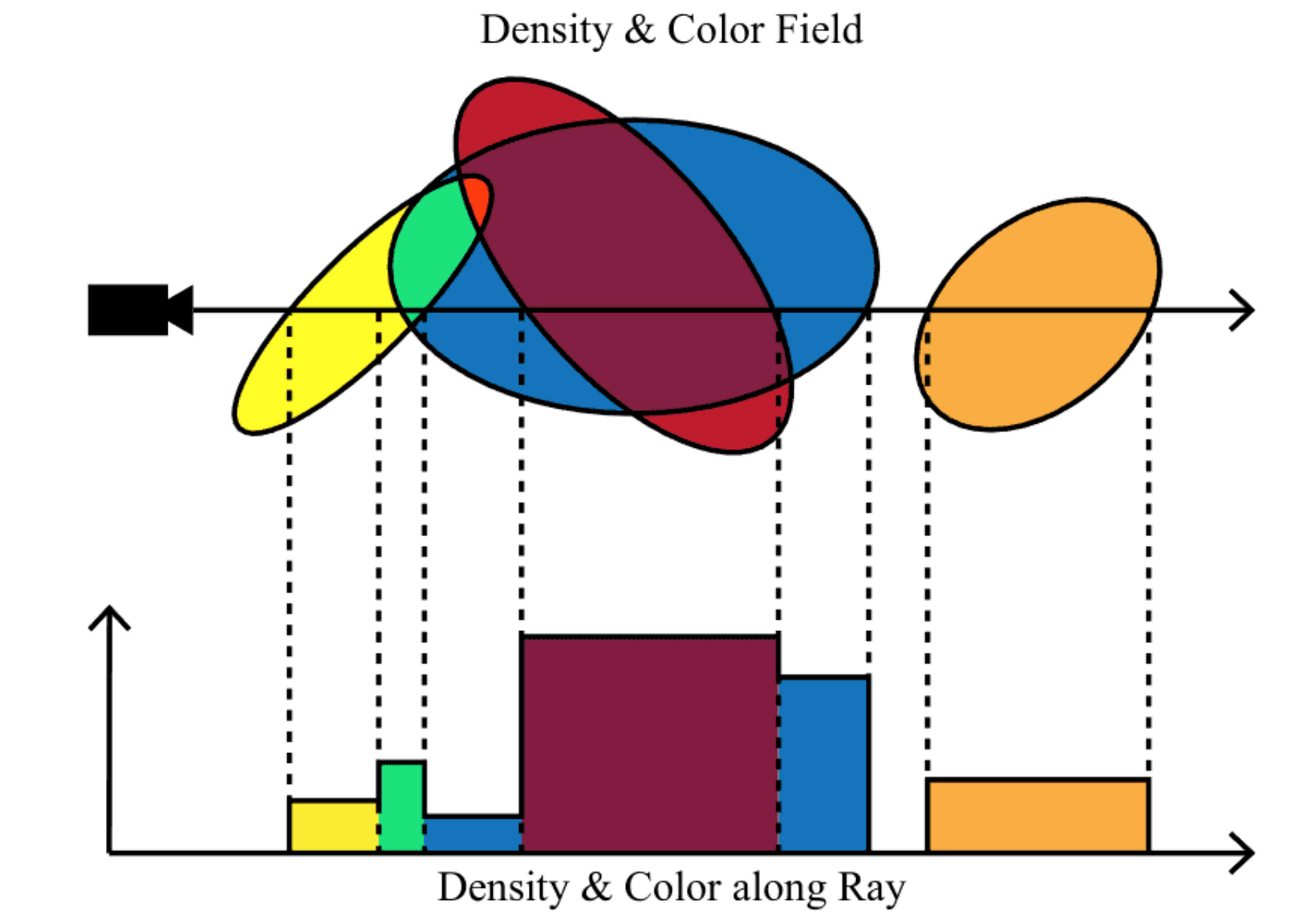

NeRF (VR+Field)

**Form neutral + definition pure**: NeRF alignment chart

NeRF-review | NeRF-Studio (code)

Baseline

Important Papers

| Year | Note | Overview | Description |

|---|---|---|---|

| 2020 | DVR |  |

|

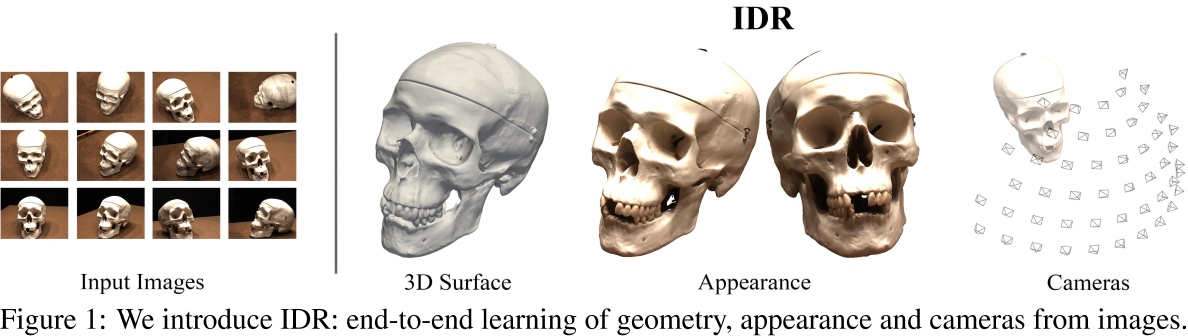

| 2020 | IDR |  |

|

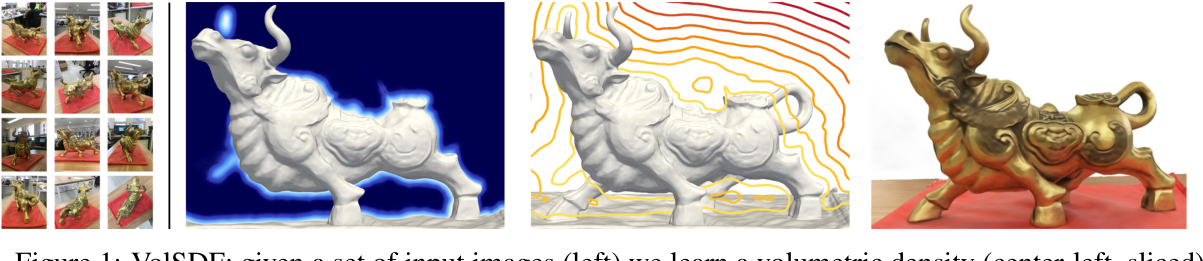

| 2021 | VolSDF |  |

|

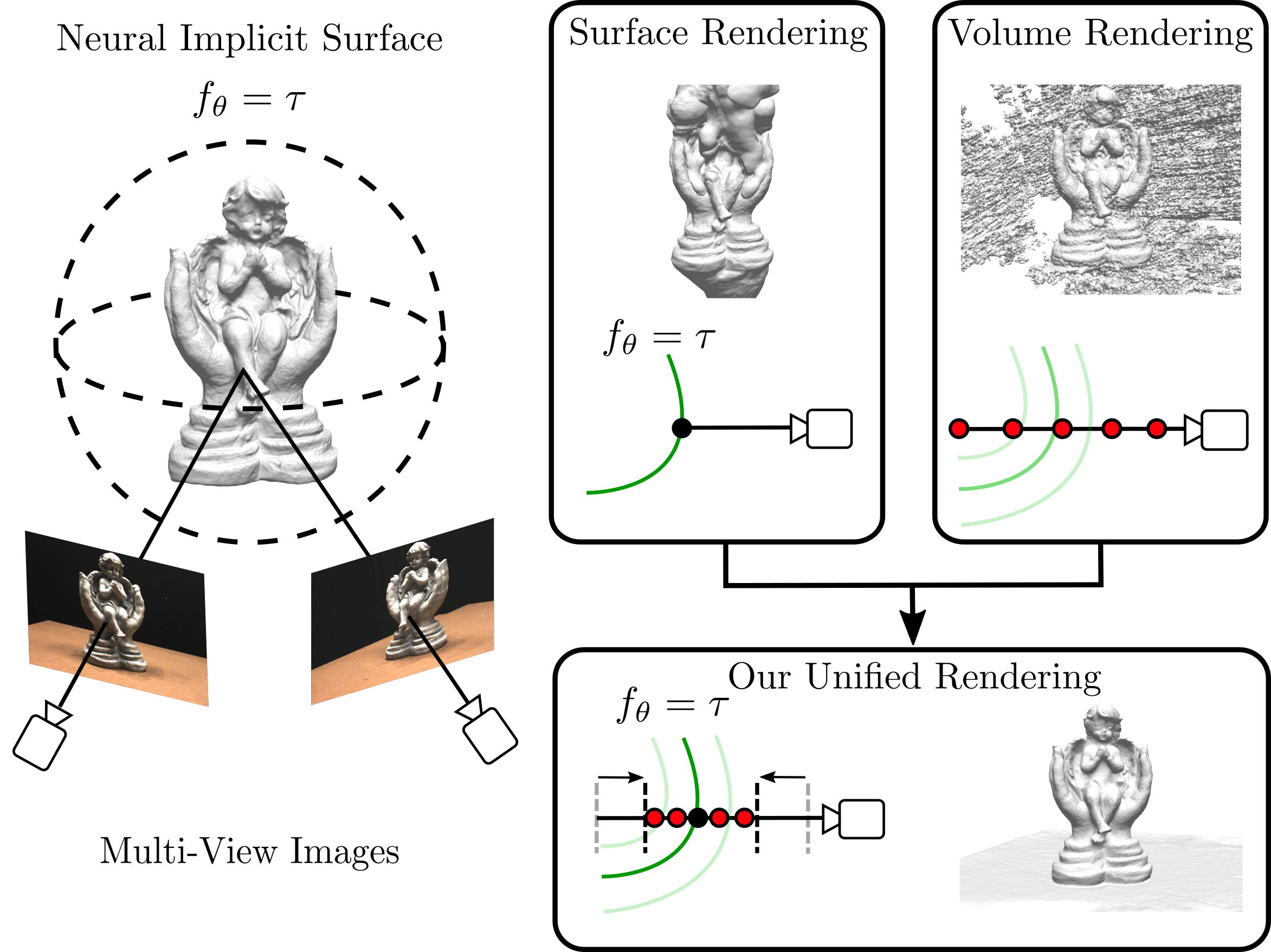

| 2021 | UNISURF |  |

|

| 2021 | NeuS |  |

|

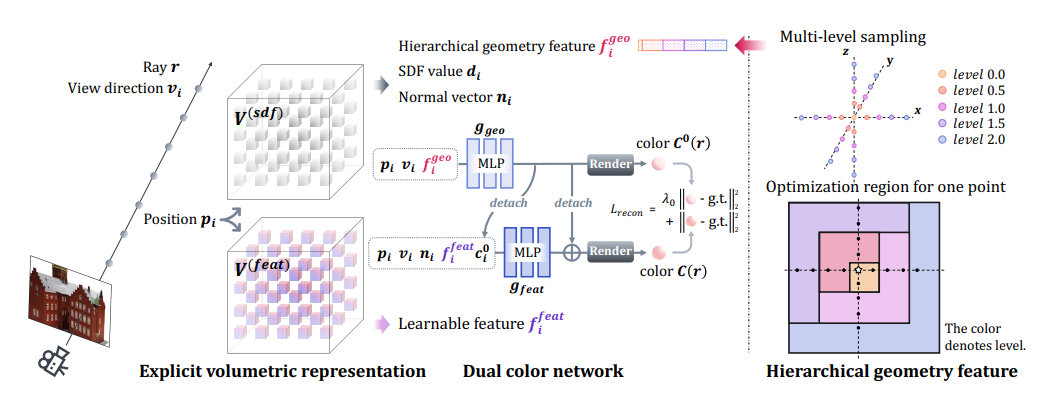

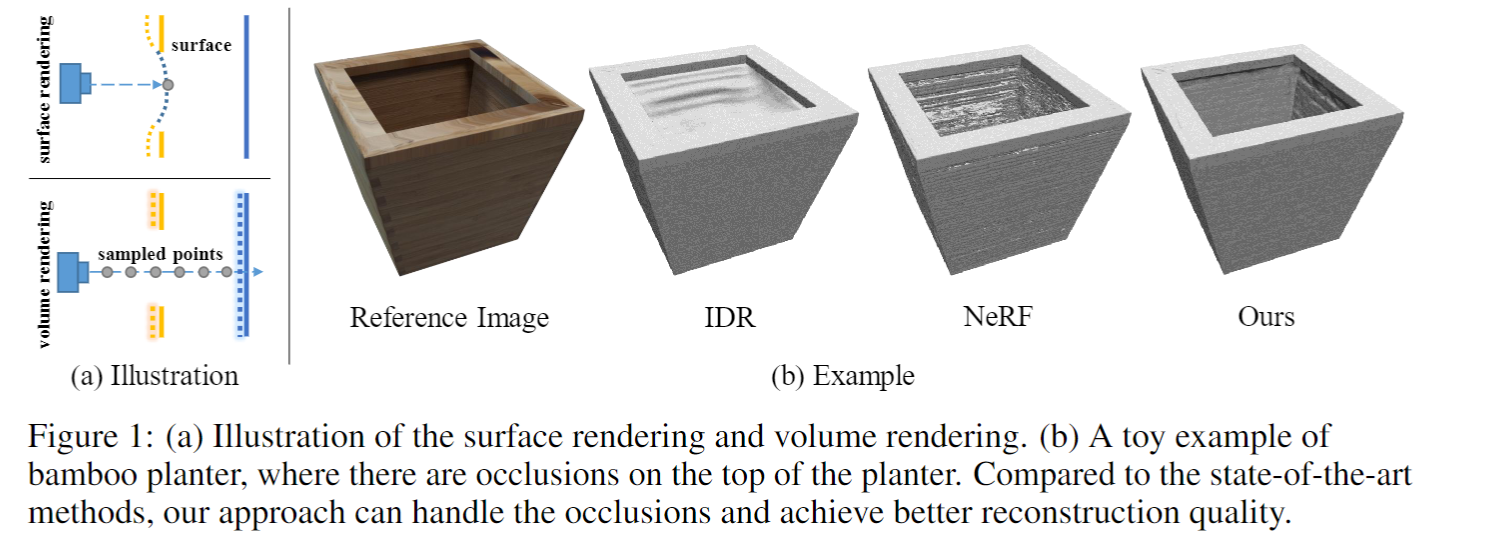

| 2022 | HF-NeuS | ||

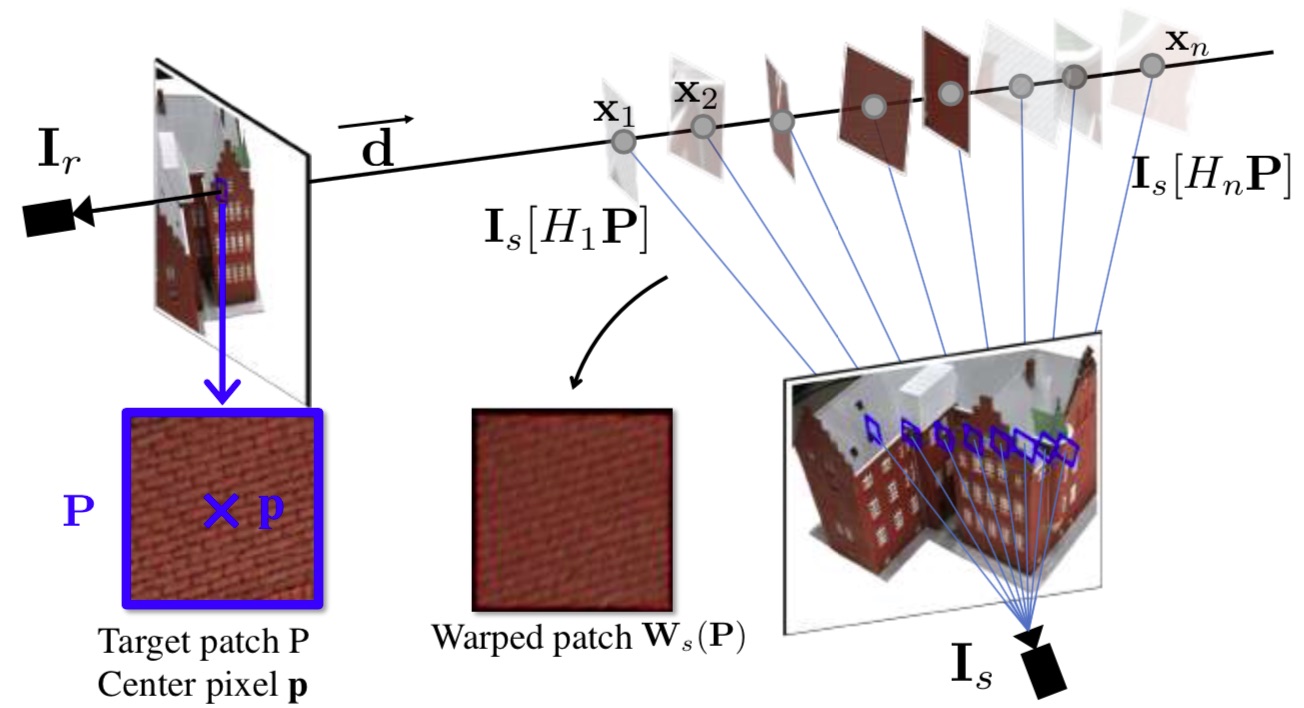

| 2022 | NeuralWarp |  |

a direct photo-consistency term |

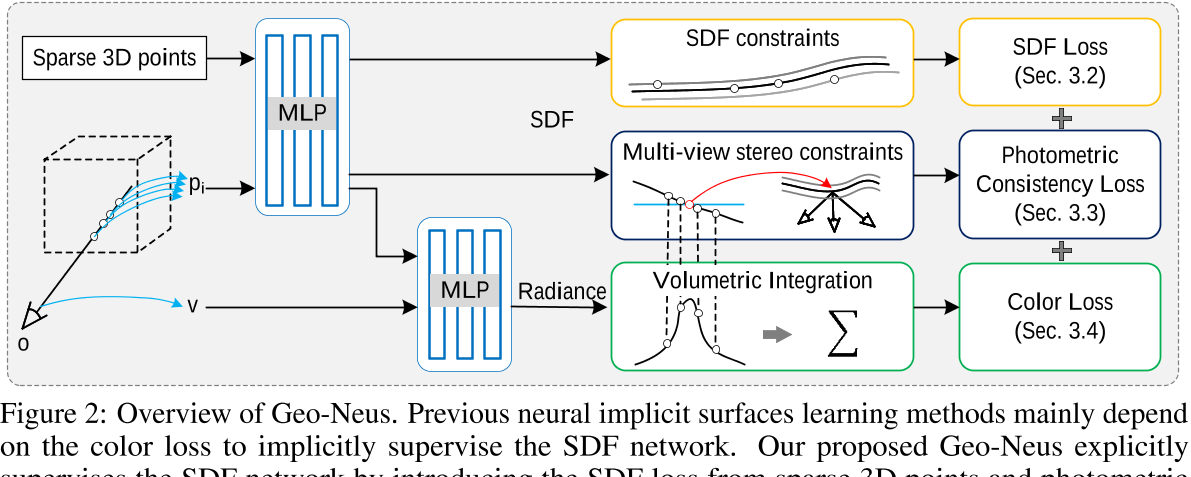

| 2022 | Geo-Neus |  |

验证了颜色渲染偏差,基于SFM稀疏点云的几何监督, 基于光度一致性监督进行几何一致性监督 |

| 2022 | Instant-NSR |  |

|

| 2022 | Neus-Instant-nsr-pl | No Paper!!! Just Code | |

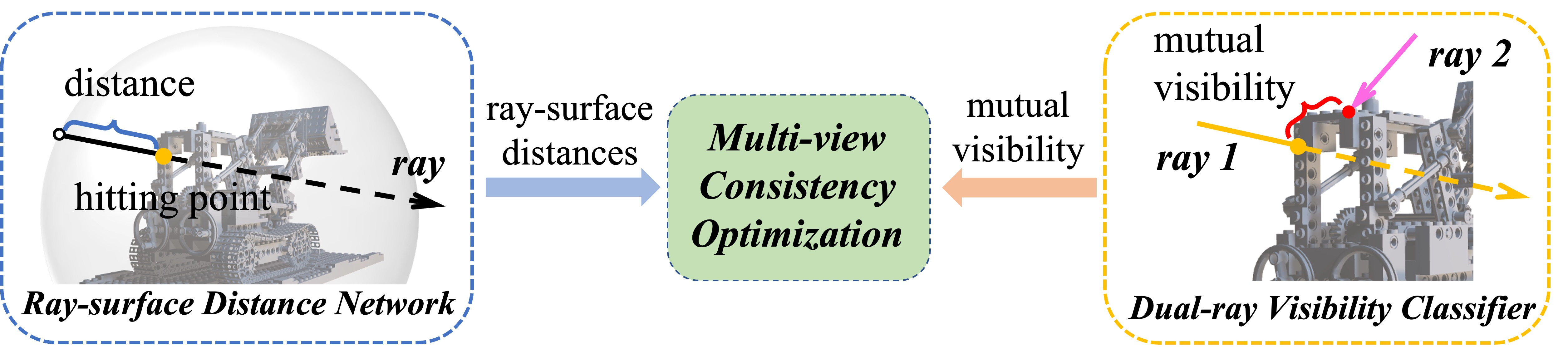

| 2023 | RayDF |  |

|

| 2023 | NISR |  |

|

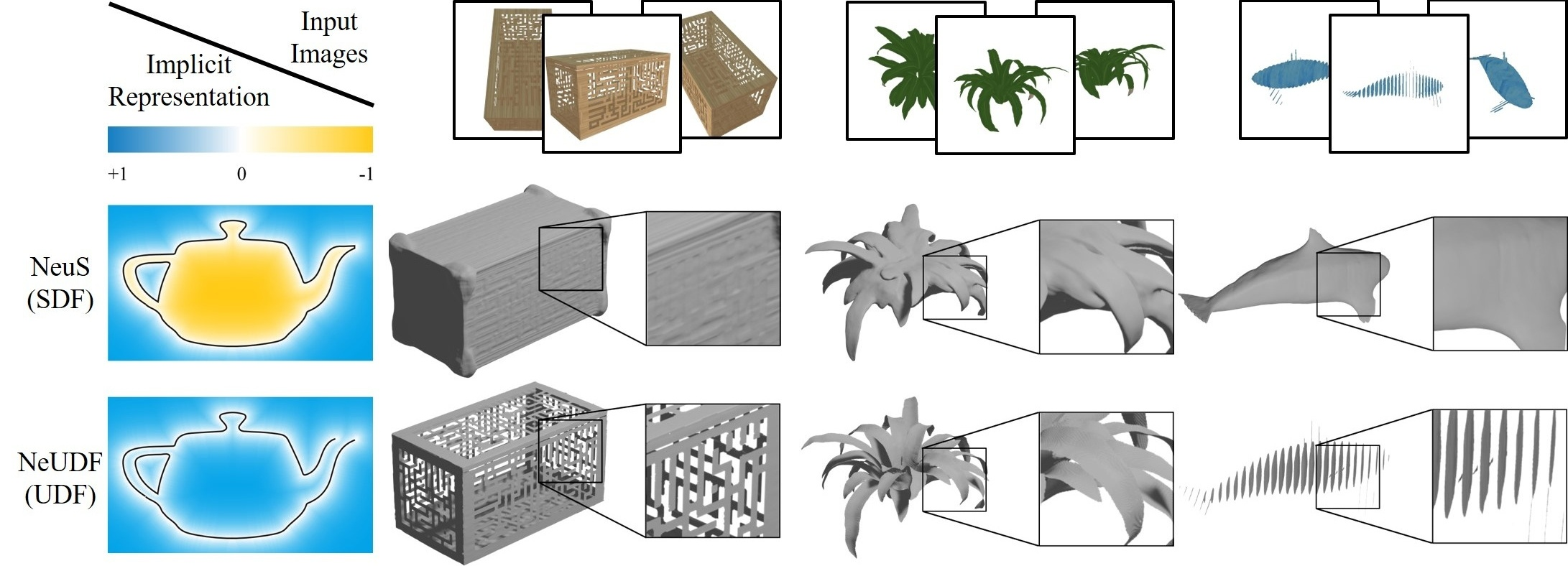

| 2023 | NeUDF |  |

|

| 2023 | LoD-NeuS |  |

|

| 2023 | D-NeuS |  |

|

| 2023 | Color-NeuS |  |

|

| 2023 | BakedSDF |  |

|

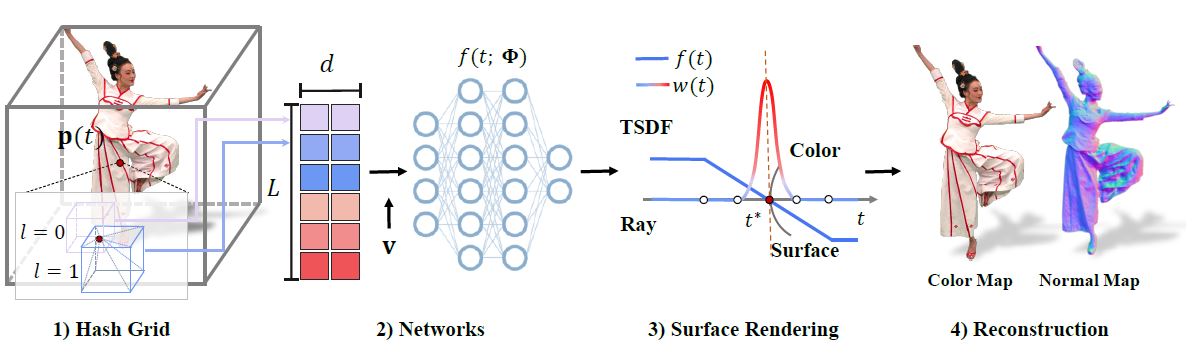

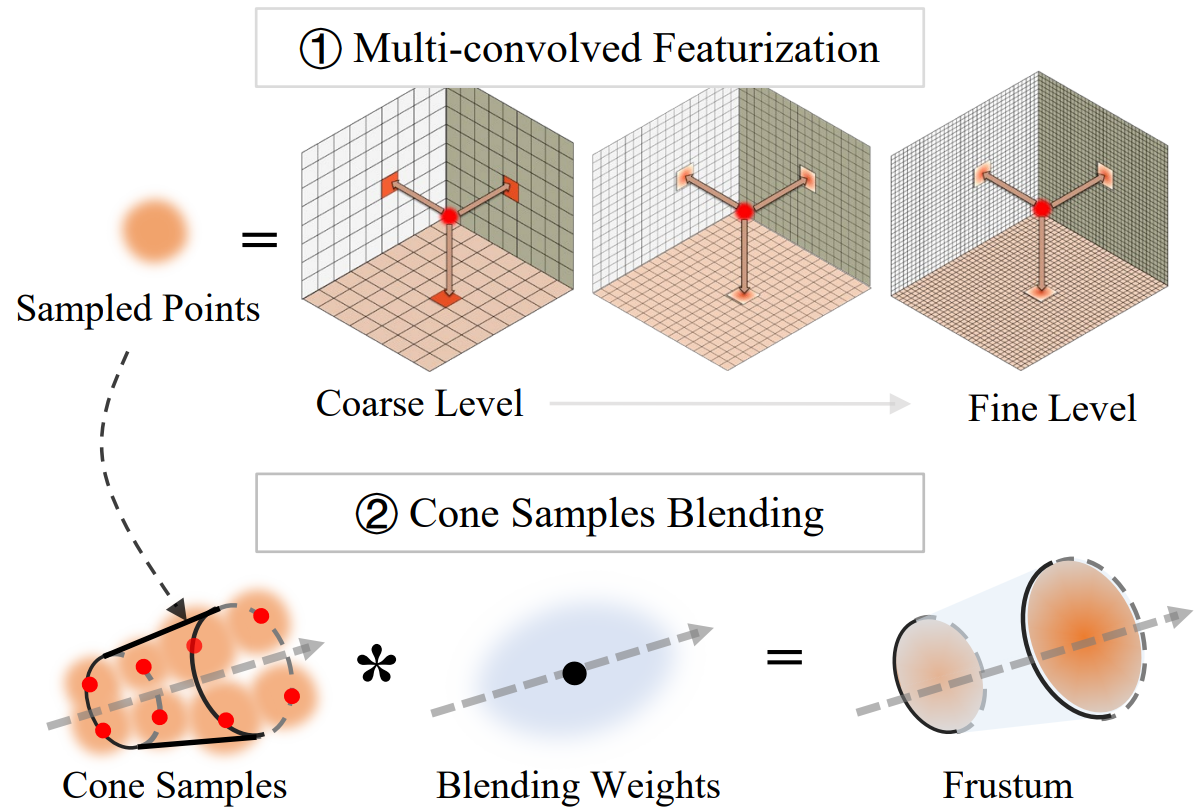

| 2023 | Neuralangelo |  |

|

| 2023 | NeuS2 |  |

|

| 2023 | PermutoSDF |  |

|

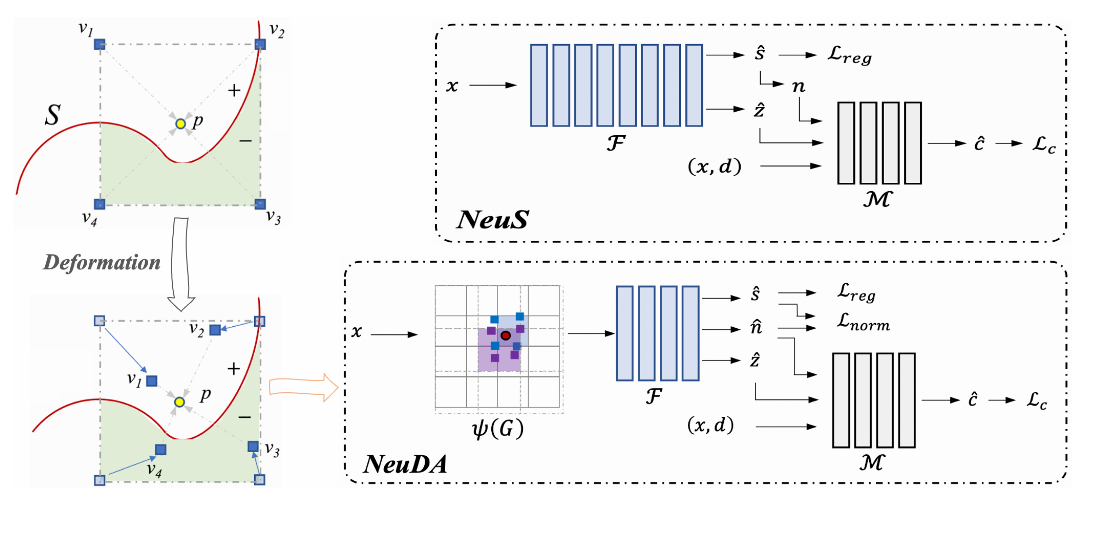

| 2023 | NeuDA |  |

|

| 2023 | Ref-NeuS | ||

| 2023 | ShadowNeuS | ||

| 2023 | NoPose-NeuS | ||

| 2022 | MonoSDF | ||

| 2022 | RegNeRF | ||

| 2023 | ashawkey/nerf2mesh: [ICCV2023] Delicate Textured Mesh Recovery from NeRF via Adaptive Surface Refinement |  |

|

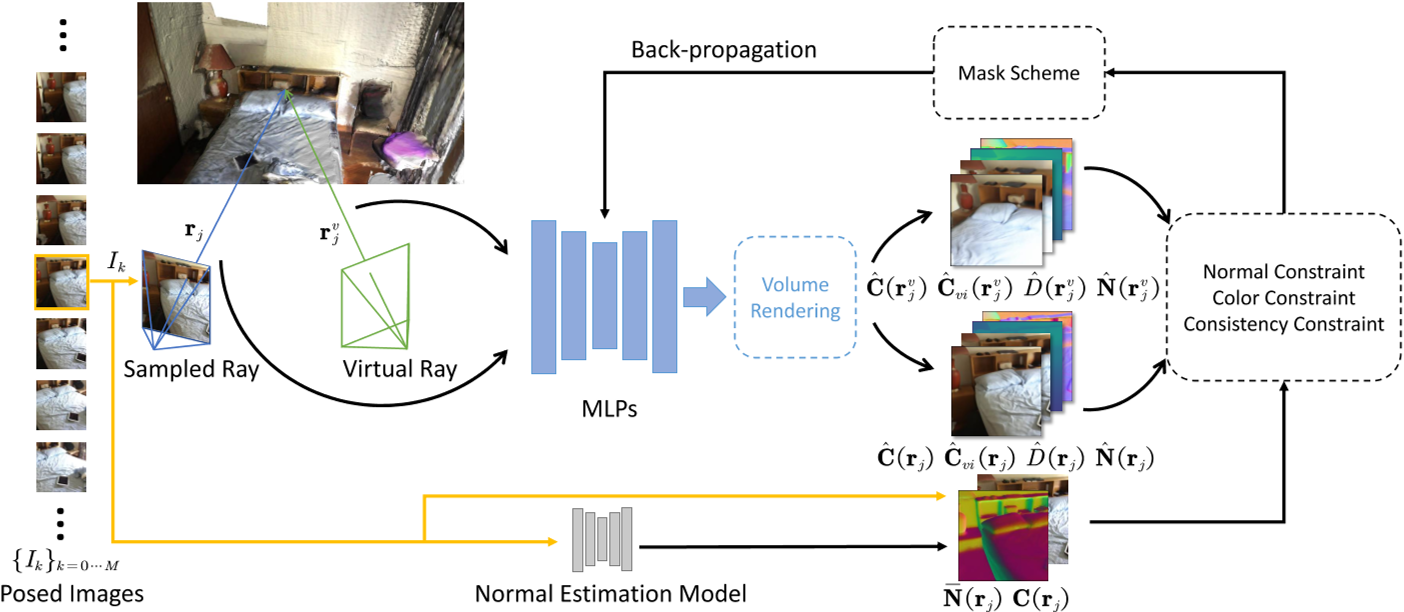

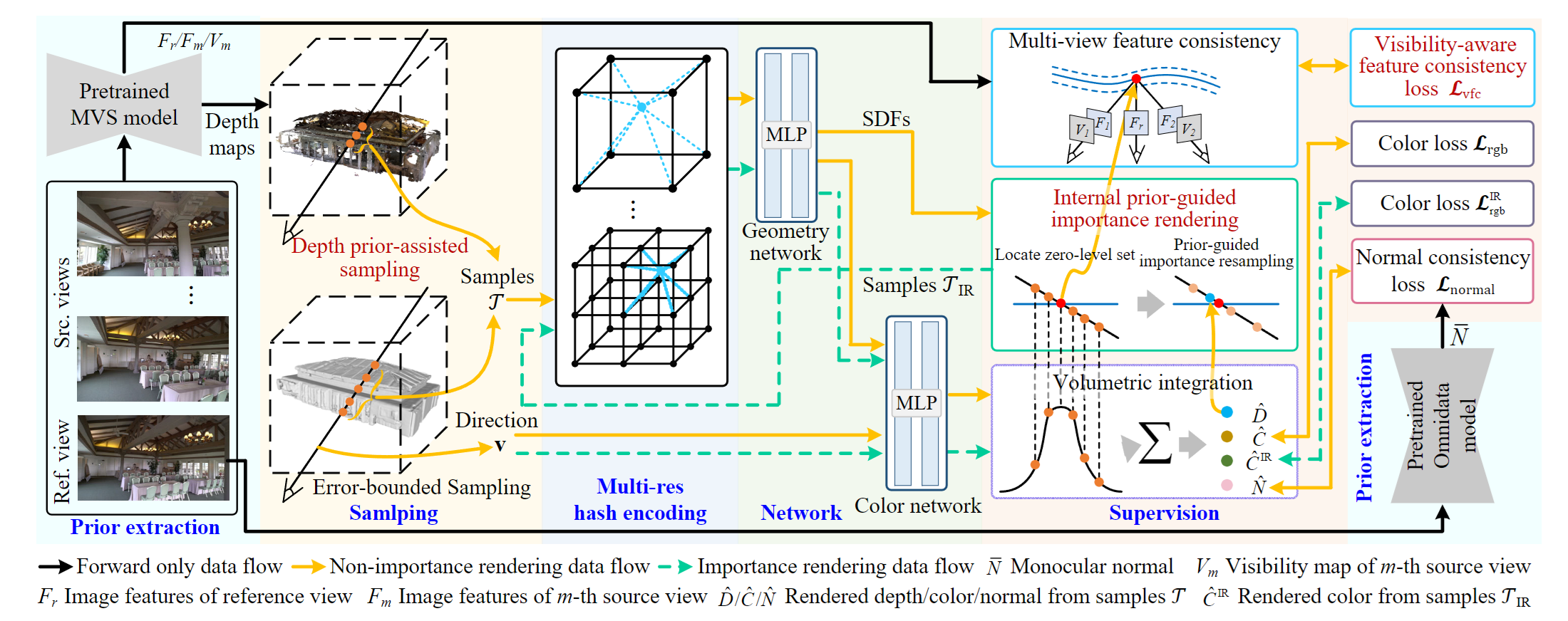

| 2024 | PSDF: Prior-Driven Neural Implicit Surface Learning for Multi-view ReconstructionPDF |  |

法向量先验+多视图一致,使用深度图引导采样 |
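Many rows in the table above build on NeuS-style SDF volume rendering. Its discrete form converts SDF samples $f(p_i)$ along a ray into opacities $\alpha_i = \max\big((\Phi_s(f(p_i)) - \Phi_s(f(p_{i+1}))) / \Phi_s(f(p_i)), 0\big)$ with $\Phi_s(x) = \mathrm{sigmoid}(s x)$, then alpha-composites them. The NumPy sketch below covers a single ray; the fixed sharpness `s=64` is an assumption (NeuS learns it), and colors are given per interval rather than predicted by a network.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def neus_alpha(sdf, s=64.0):
    # sdf: (N,) SDF values at sorted samples along one ray -> (N-1,) interval opacities
    cdf = sigmoid(s * sdf)
    alpha = (cdf[:-1] - cdf[1:]) / np.clip(cdf[:-1], 1e-8, None)
    return np.clip(alpha, 0.0, 1.0)

def composite(alpha, colors):
    # Front-to-back compositing: w_i = T_i * alpha_i with T_i = prod_{j<i} (1 - alpha_j)
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alpha)[:-1]])
    weights = trans * alpha
    return weights, (weights[:, None] * colors).sum(axis=0)
```

For a ray crossing a plane at $t = 0.5$ (SDF $0.5 - t$), the weights peak at the interval containing the surface and sum to almost 1, because the product $\prod_i (1-\alpha_i)$ telescopes to $\Phi_s(f_{\text{last}}) / \Phi_s(f_{\text{first}})$. This unbiasedness at the zero level set is what distinguishes NeuS from simply plugging a transformed SDF into NeRF's density.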

ActiveNeRF

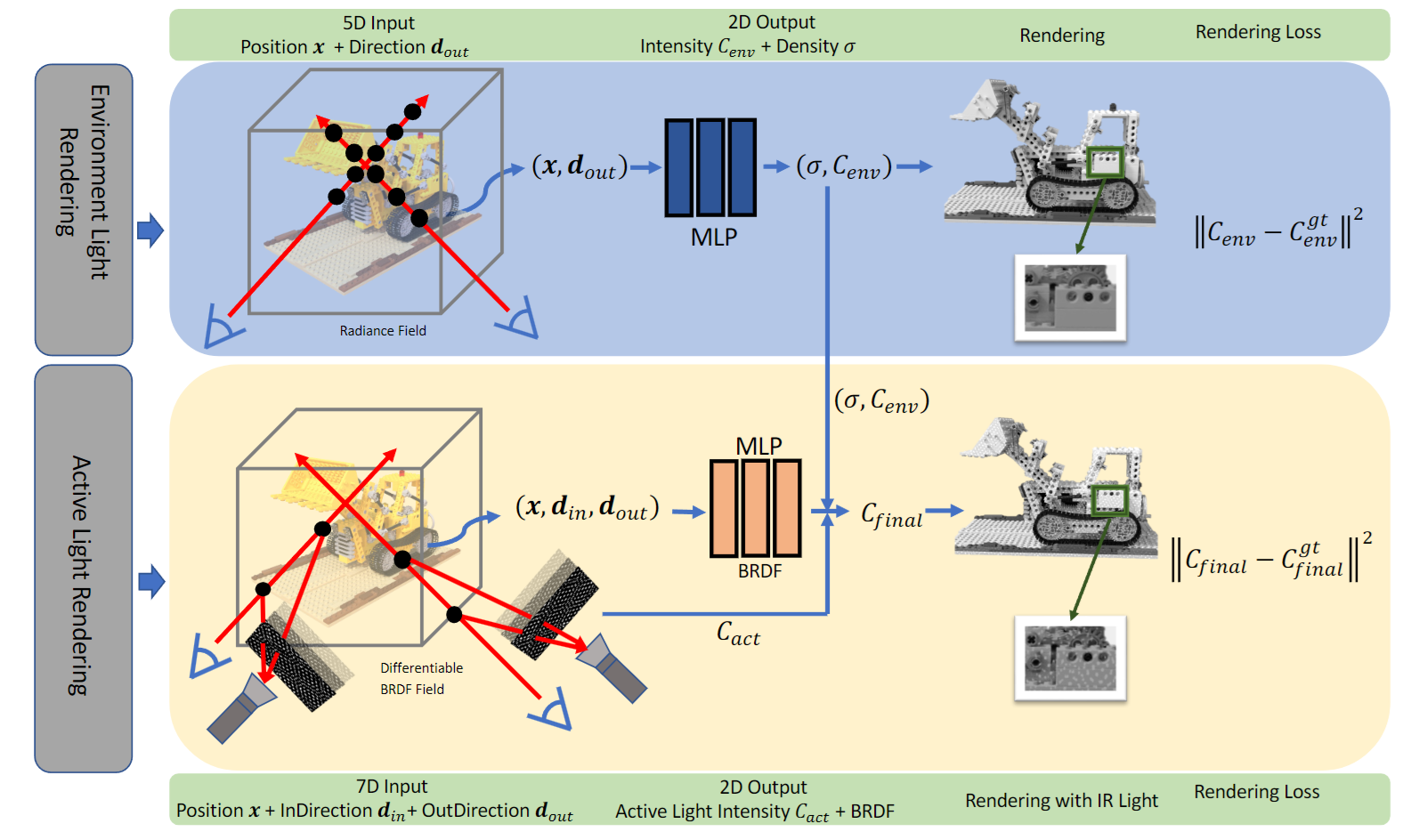

ActiveNeRF: Learning Accurate 3D Geometry by Active Pattern Projection | PDF

Borrows the idea of structured light: patterns are projected onto the scene/object to improve NeRF's geometric quality.

VF-NeRF

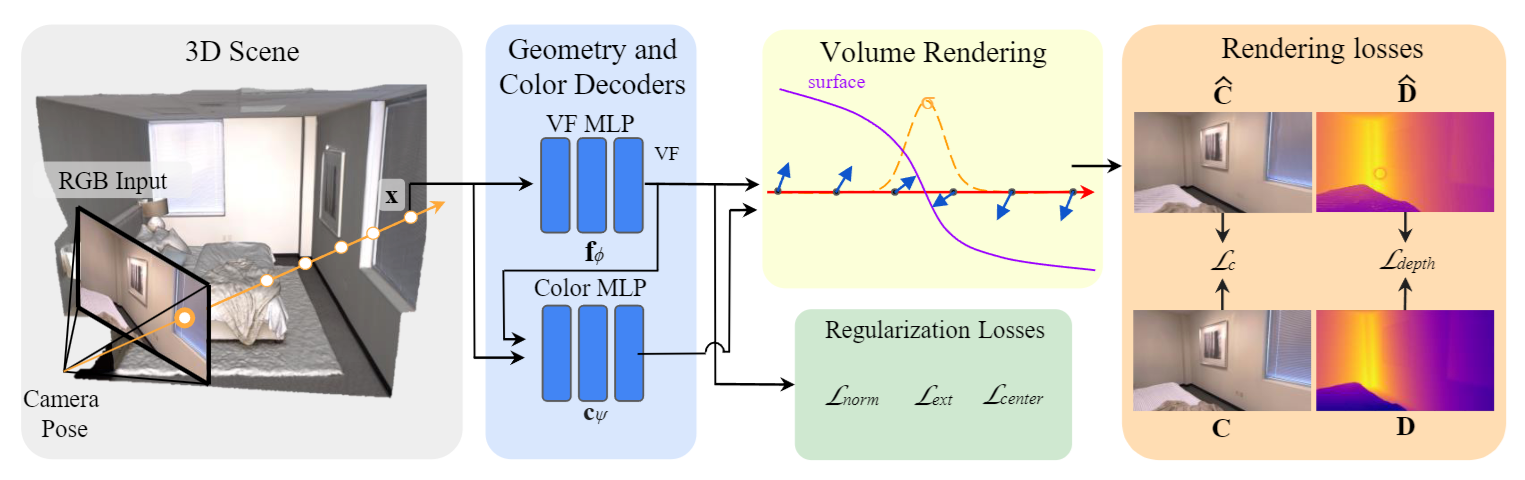

VF-NeRF: Learning Neural Vector Fields for Indoor Scene Reconstruction | PDF

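Targets weakly textured regions in indoor scenes.

A Neural Vector Field represents, for each point in space, the vector to its nearest surface point.

The VF is defined by the unit vector pointing to the nearest surface point. It therefore flips direction at the surface, where it equals the explicit surface normal (up to sign). Apart from this flip, the VF stays constant along a plane, which provides a strong inductive bias when representing planar surfaces.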
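The VF definition can be checked against an analytic SDF $f$: away from the medial axis, the unit vector towards the nearest surface point is $-\operatorname{sign}(f(x))\,\nabla f(x)/\lVert\nabla f(x)\rVert$. This NumPy sketch only illustrates that definition with finite-difference gradients; VF-NeRF itself learns the vector field directly with a network, and the helper names here are hypothetical.

```python
import numpy as np

def sdf_gradient(sdf_fn, x, eps=1e-5):
    # Central finite differences, x has shape (N, 3)
    g = np.zeros_like(x)
    for i in range(3):
        d = np.zeros(3)
        d[i] = eps
        g[:, i] = (sdf_fn(x + d) - sdf_fn(x - d)) / (2 * eps)
    return g

def vector_field(sdf_fn, x):
    # Unit vector from x towards its nearest surface point
    g = sdf_gradient(sdf_fn, x)
    g /= np.linalg.norm(g, axis=-1, keepdims=True)
    return -np.sign(sdf_fn(x))[:, None] * g
```

For the plane $z = 0$, every point above it gets $(0, 0, -1)$ and every point below gets $(0, 0, 1)$: constant along the plane and flipping across it, which is the inductive bias described above.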

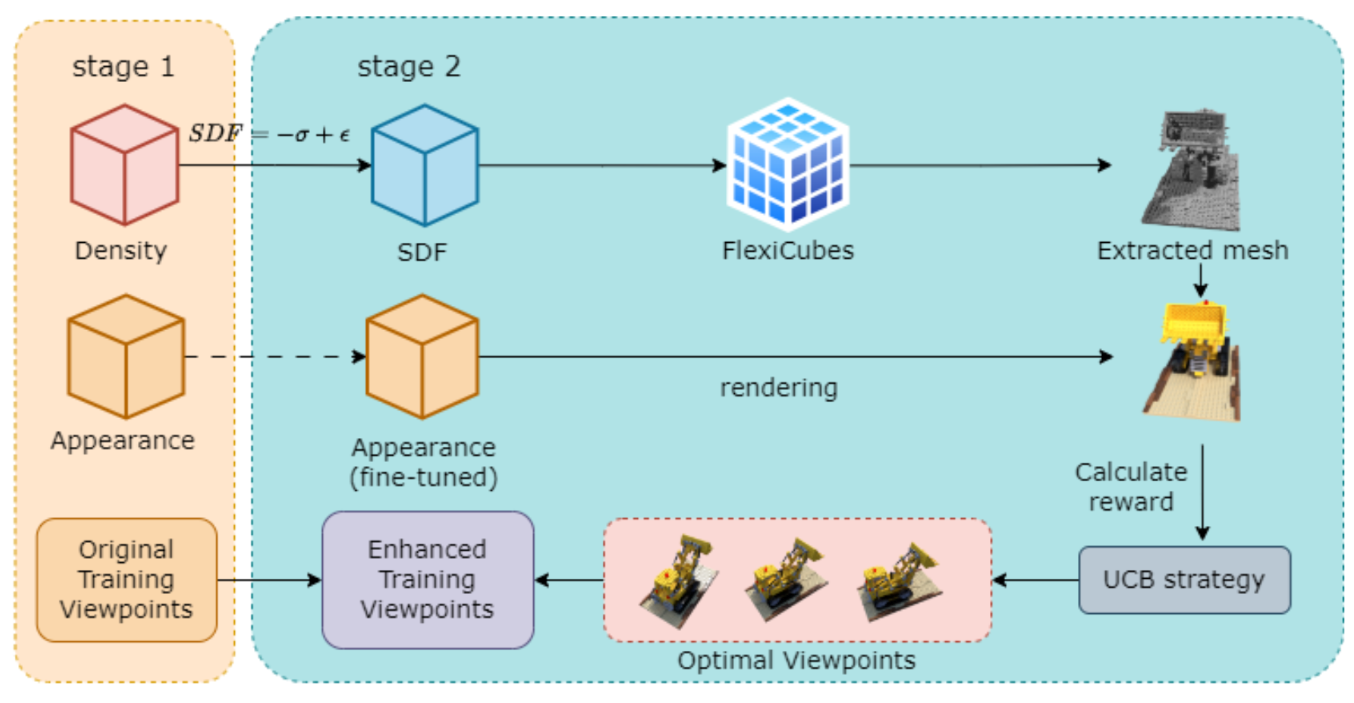

$R^2$-Mesh

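Uses reinforcement learning to iteratively refine the appearance and mesh extracted from the radiance field and SDF, through a differentiable extraction strategy.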

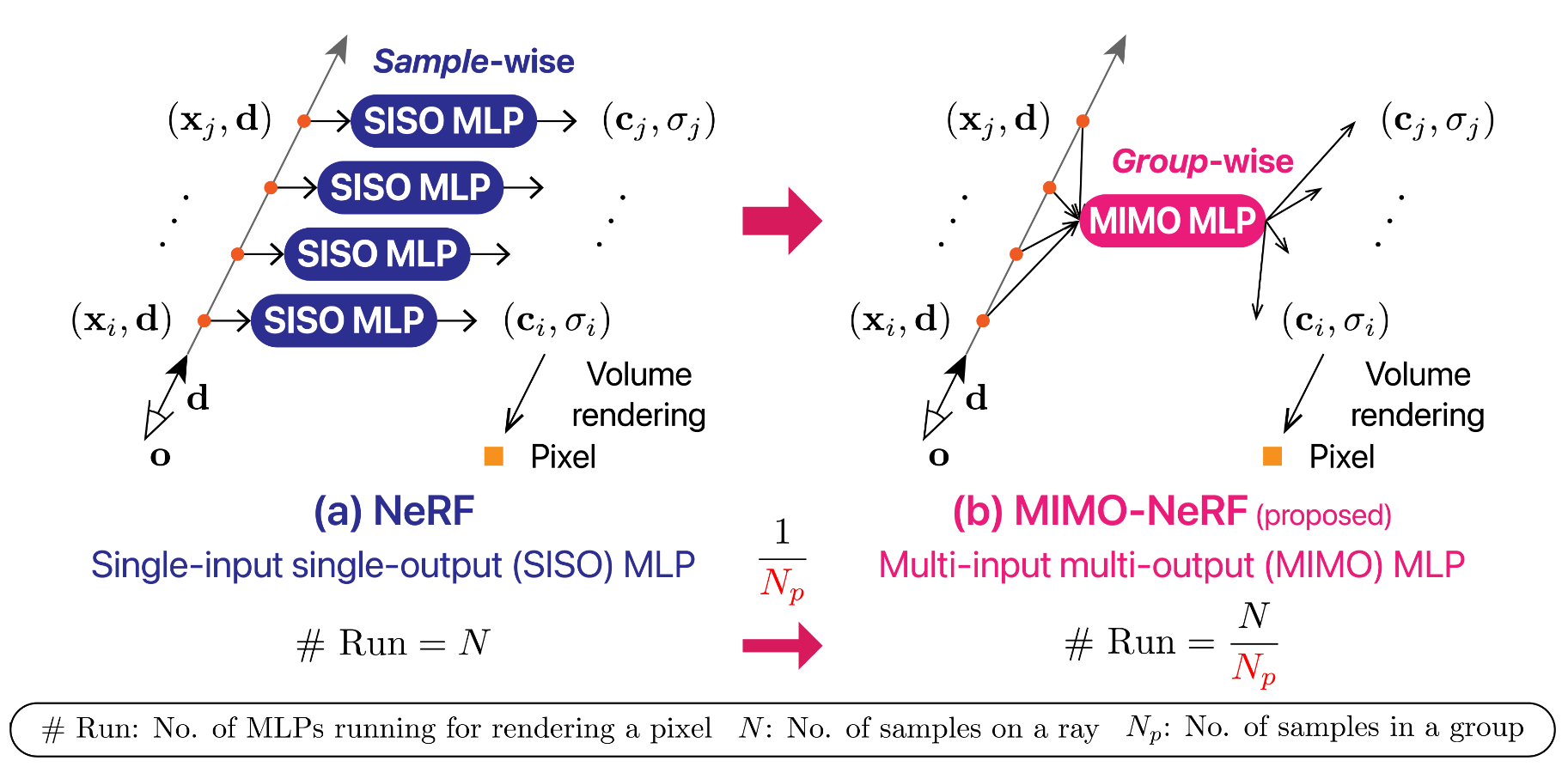

MIMO-NeRF

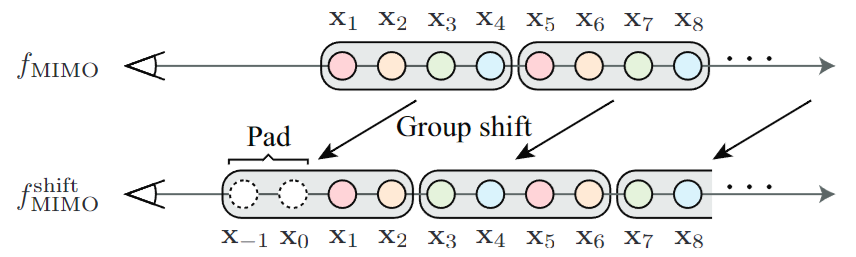

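A multi-input multi-output MLP (NeRF) reduces the number of MLP evaluations and thus speeds up NeRF. However, the color and volume density predicted for a point depend on which other input coordinates are selected into the same group, which causes ambiguity. $\mathcal{L}_{pixel}$ alone is not enough to resolve this ambiguity, so self-supervised learning is introduced.

Group shift. Each point is evaluated in several different groups.

Variation reduction. Each point is fed in repeatedly, several times.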
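The grouping ambiguity can be made concrete with a small NumPy sketch. Assumptions, all hypothetical: a two-layer ReLU MLP that takes `group_size` concatenated coordinates and returns 4 values (RGB + density) per point, group shift implemented as a cyclic roll of the point order, and a self-consistency loss defined as the variance of each point's outputs across group assignments. This stand-in illustrates the idea and is not MIMO-NeRF's actual architecture or loss.

```python
import numpy as np

def init_mimo_mlp(group_size, hidden=64, out_per_point=4, seed=0):
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=0.3, size=(3 * group_size, hidden))
    w2 = rng.normal(scale=0.3, size=(hidden, out_per_point * group_size))
    return w1, w2

def mimo_forward(params, points, group_size):
    # One MLP call per group of `group_size` points instead of one per point
    w1, w2 = params
    n = len(points)
    x = points.reshape(n // group_size, 3 * group_size)
    h = np.maximum(x @ w1, 0.0)
    return (h @ w2).reshape(n, -1)                 # (n, out_per_point)

def group_shift_outputs(params, points, group_size, shift):
    # Regroup points by rolling their order, then undo the permutation on the outputs
    perm = np.roll(np.arange(len(points)), shift)
    out = mimo_forward(params, points[perm], group_size)
    return out[np.argsort(perm)]

def self_consistency_loss(params, points, group_size, shifts=(0, 1)):
    # Zero only if a point's prediction does not depend on its group mates
    outs = np.stack([group_shift_outputs(params, points, group_size, s) for s in shifts])
    return np.mean(np.var(outs, axis=0))
```

A randomly initialised MIMO MLP gives a positive loss, while a block-diagonal one (each point only sees itself) gives zero, which is the behaviour the self-supervised term is meant to encourage.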

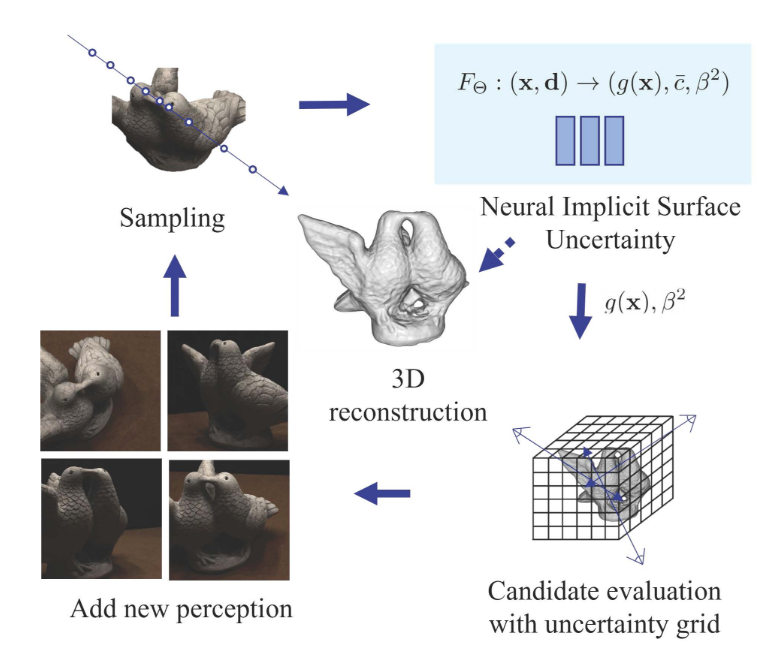

ActiveNeuS

Active Neural 3D Reconstruction with Colorized Surface Voxel-based View Selection

Active 3D reconstruction using uncertainty-guided view selection: candidate views are evaluated while taking two kinds of uncertainty into account. The paper introduces Colorized Surface Voxel (CSV)-based view selection, a new next-best view (NBV) selection method exploiting surface voxel-based measurement of uncertainty in scene appearance.

- Compared with actively selecting the next best view, would selecting the image pixels with the biggest error work better?

Binary Opacity Grids

Binary Opacity Grids: Capturing Fine Geometric Detail for Mesh-Based View Synthesis

https://binary-opacity-grid.github.io/

Builds on BakedSDF.

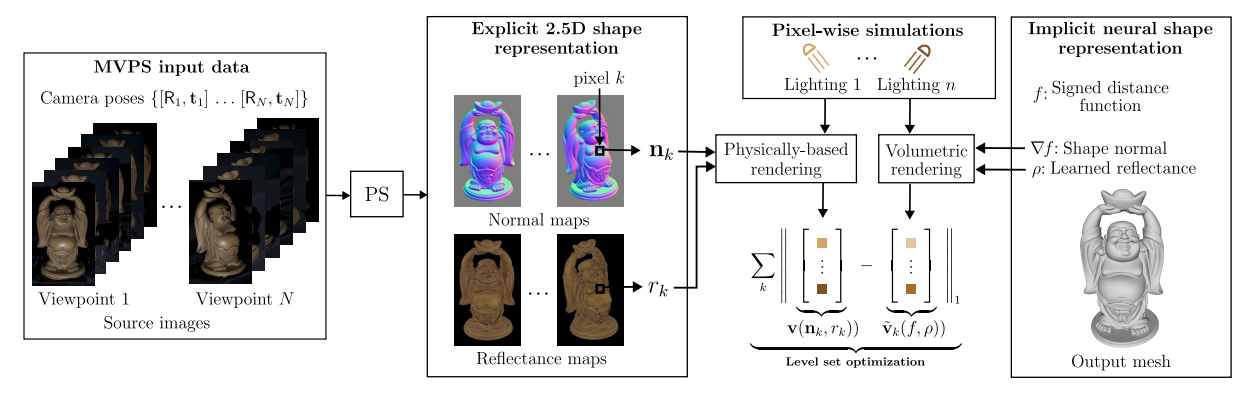

RNb-NeuS

bbrument/RNb-NeuS: Code release for RNb-NeuS. (github.com)

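Seamlessly integrates reflectance and normal maps as input data into 3D reconstruction based on neural volume rendering.

Accounts for highlights and shadows, significantly improving detailed 3D reconstruction in high-curvature or low-visibility regions.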

ReTR

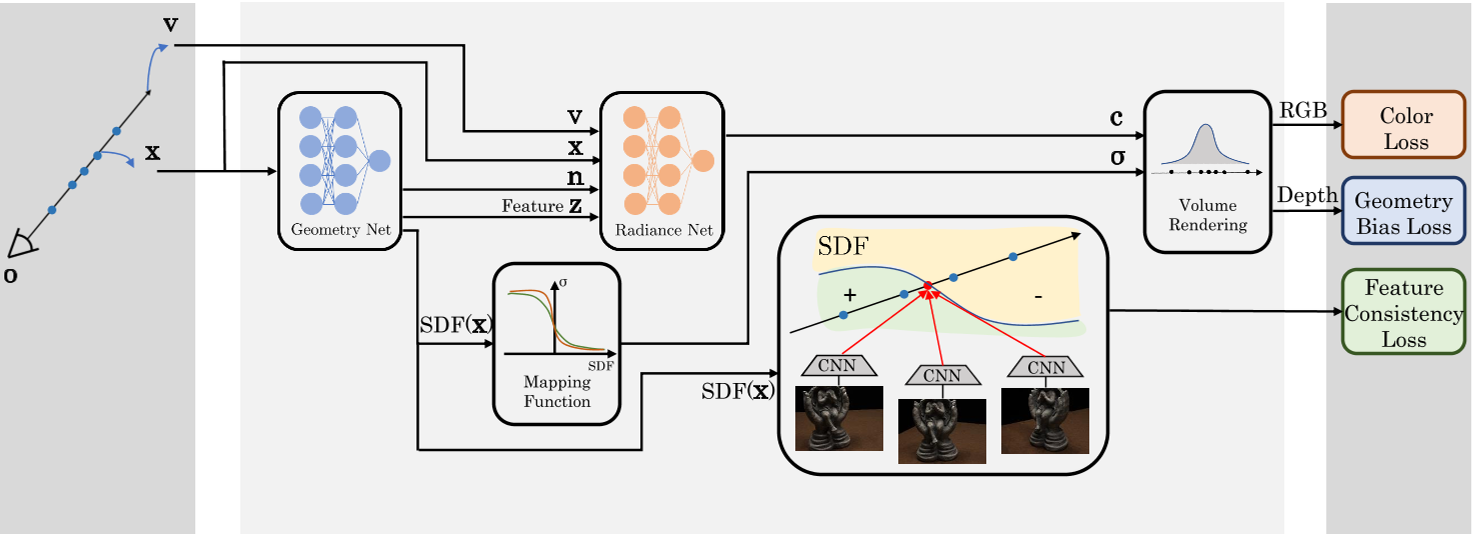

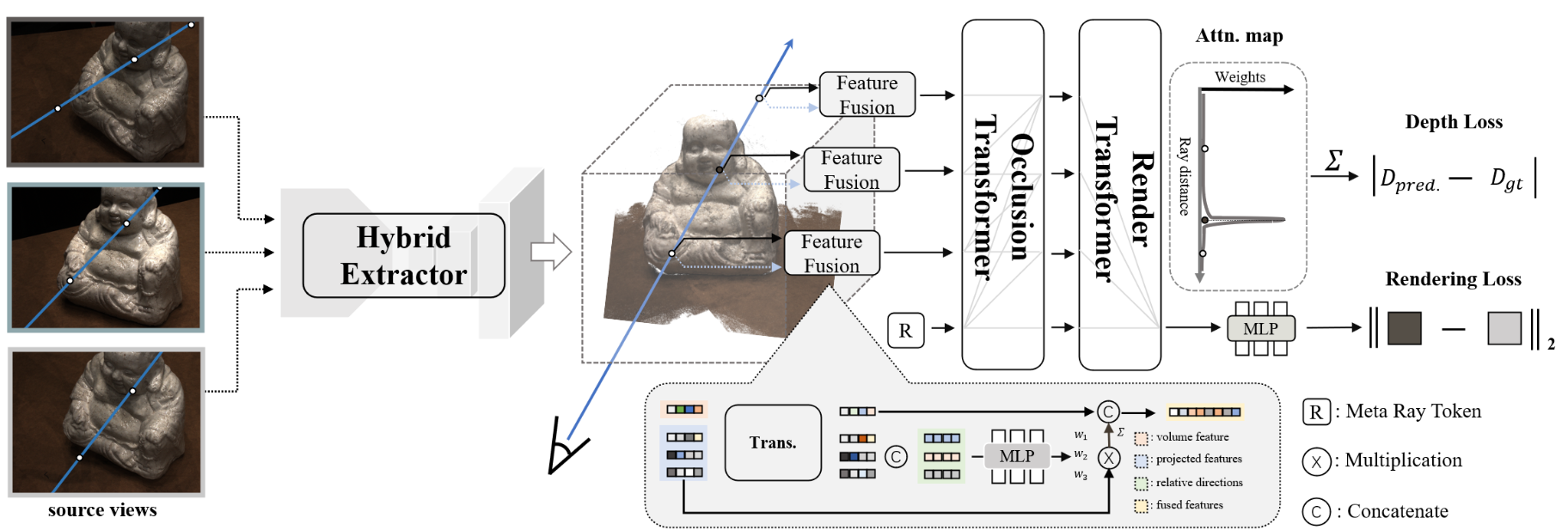

ReTR: Modeling Rendering via Transformer for Generalizable Neural Surface Reconstruction

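(Generalized Rendering Function) Replaces the rendering process with a Transformer; uses depth supervision.

It introduces a learnable meta-ray token and utilizes the cross-attention mechanism to simulate the interaction of the rendering process with sampled points and render the observed color.

- Uses a Transformer in place of the rendering process. Previous methods only use the transformer to enhance feature aggregation, and the sampled point features are still decoded into colors and densities and aggregated using traditional volume rendering, leading to unconfident surface prediction. The authors argue that volume rendering simplifies light transport (absorption, emission, scattering): each of these is a nonlinear photon-particle interaction, and an incident photon's properties depend on both its intrinsic properties and those of the medium. The volume rendering equation compresses these complexities (nonlinearities) into a single density value, over-simplifying how incident photons are modeled. In addition, blending colors with weights ignores complex physical effects and relies too heavily on colors projected from the input views, so the model has to accumulate projected colors over a wider band of points around the true surface, which produces a "murky" surface appearance.

- The depth loss contributes more; the predicted depth maps are more accurate.

- By operating within a high-dimensional feature space rather than the color space, ReTR mitigates sensitivity to projected colors in source views.

CNN + 3D decoder + Transformer + NeRF, supervised with depth maps.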
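The meta-ray-token idea can be sketched as single-head cross-attention: the ray token forms the query, the sampled point features form keys and values, and the softmax weights take the place of volume-rendering weights. This NumPy sketch is a simplification, not ReTR's architecture. The projection matrices are random placeholders, the output is a feature (a separate decoder would map it to color), and reading out an expected depth $\sum_i a_i t_i$ from the attention weights is an assumption about how a depth loss could attach.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def meta_ray_cross_attention(ray_token, point_feats, wq, wk, wv):
    # ray_token: (d,), point_feats: (N, d) features of the N samples on the ray
    q = ray_token @ wq                         # (d_k,)
    k = point_feats @ wk                       # (N, d_k)
    v = point_feats @ wv                       # (N, d_v)
    attn = softmax(k @ q / np.sqrt(len(q)))    # (N,) one weight per sample, sums to 1
    return attn, attn @ v                      # weights and the attended ray feature

def expected_depth(attn, t_vals):
    # Hypothetical depth read-out: attention-weighted sample depth
    return attn @ t_vals
```

Because the aggregation happens in feature space, the color is decoded only once per ray instead of blending per-sample colors projected from the source views.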

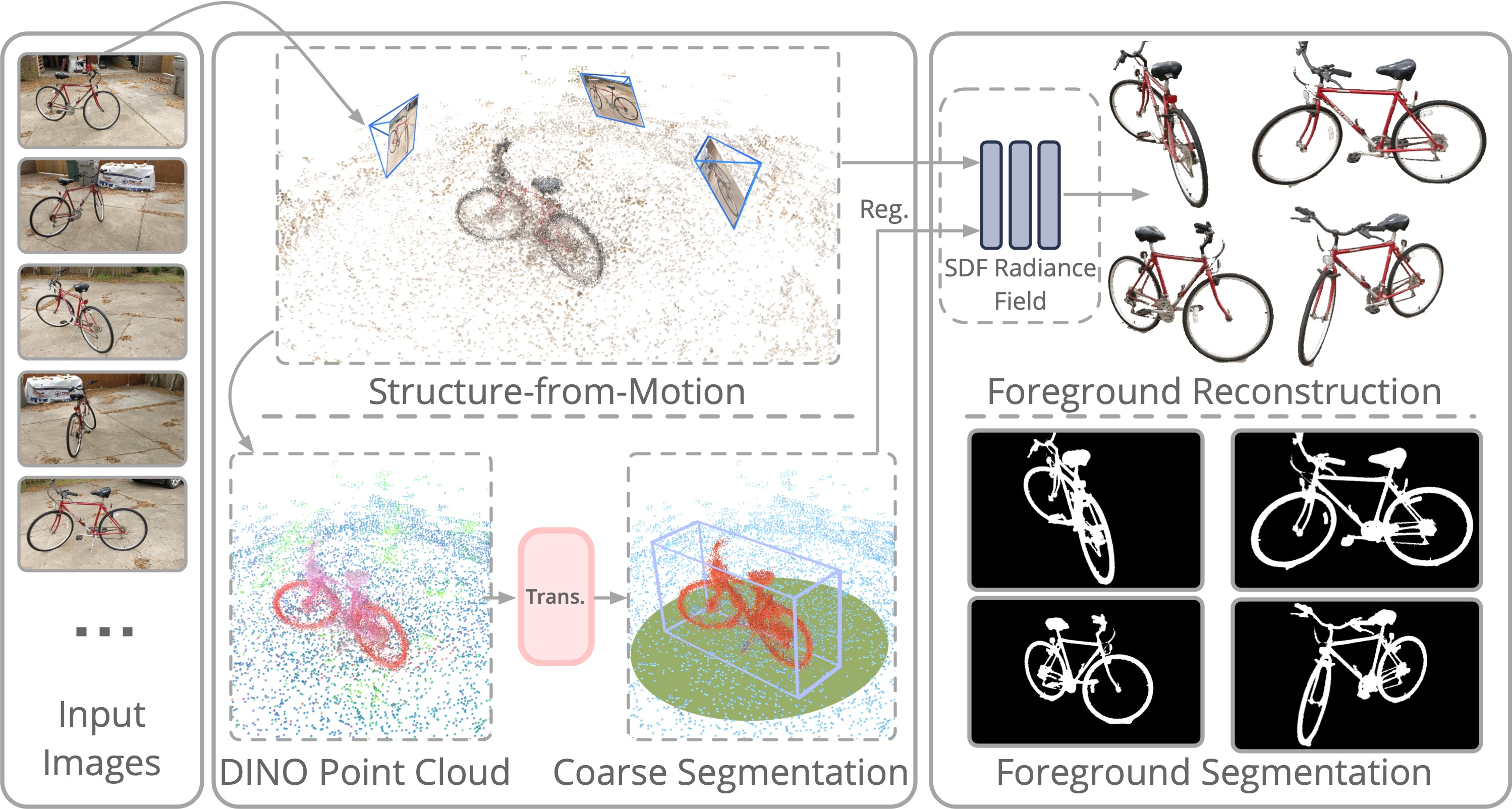

AutoRecon

AutoRecon: Automated 3D Object Discovery and Reconstruction (zju3dv.github.io)

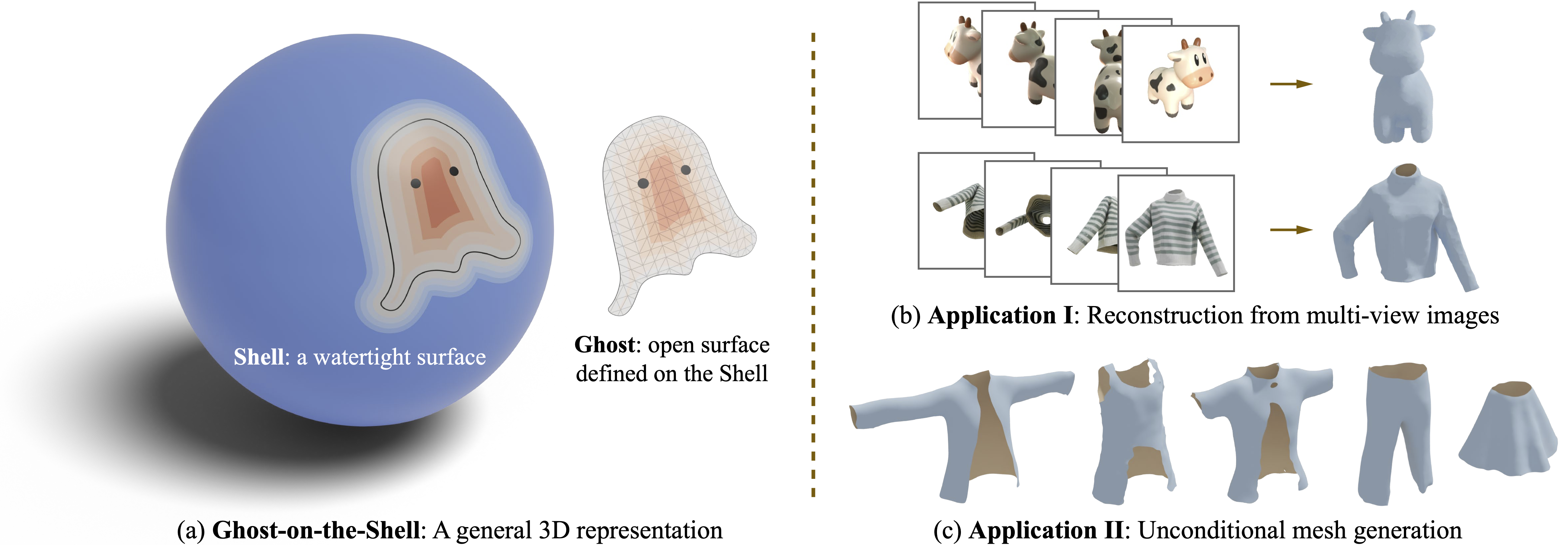

G-Shell

Reconstructs both watertight objects and non-watertight ones such as clothing, i.e. a general-purpose representation.

G-Shell (gshell3d.github.io)

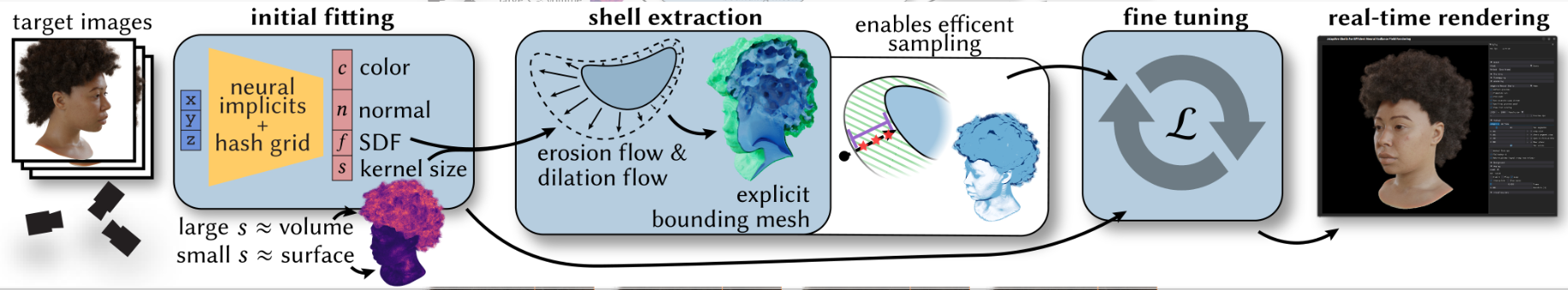

Adaptive Shells

Adaptive Shells for Efficient Neural Radiance Field Rendering (nvidia.com)

Adaptively switches between volume-based rendering and surface-based rendering.

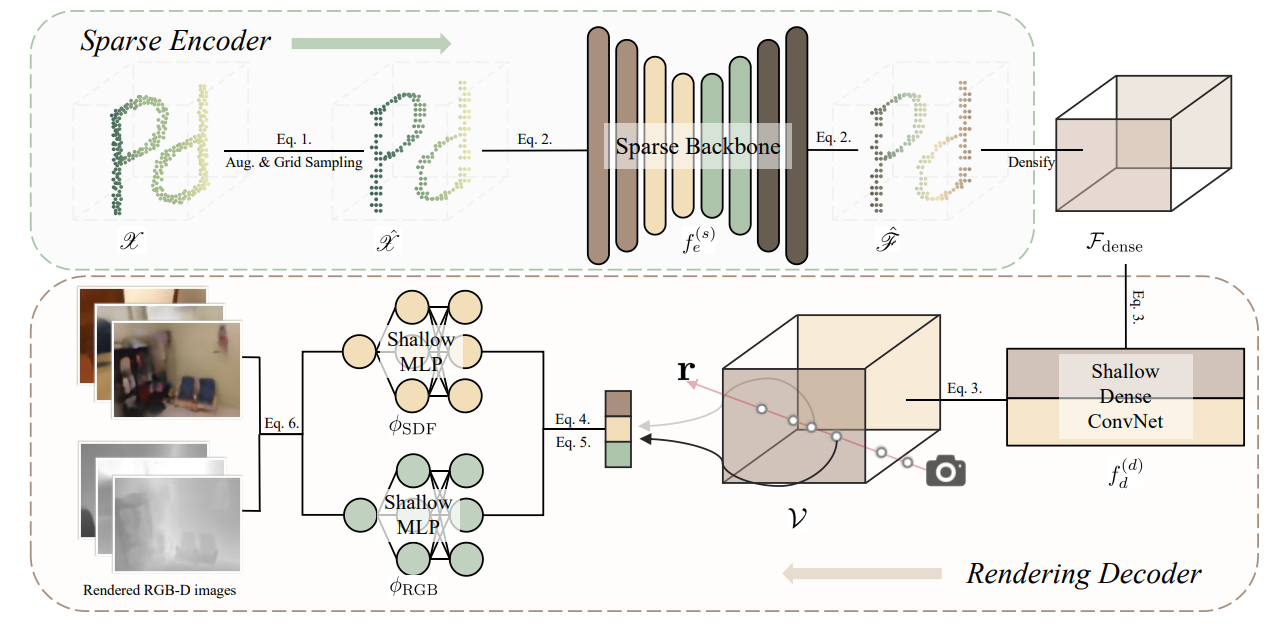

PonderV2

Point-cloud feature extraction (point cloud encoder) + NeRF rendering of images + an image loss that optimizes the point cloud encoder

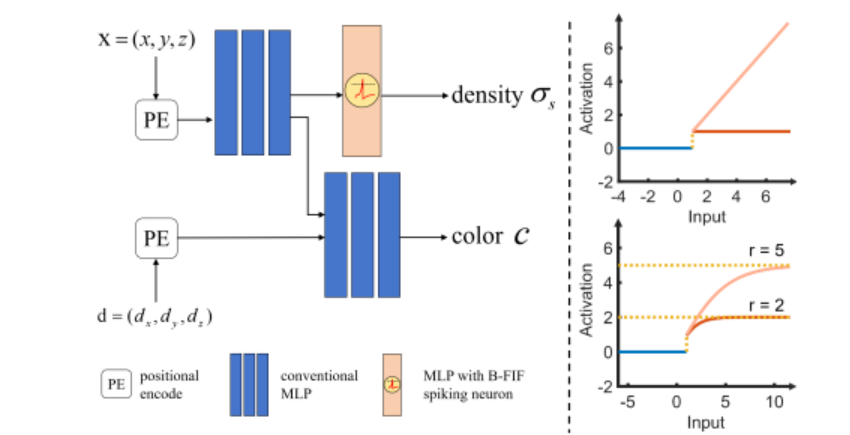

Spiking NeRF

Spiking NeRF: Representing the Real-World Geometry by a Discontinuous Representation

An MLP is a continuous function, so the NeRF network architecture is modified to produce a discontinuous density field.

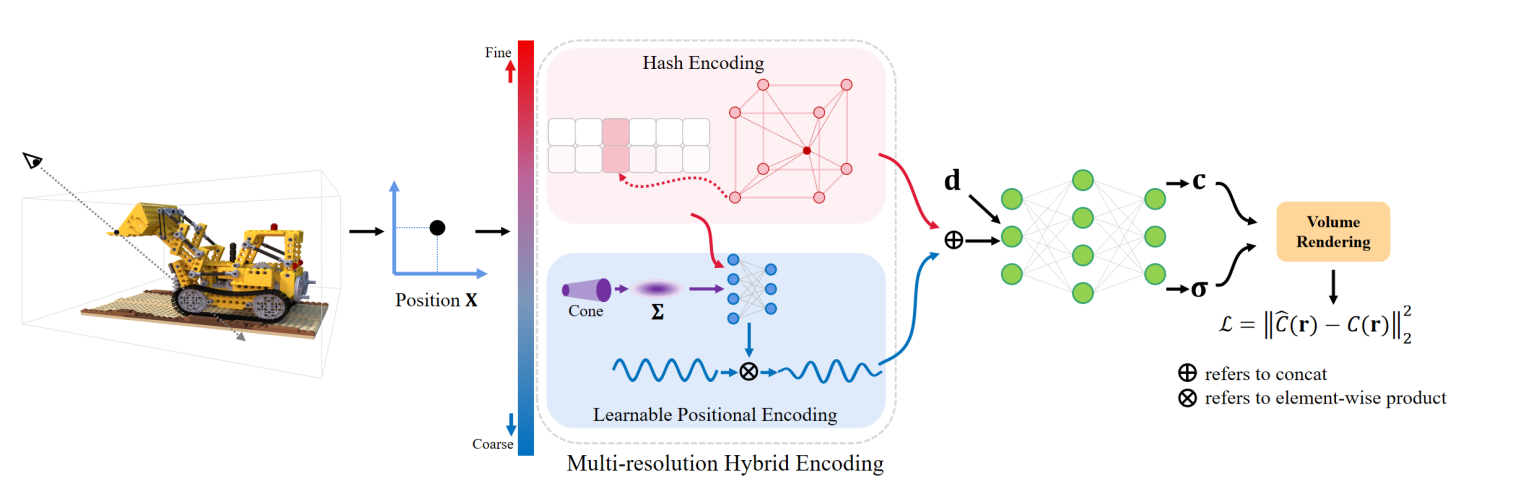

Hyb-NeRF

[2311.12490] Hyb-NeRF: A Multiresolution Hybrid Encoding for Neural Radiance Fields (arxiv.org)

Multiresolution hybrid encoding.

Dynamic

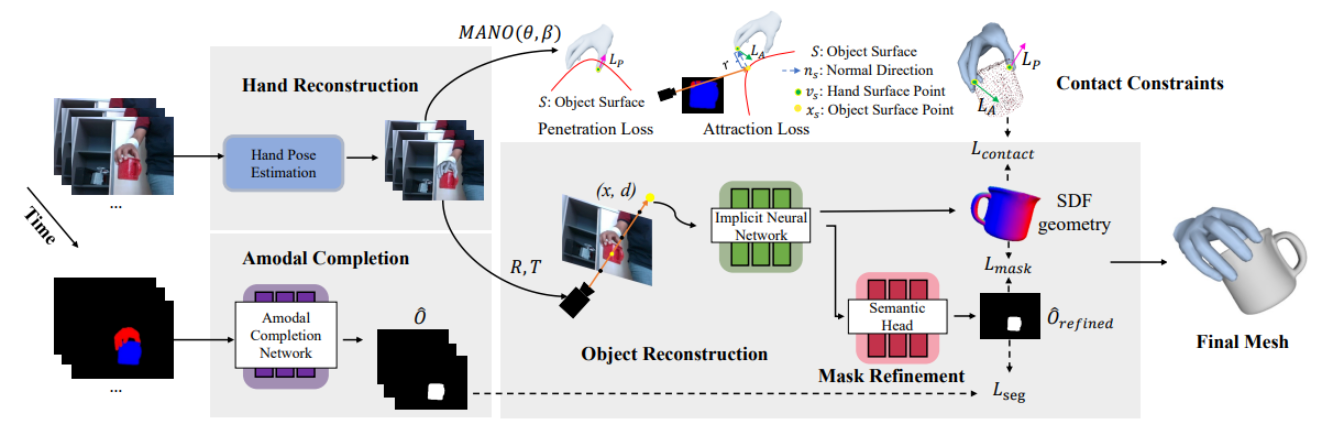

In-Hand 3D Object

In-Hand 3D Object Reconstruction from a Monocular RGB Video

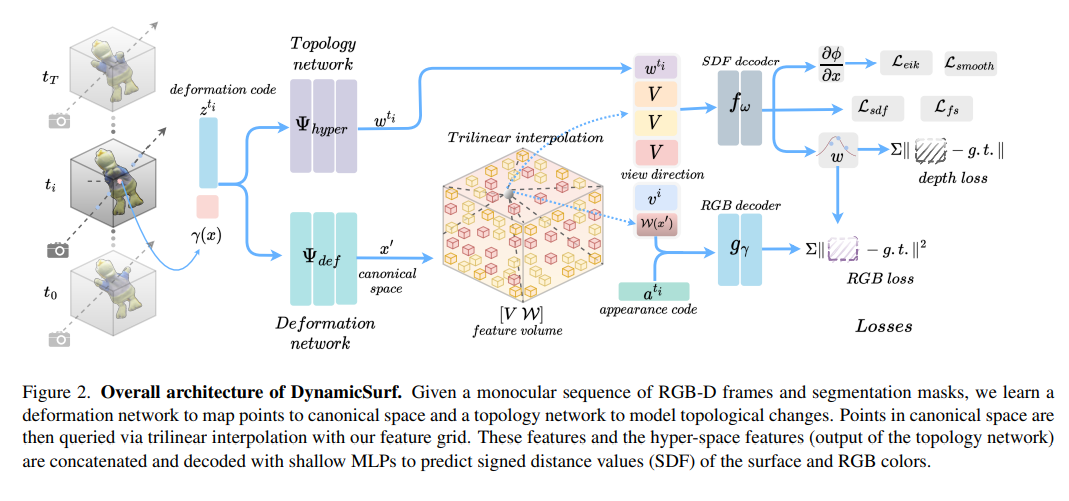

DynamicSurf

DynamicSurf: Dynamic Neural RGB-D Surface Reconstruction with an Optimizable Feature Grid

3D reconstruction from monocular RGB-D video

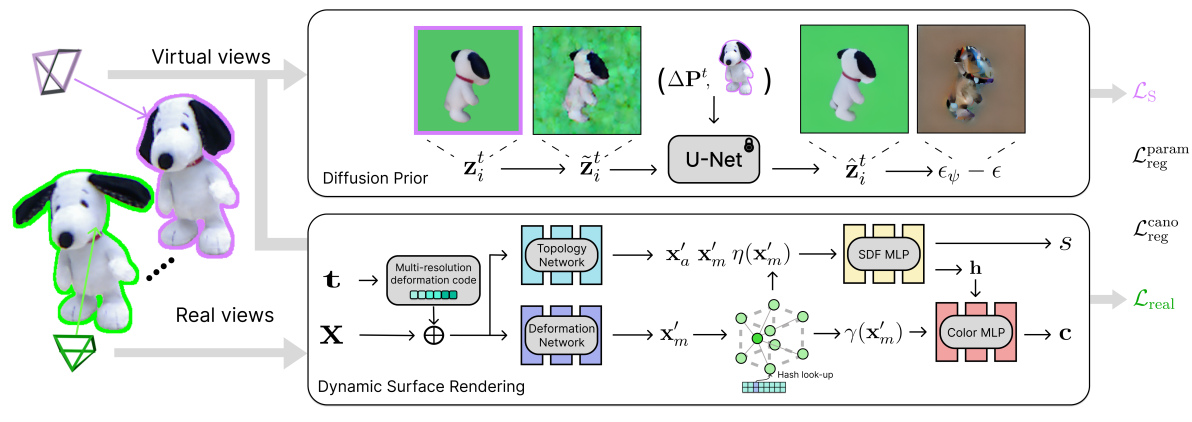

MorpheuS

MorpheuS (hengyiwang.github.io)

MorpheuS: Neural Dynamic 360° Surface Reconstruction from Monocular RGB-D Video

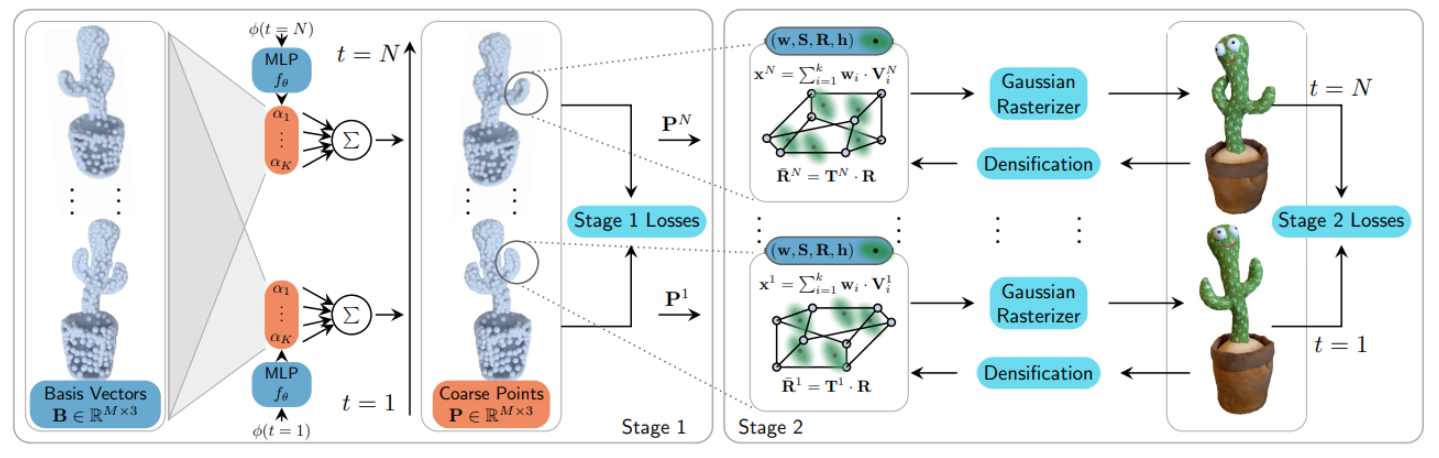

NPGs

[2312.01196] Neural Parametric Gaussians for Monocular Non-Rigid Object Reconstruction (arxiv.org)

3DGS (Rasterize+Primitives)

A Survey on 3D Gaussian Splatting

Recent Advances in 3D Gaussian Splatting

Idea

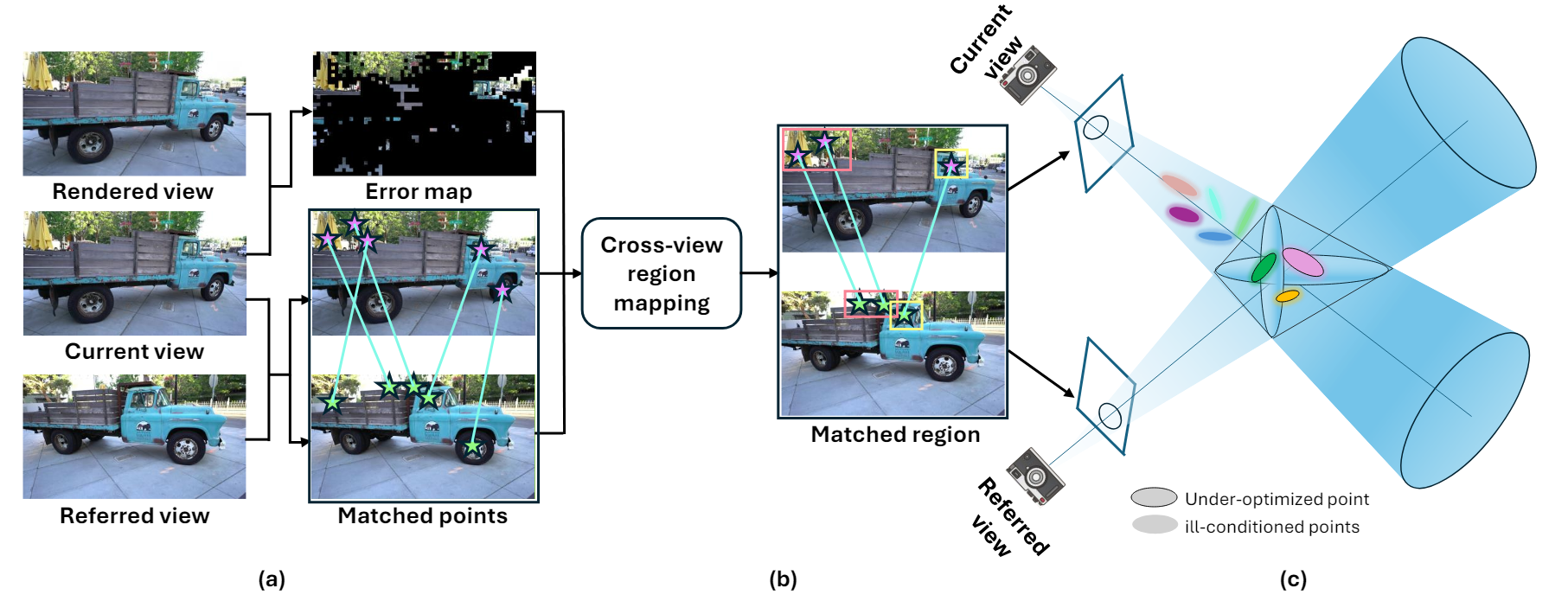

Localized Points Management

Gaussian Splatting with Localized Points Management

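Localized points management. The goal is to improve novel view synthesis quality by managing points more actively in local regions that render poorly (error map, cross-view region).

However, for implicit reconstruction (or novel view synthesis) methods like NeRF, strengthening weakly reconstructed regions may degrade other regions that are already well reconstructed. Could the weak regions be reconstructed by a separate network instead? That first requires knowing which points need optimization (the points on the error map).

- Idea: local optimization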
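The first step of that idea, finding which points need optimization, can be sketched as: compute a per-pixel error map, keep the worst pixels, and back-project them with the rendered depth into 3D candidate regions. This is a generic NumPy sketch, not the paper's procedure; the quantile threshold, the pinhole intrinsics `K`, the OpenCV-style camera-to-world matrix `c2w` and all function names are assumptions.

```python
import numpy as np

def error_map(rendered, gt):
    # Mean absolute color error per pixel, shape (H, W)
    return np.abs(rendered - gt).mean(axis=-1)

def select_error_pixels(err, quantile=0.99):
    # Keep pixels whose error exceeds the given quantile
    thresh = np.quantile(err, quantile)
    v, u = np.nonzero(err > thresh)            # v = row, u = column
    return u, v

def backproject(u, v, depth, K, c2w):
    # Pinhole back-projection of pixels (u, v) with depth into world coordinates
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    z = depth[v, u]
    pts_cam = np.stack([(u - cx) / fx * z, (v - cy) / fy * z, z], axis=-1)
    return pts_cam @ c2w[:3, :3].T + c2w[:3, 3]
```

The returned 3D points mark where densification or a separate local model could be focused, leaving the well-reconstructed regions untouched.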

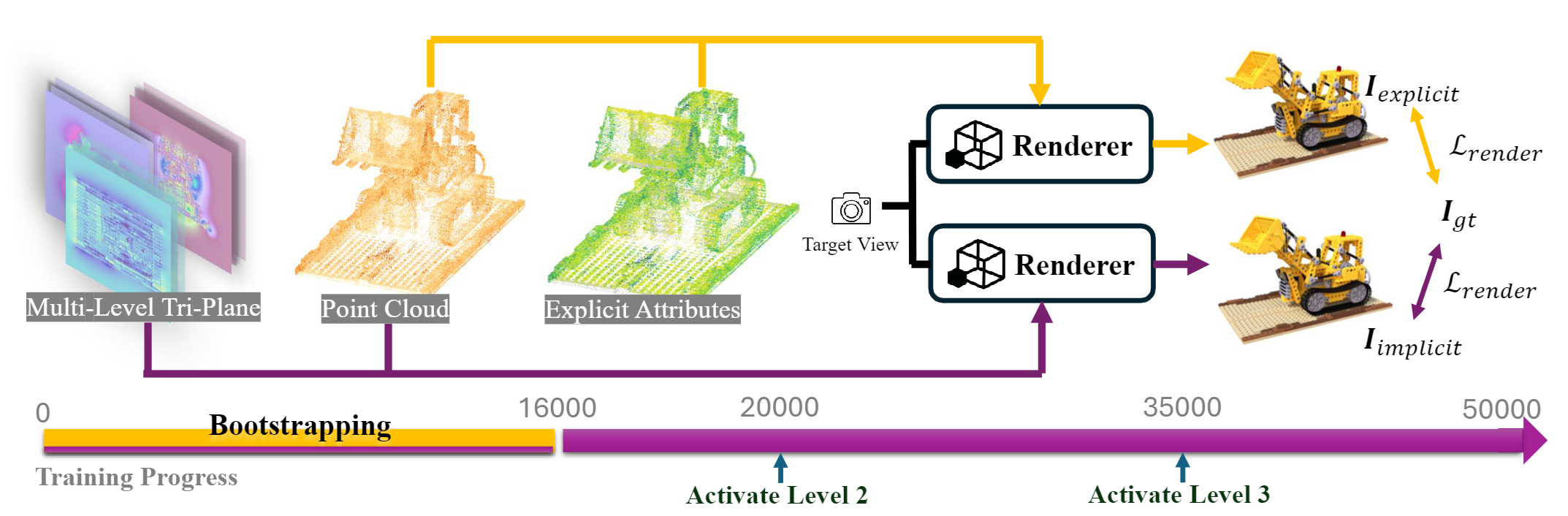

IGS

Implicit Gaussian Splatting with Efficient Multi-Level Tri-Plane Representation

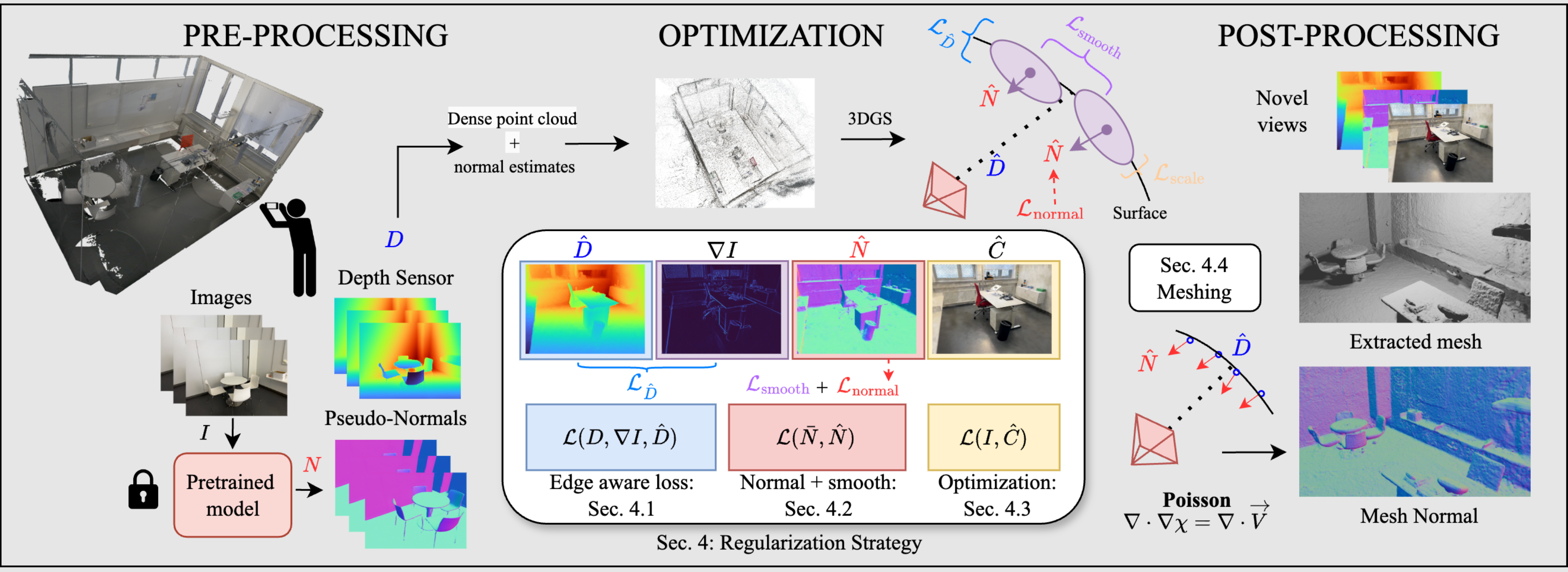

DN-Splatter

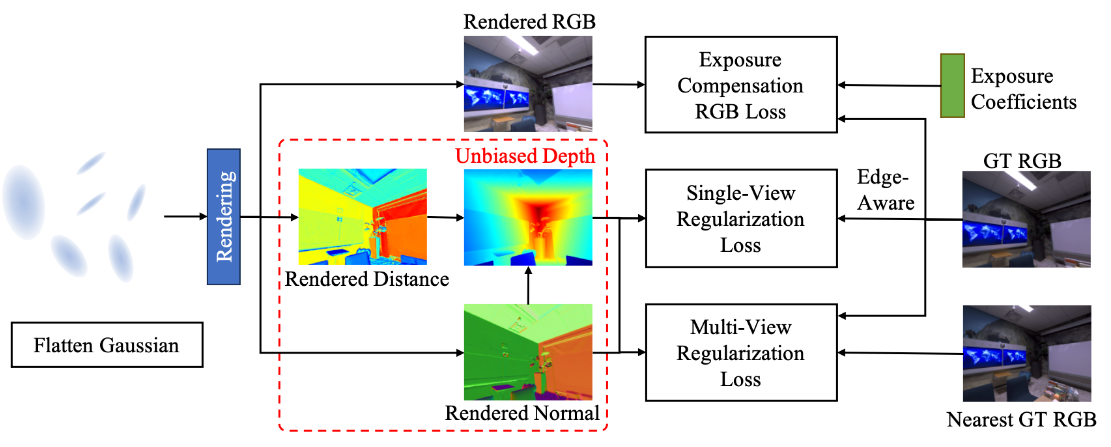

DN-Splatter: Depth and Normal Priors for Gaussian Splatting and Meshing

Indoor scenes; fuses depth and normal priors from handheld devices and from general-purpose networks.
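A common way to turn a depth prior into a normal prior is to back-project the depth map and take the cross product of neighbouring-pixel differences; a cosine-style loss can then compare rendered normals with the prior. The NumPy sketch below shows this generic recipe, not DN-Splatter's code. It assumes pinhole intrinsics `K`, an OpenCV-style camera (looking along +z) and normals oriented towards the camera; the function names are hypothetical.

```python
import numpy as np

def backproject_depth(depth, K):
    # Per-pixel 3D points in camera coordinates, shape (H, W, 3)
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]
    x = (u - K[0, 2]) / K[0, 0] * depth
    y = (v - K[1, 2]) / K[1, 1] * depth
    return np.stack([x, y, depth], axis=-1)

def depth_to_normals(depth, K):
    # Cross product of horizontal and vertical neighbour differences, shape (H-1, W-1, 3)
    P = backproject_depth(depth, K)
    dx = P[:-1, 1:] - P[:-1, :-1]
    dy = P[1:, :-1] - P[:-1, :-1]
    n = np.cross(dx, dy)
    n /= np.linalg.norm(n, axis=-1, keepdims=True)
    facing_away = (n * P[:-1, :-1]).sum(axis=-1) > 0   # camera centre is the origin
    n[facing_away] *= -1
    return n

def normal_prior_loss(pred, prior):
    # 1 - cosine similarity, averaged over pixels
    return np.mean(1.0 - (pred * prior).sum(axis=-1))
```

For a fronto-parallel wall at constant depth every normal comes out as $(0, 0, -1)$, pointing back at the camera.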

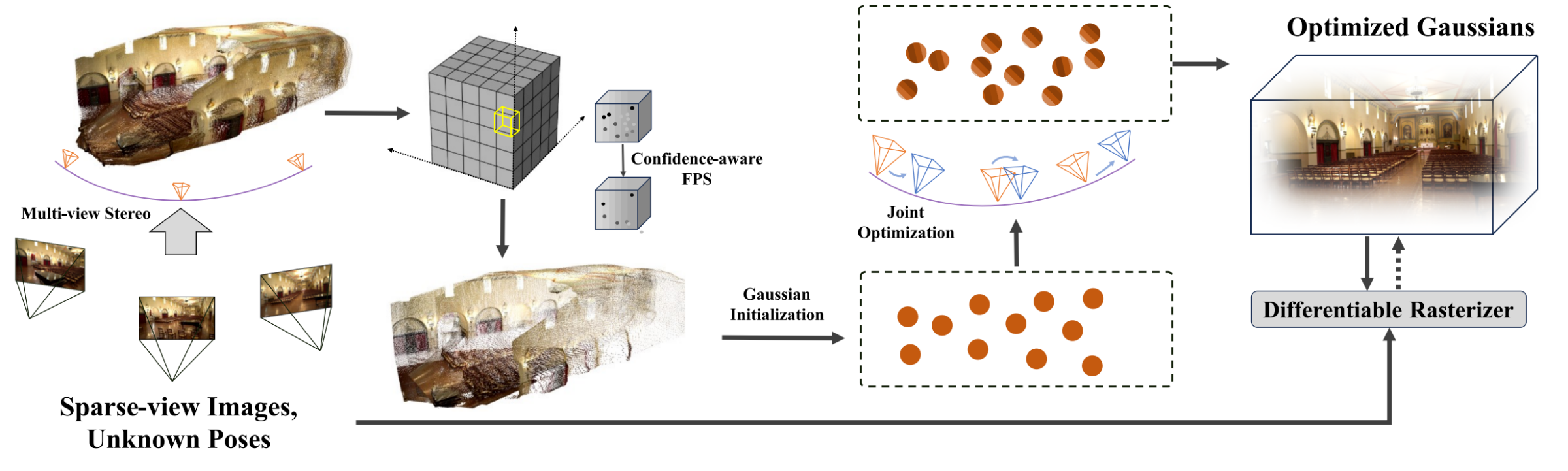

InstantSplat(Sparse-view)

InstantSplat: Sparse-view SfM-free Gaussian Splatting in Seconds

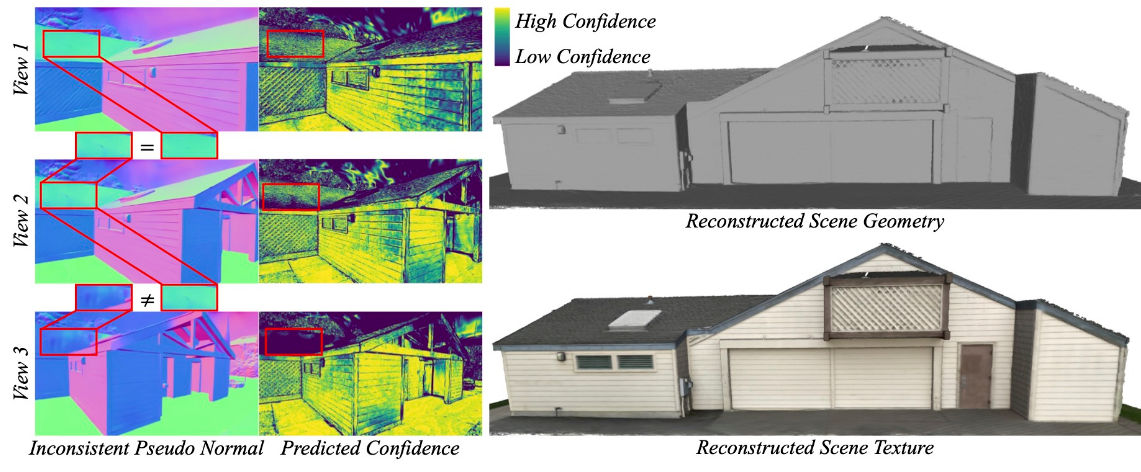

VCR-GauS

PGSR

PGSR: Planar-based Gaussian Splatting for Efficient and High-Fidelity Surface Reconstruction

TrimGS

- Gaussian Trimming

- Scale-driven Densification

- Normal Regularization

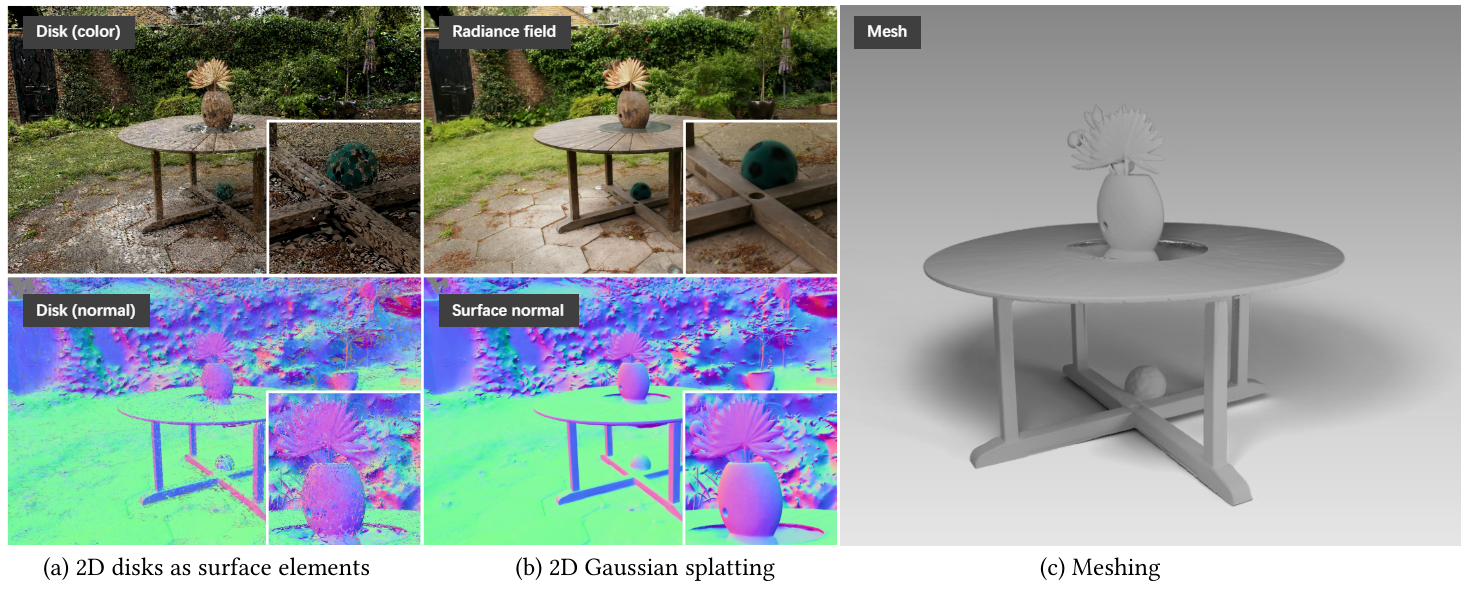

SuGaR

Extracts meshes from 3D Gaussian Splatting

2DGS

and unofficial implementation TimSong412/2D-surfel-gaussian

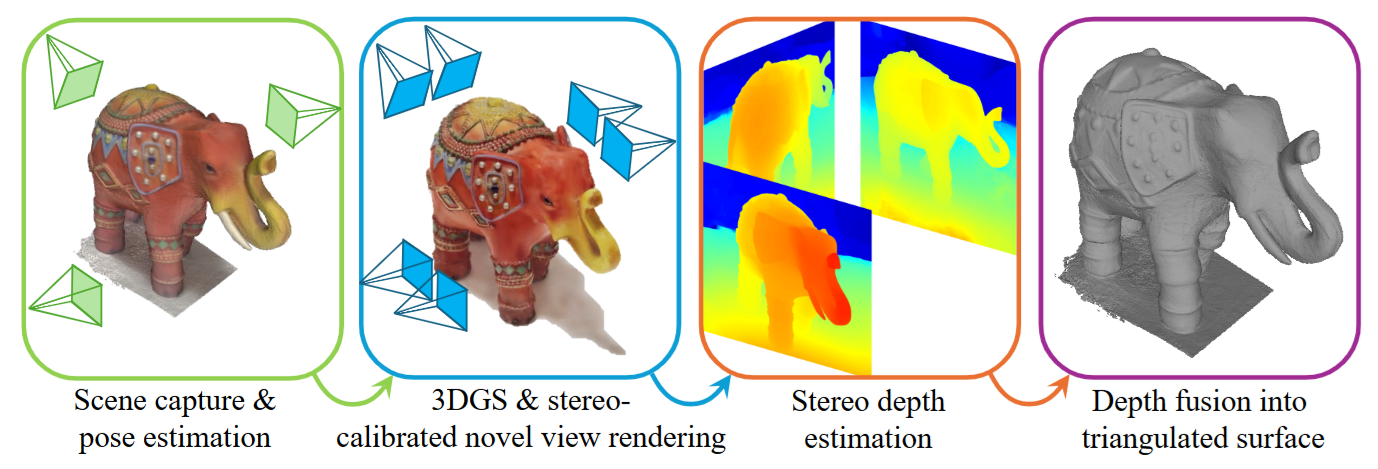

GS2mesh

Surface Reconstruction from Gaussian Splatting via Novel Stereo Views

EVER (VR+Primitives)

Exact Volumetric Ellipsoid Rendering - Alexander Mai’s Homepage

Renders a primitive-based representation (as in 3DGS) with volume rendering (as in NeRF)

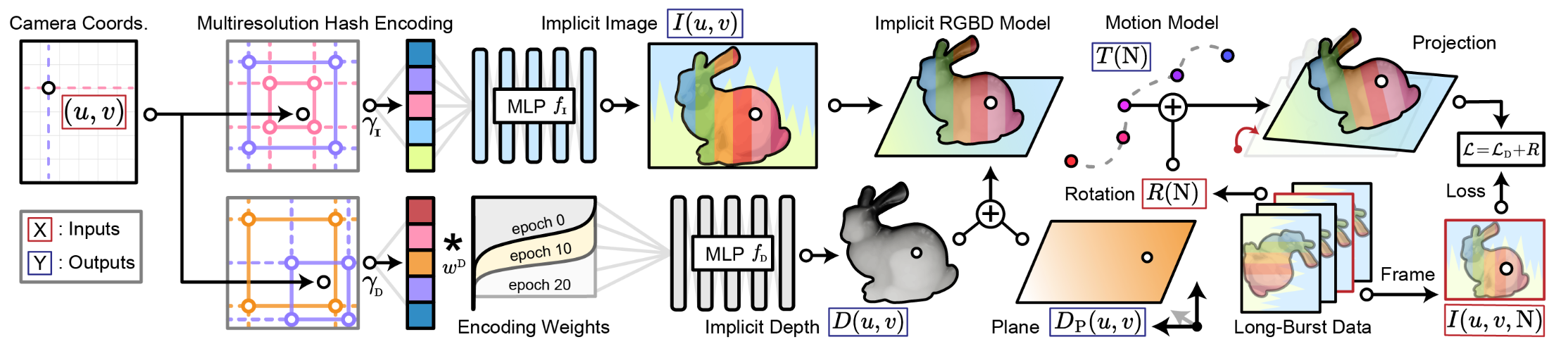

Depth-based

Shakes on a Plane

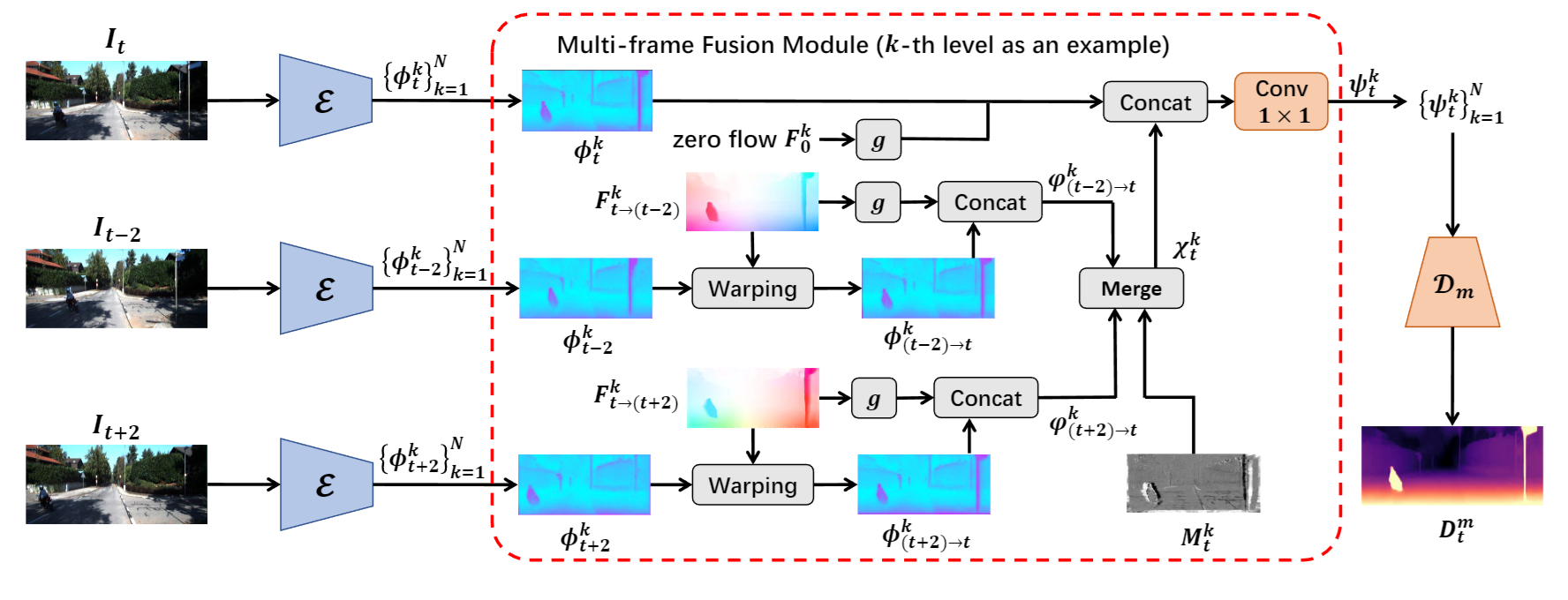

Mono-ViFI

Mesh-based

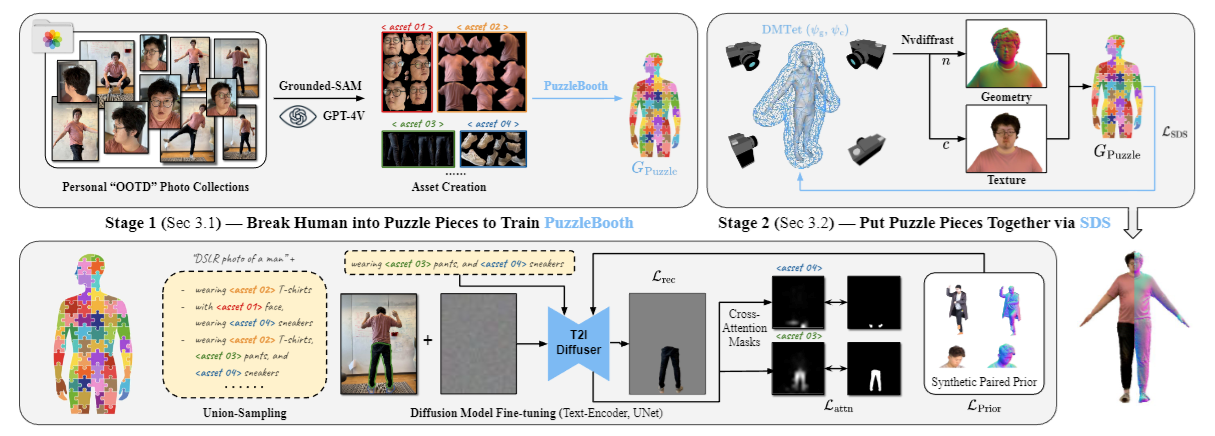

PuzzleAvatar

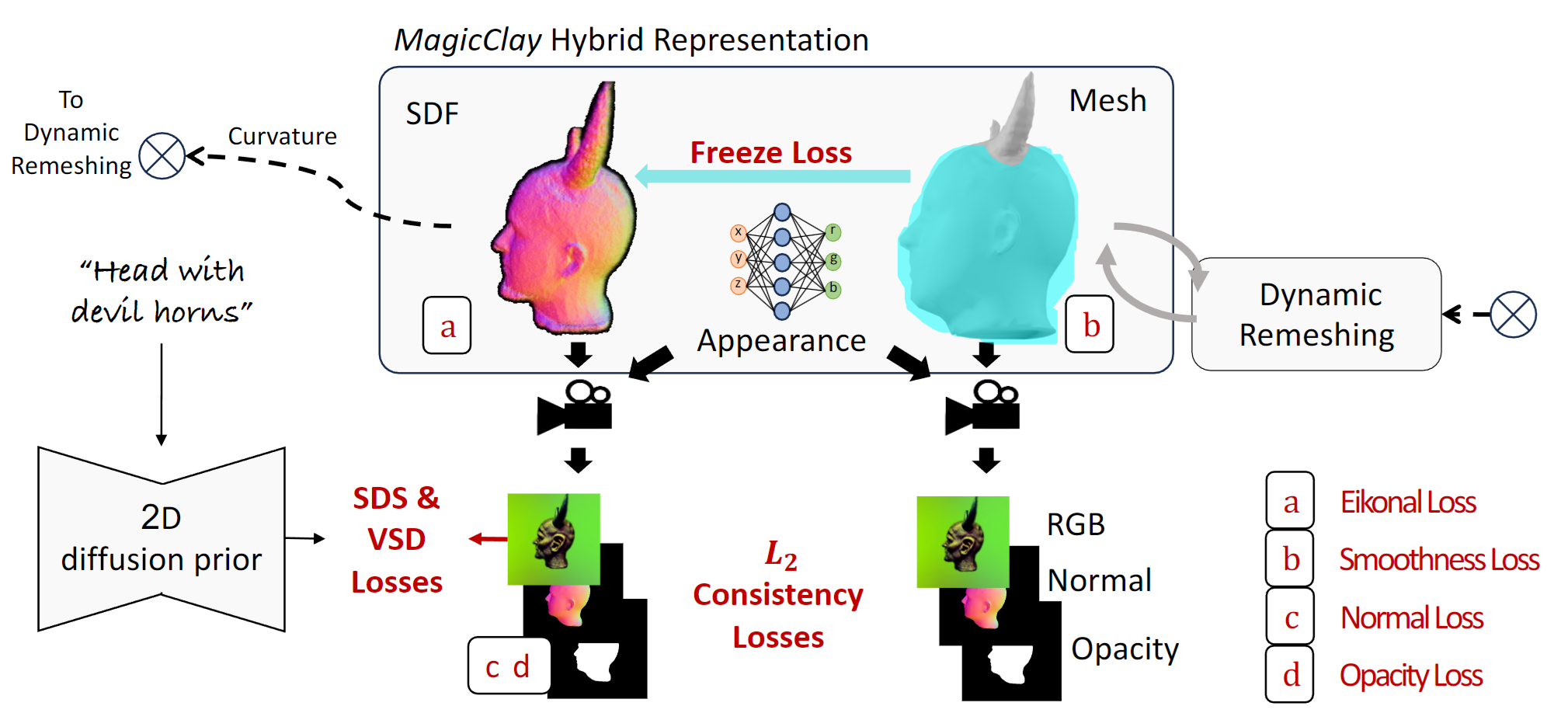

MagicClay

MagicClay: Sculpting Meshes With Generative Neural Fields

- ROAR: Robust Adaptive Reconstruction of Shapes Using Planar Projections; MagicClay uses this method to apply SDF updates to the mesh's local topology

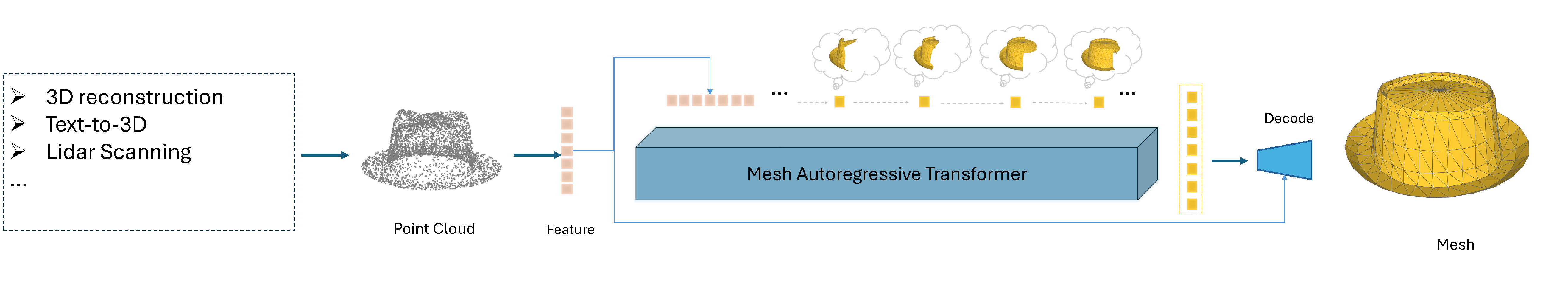

MeshAnything

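Compared with methods such as MeshGPT that directly generate Artist-Created Meshes (AMs), MeshAnything avoids learning the complex distribution of 3D shapes. Instead, it focuses on efficiently constructing shapes with optimized topology, which significantly reduces the training burden and improves scalability.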

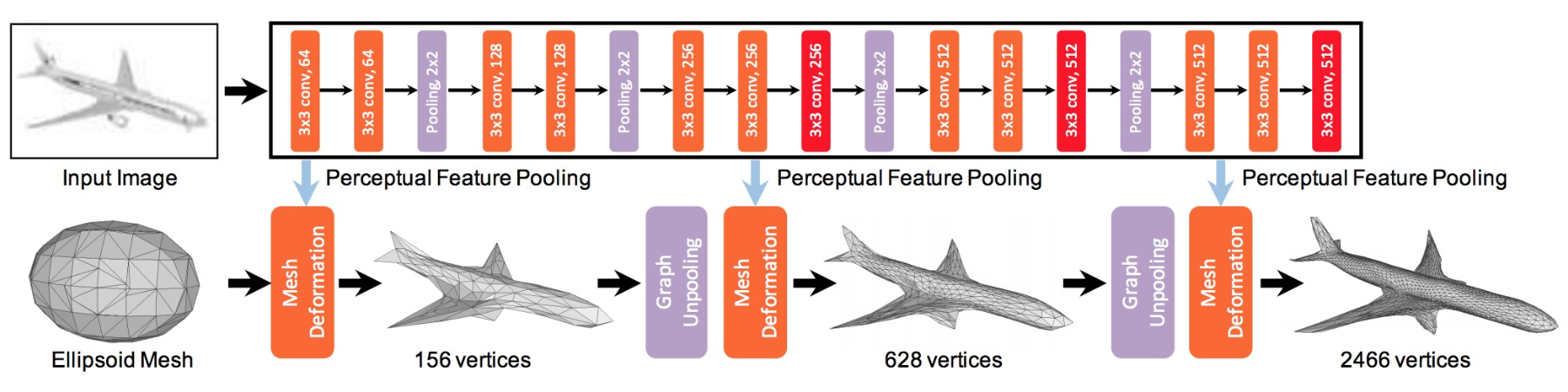

Pixel2Mesh

Pixel2Mesh (nywang16.github.io)

Pixel2Mesh: Generating 3D Mesh Models from Single RGB Images (readpaper.com)

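We propose an end-to-end deep learning architecture that produces a 3D shape as a triangular mesh from a single color image. Limited by the nature of deep neural networks, previous methods usually represent 3D shapes as volumes or point clouds, and converting them to the more ready-to-use mesh model is non-trivial. Unlike existing methods, our network represents the 3D mesh in a graph-based convolutional neural network and produces correct geometry by progressively deforming an ellipsoid, leveraging perceptual features extracted from the input image. We adopt a coarse-to-fine strategy to keep the whole deformation procedure stable, and define various mesh-related losses that capture properties at different levels to guarantee visually appealing and physically accurate 3D geometry. Extensive experiments show that our method not only qualitatively produces mesh models with better details, but also achieves higher 3D shape estimation accuracy than the state of the art.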
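The graph-based convolution that deforms the ellipsoid updates each vertex from itself and its mesh neighbours, $f_p' = w_0 f_p + \sum_{q \in \mathcal{N}(p)} w_1 f_q$. The NumPy sketch below implements that layer on a dense adjacency matrix built from triangle faces, plus one hypothetical deformation step that predicts per-vertex offsets. The perceptual feature pooling from the image, the G-ResNet depth and the coarse-to-fine unpooling are all omitted.

```python
import numpy as np

def adjacency_from_faces(faces, num_vertices):
    # Symmetric 0/1 adjacency from triangle edges
    A = np.zeros((num_vertices, num_vertices))
    for a, b, c in faces:
        for i, j in ((a, b), (b, c), (c, a)):
            A[i, j] = A[j, i] = 1.0
    return A

def graph_conv(features, A, w0, w1):
    # f_p' = w0 f_p + sum over neighbours q of w1 f_q
    return features @ w0 + A @ features @ w1

def deform(vertices, features, A, w0, w1, w_out):
    # Hypothetical single step: graph conv + ReLU, then a linear head for xyz offsets
    h = np.maximum(graph_conv(features, A, w0, w1), 0.0)
    return vertices + h @ w_out
```

A dense adjacency is fine for illustration; real meshes with thousands of vertices would use a sparse matrix.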

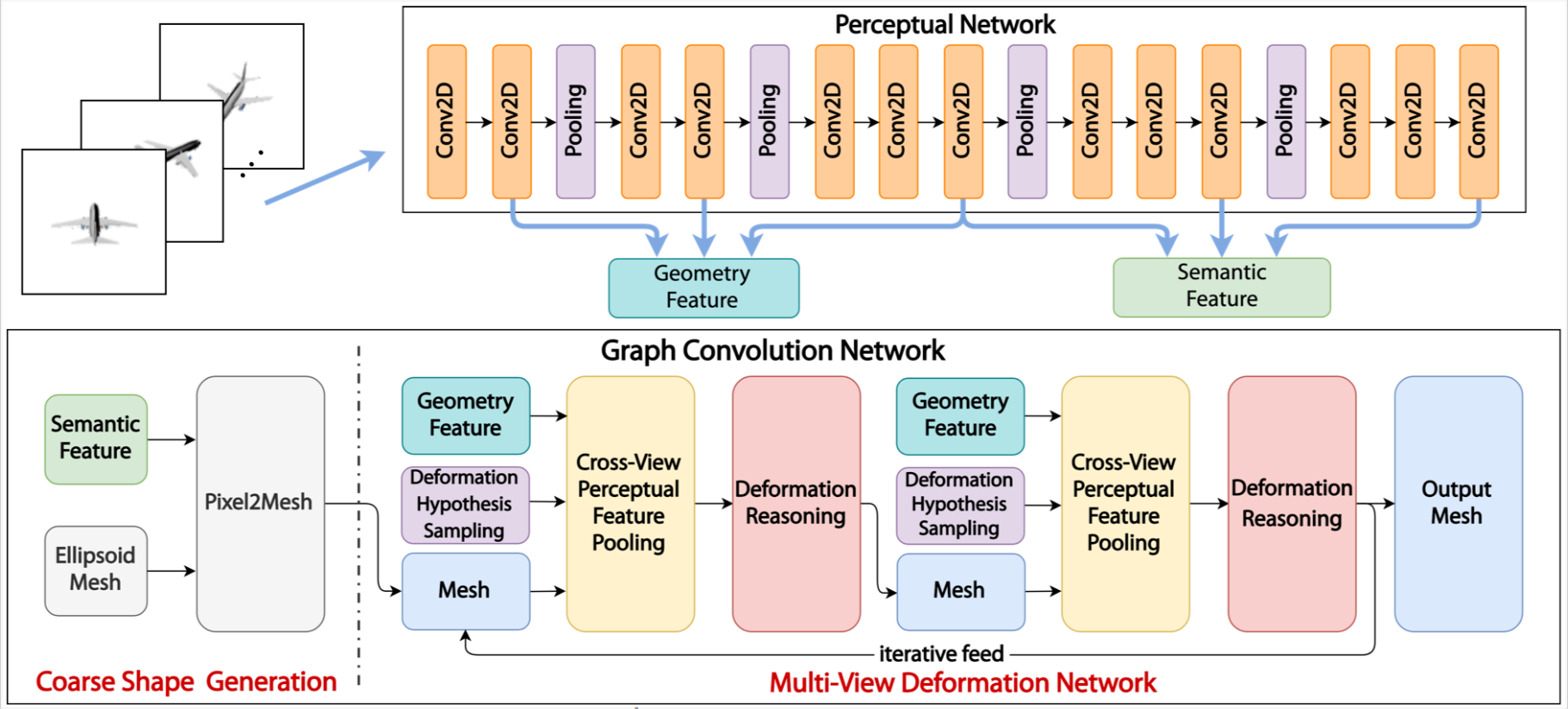

Pixel2Mesh++

Pixel2Mesh++ (walsvid.github.io)

Voxel-based

Voxurf